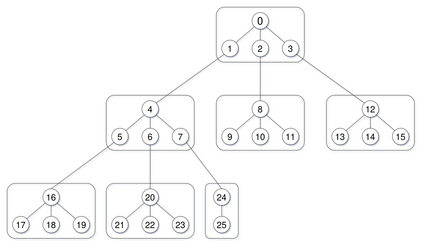

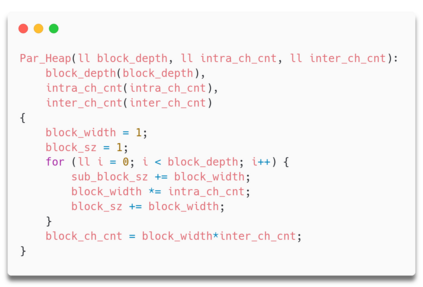

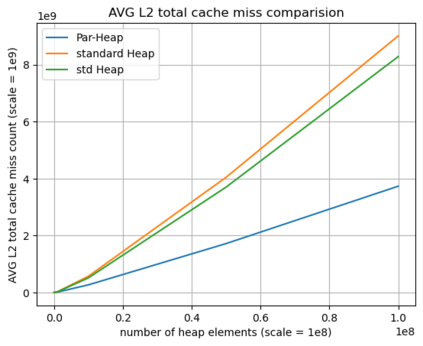

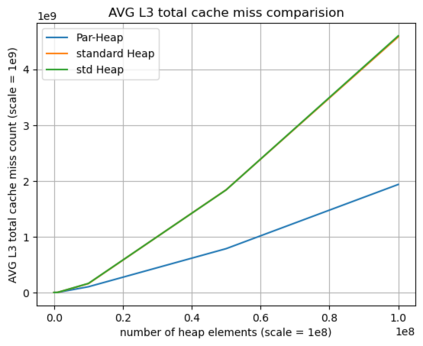

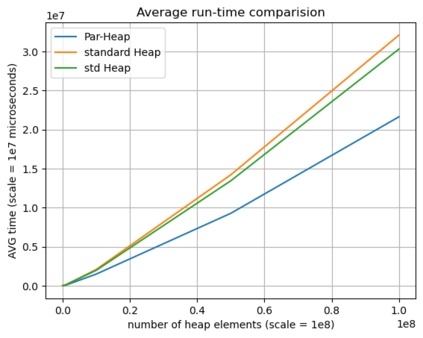

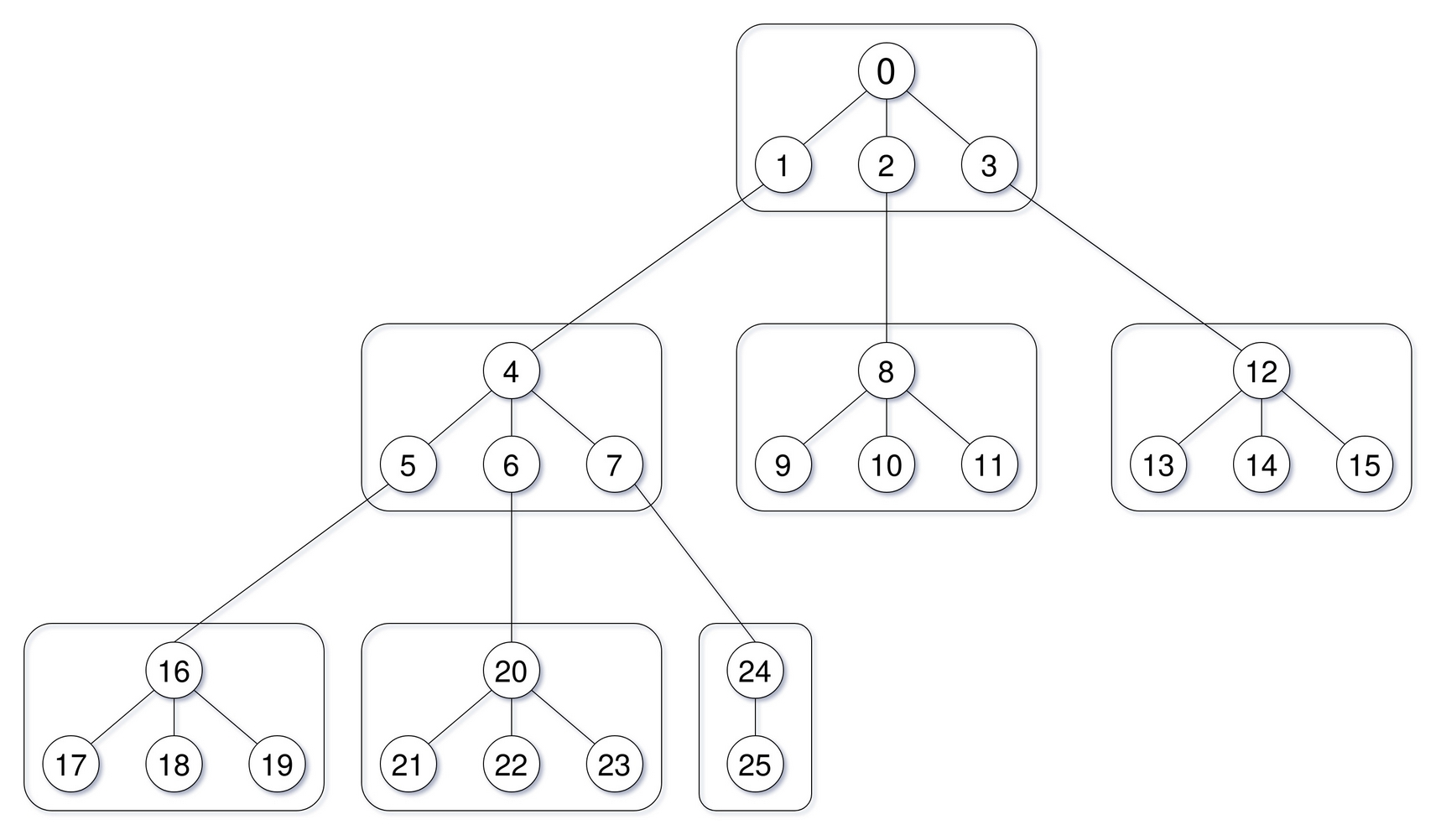

Priority queues are fundamental data structures with widespread applications, including graph algorithms and network simulations, and their performance critically affects the overall efficiency of these algorithms. Traditional priority queue implementations often suffer from cache-related performance bottlenecks, especially on modern computers with hierarchical memory systems. To address this challenge, we propose an adaptive cache-friendly priority queue that uses three adjustable parameters to tailor the heap tree structure to specific system conditions, trading off cache friendliness against the average number of CPU instructions needed to carry out the data structure's operations. Compared with the conventional implicit binary heap, our approach significantly reduces the number of cache misses and improves performance, as demonstrated through rigorous testing with the heap sort algorithm. We employ a search method to determine the optimal parameter values, eliminating the need for manual configuration. Furthermore, our data structure is laid out in a single compact block of memory, which minimizes memory consumption, and it can grow dynamically without costly reconstruction of the heap tree. This adaptability makes our cache-friendly priority queue particularly well suited to modern computing environments with diverse system architectures.
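The abstract does not specify what the three parameters are or how the tree is laid out, so the following is not the authors' structure. As a point of reference only, it sketches a well-known structure with the same kind of tradeoff: an implicit d-ary min-heap stored in one contiguous, growable array. The single arity parameter `d` is a hypothetical stand-in for the paper's parameters. A larger `d` makes the tree shallower and keeps each node's children adjacent in memory (fewer cache lines touched per sift-down), at the cost of more comparisons per level. The `best_arity` helper is a hypothetical exhaustive search that times heap sort for a few candidate arities; in CPython the timings mostly reflect interpreter overhead rather than cache behavior, so treat it as an outline of the tuning loop, not a benchmark.

```python
import random
import time
from typing import Dict, List, Sequence


class DaryHeap:
    """Implicit d-ary min-heap in one contiguous list (illustrative sketch).

    Hypothetical: `d` stands in for the paper's unspecified tuning parameters.
    Children of node i live at indices d*i + 1 .. d*i + d, so siblings are adjacent.
    """

    def __init__(self, d: int = 4) -> None:
        if d < 2:
            raise ValueError("arity d must be at least 2")
        self.d = d
        self.data: List[int] = []  # grows by appending; no tree rebuild is needed

    def __len__(self) -> int:
        return len(self.data)

    def push(self, x: int) -> None:
        data, d = self.data, self.d
        data.append(x)
        i = len(data) - 1
        # Sift up: a larger d means fewer levels to climb.
        while i > 0:
            parent = (i - 1) // d
            if data[parent] <= x:
                break
            data[i] = data[parent]  # move parent down into the hole
            i = parent
        data[i] = x

    def pop(self) -> int:
        data, d = self.data, self.d
        if not data:
            raise IndexError("pop from empty heap")
        top = data[0]
        last = data.pop()
        n = len(data)
        if n == 0:
            return top
        # Sift down: each level scans up to d contiguous children
        # (more comparisons per level, but fewer levels overall).
        i = 0
        while True:
            first = d * i + 1
            if first >= n:
                break
            end = min(first + d, n)
            child = min(range(first, end), key=data.__getitem__)
            if data[child] >= last:
                break
            data[i] = data[child]  # move smallest child up into the hole
            i = child
        data[i] = last
        return top


def heap_sort(values: Sequence[int], d: int = 4) -> List[int]:
    """Sort ascending by pushing every value, then popping until empty."""
    heap = DaryHeap(d)
    for v in values:
        heap.push(v)
    return [heap.pop() for _ in range(len(heap))]


def best_arity(candidates: Sequence[int] = (2, 4, 8, 16),
               n: int = 5_000, seed: int = 0) -> int:
    """Hypothetical exhaustive search: return the candidate arity with the fastest heap sort."""
    rng = random.Random(seed)
    sample = [rng.randrange(1_000_000) for _ in range(n)]
    timings: Dict[int, float] = {}
    for d in candidates:
        start = time.perf_counter()
        heap_sort(sample, d)
        timings[d] = time.perf_counter() - start
    return min(timings, key=timings.__getitem__)


if __name__ == "__main__":
    print(heap_sort([5, 3, 9, 1, 7], d=3))  # [1, 3, 5, 7, 9]
    print("fastest arity on this machine:", best_arity())
```

Because the whole heap is a single Python list, growth is just an amortized append, which loosely mirrors the abstract's point about growing without rebuilding the tree; a real cache-aware implementation would also control element size and alignment, which this sketch does not attempt.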