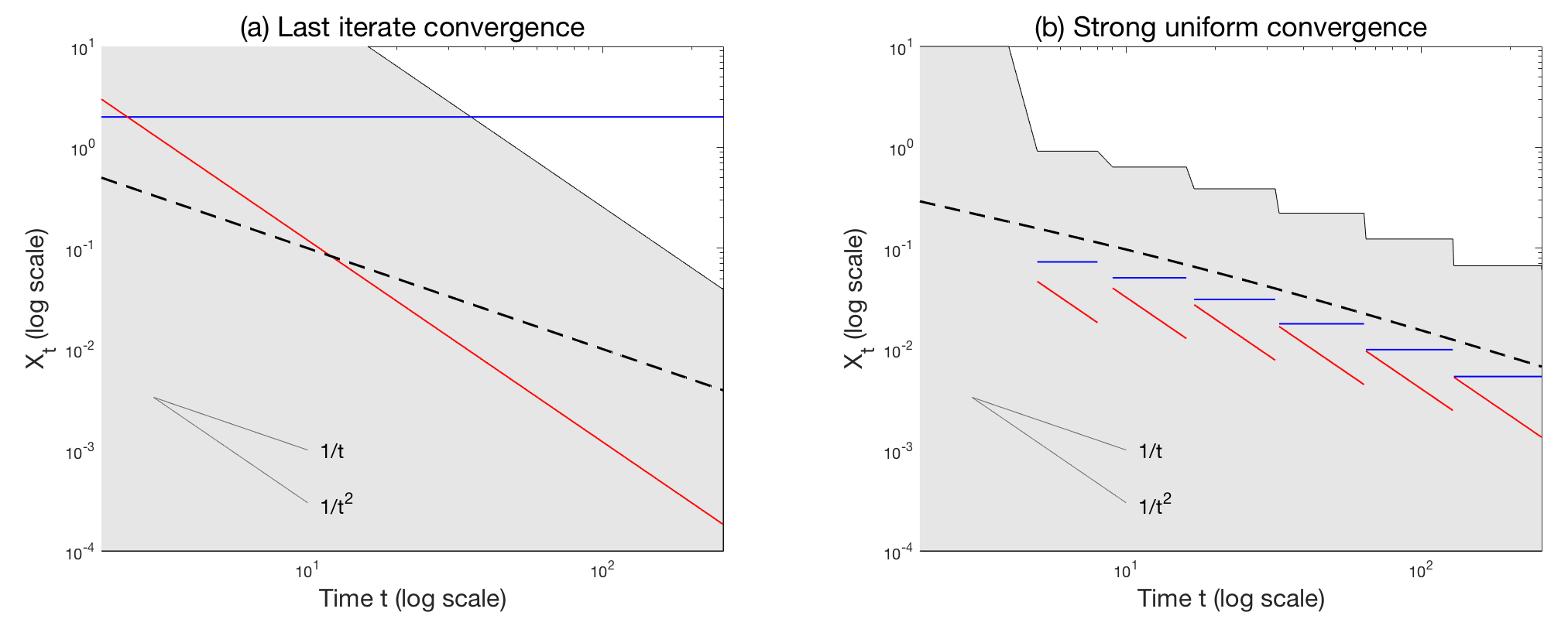

One of the challenges in analyzing a learning algorithm is the circular entanglement between the objective value and the stochastic noise. This is also known as the "chicken and egg" phenomenon. Traditionally, people tackle this issue with the special structure of the problem and hence the analysis is difficult to generalize. In this paper, we present a general framework for analyzing high-probability bounds for stochastic dynamics in learning algorithms. Our framework composes standard techniques from probability theory to give a streamlined three-step recipe with a general and flexible principle to tackle the "chicken and egg" problem. We demonstrate the power and the flexibility of our framework by giving unifying analysis for three very different learning problems with both the last iterate and the strong uniform high probability convergence guarantee. The problems are stochastic gradient descent for strongly convex functions, streaming principal component analysis and linear bandit with stochastic gradient descent updates. We either improve or match the state-of-the-art bounds on all three dynamics.

翻译:分析学习算法的挑战之一是客观价值和随机噪音之间的循环纠缠。 这也被称为“ 鸡蛋和鸡蛋” 现象。 传统上, 人们用问题的特殊结构来解决这个问题, 因此分析很难概括。 在本文中, 我们提出了一个用于分析学习算法中随机动态的高概率界限的总框架。 我们的框架从概率理论中形成了标准技术, 提供了简化的三步配方, 并规定了解决“ 鸡蛋和鸡蛋” 问题的一般和灵活原则。 我们通过对三种截然不同的学习问题进行统一分析, 分析最后的迭代和高度一致的概率保证, 显示了我们框架的力量和灵活性。 问题是, 强共性函数的随机梯度梯度下降, 流主要组件分析, 以及直线带与随机梯度梯度梯度下位更新。 我们要么改进, 要么匹配所有三种动态的状态。