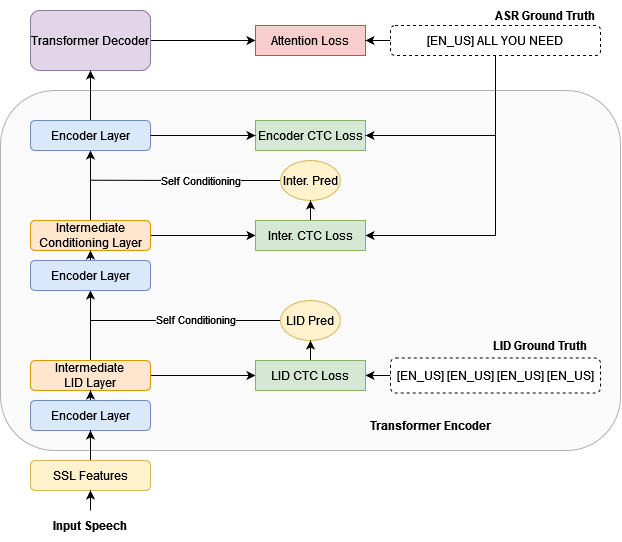

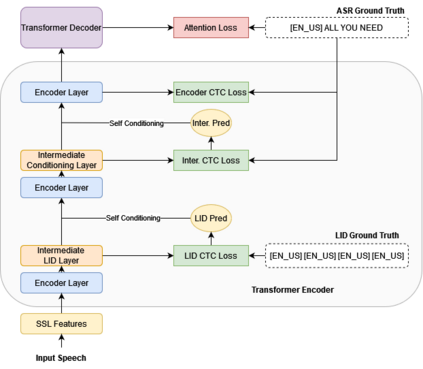

Multilingual Automatic Speech Recognition (ASR) models have extended the usability of speech technologies to a wide variety of languages. With how many languages these models have to handle, however, a key to understanding their imbalanced performance across different languages is to examine if the model actually knows which language it should transcribe. In this paper, we introduce our work on improving performance on FLEURS, a 102-language open ASR benchmark, by conditioning the entire model on language identity (LID). We investigate techniques inspired from recent Connectionist Temporal Classification (CTC) studies to help the model handle the large number of languages, conditioning on the LID predictions of auxiliary tasks. Our experimental results demonstrate the effectiveness of our technique over standard CTC/Attention-based hybrid mod- els. Furthermore, our state-of-the-art systems using self-supervised models with the Conformer architecture improve over the results of prior work on FLEURS by a relative 28.4% CER. Trained models are reproducible recipes are available at https://github.com/ espnet/espnet/tree/master/egs2/fleurs/asr1.

翻译:多语言自动语音识别(ASR)模式将语音技术的使用范围扩大到多种语言,然而,由于这些模式需要处理多少种语言,理解其在不同语言之间不平衡性表现的关键是检查模型是否实际知道它应该转录哪种语言。在本文中,我们介绍我们的工作,通过调整整个语言身份模式(LID),改进102种语言开放的ASR基准FLEURS的性能。我们调查了从最近连接时间分类(CTC)研究中汲取的技术,以帮助模型处理大量语言,以LID对辅助任务的预测为条件。我们的实验结果表明,我们的技术比标准的CTC/Attention-bas-MOD-els系统有效。此外,我们最先进的技术系统使用自我监督的模式,与Conform结构一起,通过相对28.4%的CER改进了FLEURS先前工作的结果。经过培训的模型是可再生的配方,见https://github.com/ espnet/espnet/stree/master/egues2/fres/ge2/frosururs/ 。</s>