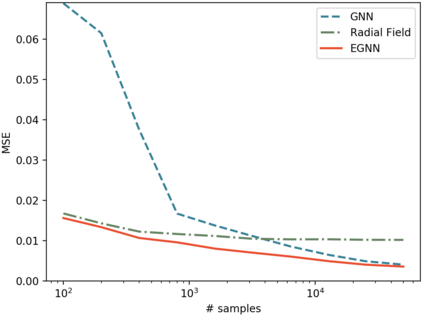

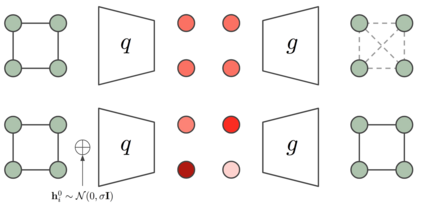

This paper introduces E(n)-Equivariant Graph Neural Networks (EGNNs), a new model for learning graph neural networks that are equivariant to rotations, translations, reflections, and permutations. In contrast with existing methods, our work does not require computationally expensive higher-order representations in intermediate layers, yet it still achieves competitive or better performance. In addition, whereas existing methods are limited to equivariance on three-dimensional spaces, our model scales easily to higher-dimensional spaces. We demonstrate the effectiveness of our method on dynamical systems modelling, representation learning in graph autoencoders, and predicting molecular properties.
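To make the equivariance claim concrete, here is a minimal NumPy sketch that checks the property numerically on a toy layer. It is not the authors' implementation. The names `toy_layer` and `check_equivariance` are hypothetical, random linear maps followed by `tanh` stand in for learned networks, and the graph is assumed to be fully connected. The layer form (messages built only from node features and squared distances, coordinates updated along relative position vectors) is an assumption of this sketch, since the abstract does not spell out the layer equations.

```python
import numpy as np

def toy_layer(h, x, W_e, W_x, W_h):
    """One toy message-passing step on a fully connected graph (illustrative only).

    h: (N, F) node features, expected to be invariant to rigid motions.
    x: (N, n) node coordinates in n-dimensional space.
    """
    N = x.shape[0]
    diff = x[:, None, :] - x[None, :, :]             # (N, N, n): x_i - x_j
    d2 = np.sum(diff ** 2, axis=-1, keepdims=True)   # (N, N, 1): squared distances
    h_i = np.repeat(h[:, None, :], N, axis=1)        # (N, N, F)
    h_j = np.repeat(h[None, :, :], N, axis=0)        # (N, N, F)
    # Messages depend only on invariant quantities: features and distances.
    m = np.tanh(np.concatenate([h_i, h_j, d2], axis=-1) @ W_e)
    m = m * (1.0 - np.eye(N)[:, :, None])            # drop self-edges
    # Coordinates move along relative positions, scaled by a scalar per edge.
    w = np.tanh(m @ W_x)                             # (N, N, 1)
    x_new = x + np.sum(diff * w, axis=1) / (N - 1)
    # Features are updated from aggregated messages only.
    m_agg = m.sum(axis=1)                            # (N, M)
    h_new = h + np.tanh(np.concatenate([h, m_agg], axis=-1) @ W_h)
    return h_new, x_new

def check_equivariance(n=5, num_nodes=6, feat=4, msg=8, seed=0):
    """Compare transform-then-apply with apply-then-transform for the toy layer."""
    rng = np.random.default_rng(seed)
    h = rng.normal(size=(num_nodes, feat))
    x = rng.normal(size=(num_nodes, n))
    W_e = 0.1 * rng.normal(size=(2 * feat + 1, msg))
    W_x = 0.1 * rng.normal(size=(msg, 1))
    W_h = 0.1 * rng.normal(size=(feat + msg, feat))
    # Random orthogonal matrix: a rotation, possibly combined with a reflection.
    Q, _ = np.linalg.qr(rng.normal(size=(n, n)))
    t = rng.normal(size=(1, n))                      # random translation
    perm = rng.permutation(num_nodes)                # random node relabelling

    h_out, x_out = toy_layer(h, x, W_e, W_x, W_h)
    h_g, x_g = toy_layer(h, x @ Q.T + t, W_e, W_x, W_h)
    h_p, x_p = toy_layer(h[perm], x[perm], W_e, W_x, W_h)

    return {
        "features_invariant": bool(np.allclose(h_g, h_out)),
        "coords_equivariant": bool(np.allclose(x_g, x_out @ Q.T + t)),
        "permutation_equivariant": bool(
            np.allclose(h_p, h_out[perm]) and np.allclose(x_p, x_out[perm])
        ),
    }

if __name__ == "__main__":
    print(check_equivariance(n=5))
```

Calling `check_equivariance(n=3)` or `check_equivariance(n=5)` runs the same check in different dimensions. Nothing in the sketch is specific to three dimensions, which is the sense in which such a layer extends to higher-dimensional spaces without higher-order representations.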
