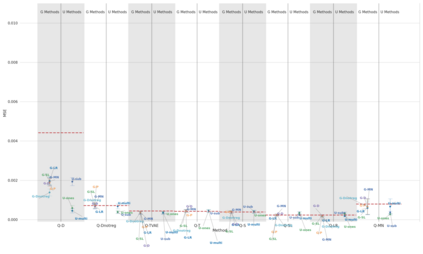

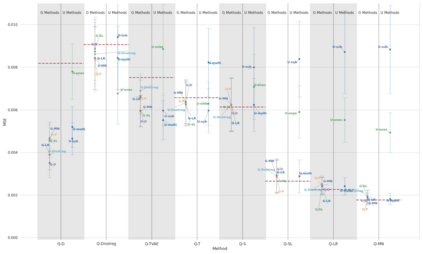

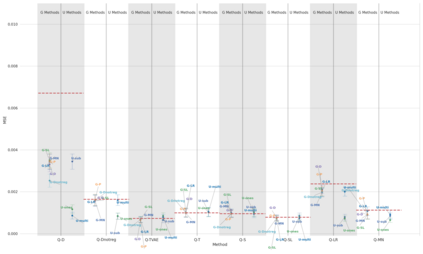

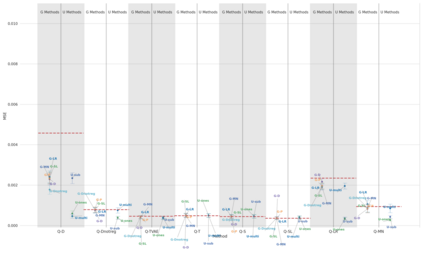

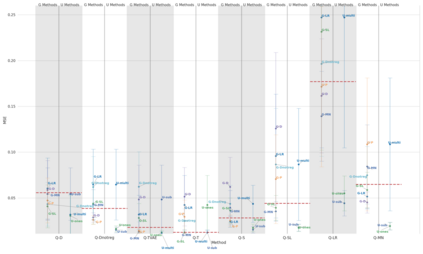

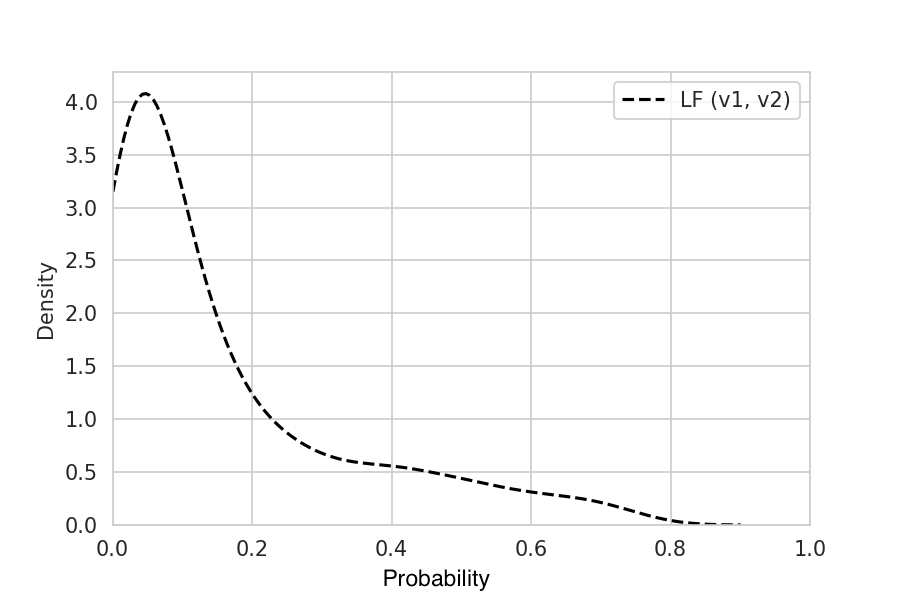

Parameter estimation in empirical fields is usually undertaken using parametric models, which readily facilitate statistical inference. Unfortunately, such models are unlikely to be flexible enough to adequately model real-world phenomena, and may yield biased estimates. Conversely, non-parametric approaches are flexible but do not readily facilitate statistical inference and may still exhibit residual bias. We explore the potential for Influence Functions (IFs) to (a) improve initial estimators without needing more data, (b) increase model robustness, and (c) facilitate statistical inference. We begin with a broad introduction to IFs, and propose a neural network method, 'MultiNet', which seeks the diversity of an ensemble using a single architecture. We also introduce variants on the IF update step, which we call 'MultiStep', and provide a comprehensive evaluation of different approaches. The improvements are found to be dataset dependent, indicating an interaction between the methods used and the nature of the data-generating process. Our experiments highlight the need for practitioners to check the consistency of their findings, potentially by undertaking multiple analyses with different combinations of estimators. We also show that it is possible to improve existing neural networks for 'free', without needing more data and without needing to retrain them.
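To make the idea of an IF update step concrete, here is a minimal sketch of the standard one-step correction for one specific target, the average treatment effect (ATE). It is not the MultiNet or MultiStep method described above. The choice of target, the simulated data, the deliberately poor initial outcome model, the use of the true propensity score, and all names (`one_step_ate`, `q1`, `q0`, `g`) are assumptions made for illustration only.

```python
import numpy as np

def one_step_ate(y, t, q1, q0, g):
    """One-step influence-function update of a plug-in ATE estimate.

    y  : observed outcomes
    t  : binary treatment indicator (0/1)
    q1 : initial predictions of E[Y | T=1, X]
    q0 : initial predictions of E[Y | T=0, X]
    g  : propensity scores P(T=1 | X)
    """
    g = np.clip(g, 0.01, 0.99)                 # guard against extreme weights
    plug_in = np.mean(q1 - q0)                 # initial (plug-in) estimate
    q_t = np.where(t == 1, q1, q0)             # prediction for the observed arm
    weight = t / g - (1 - t) / (1 - g)         # inverse-propensity weight
    # Estimated efficient influence function of the ATE at each observation.
    phi = weight * (y - q_t) + (q1 - q0) - plug_in
    updated = plug_in + phi.mean()             # one-step update, same data
    se = phi.std(ddof=1) / np.sqrt(len(y))     # IF-based standard error
    return plug_in, updated, se

# Simulated data (assumption): one confounder x, true ATE = 2.
rng = np.random.default_rng(0)
n = 20000
x = rng.normal(size=n)
g_true = 1.0 / (1.0 + np.exp(-x))
t = rng.binomial(1, g_true)
y = 2.0 * t + x + rng.normal(size=n)

# Deliberately biased initial outcome model: it ignores the confounder x.
q1 = np.full(n, y[t == 1].mean())
q0 = np.full(n, y[t == 0].mean())

plug_in, updated, se = one_step_ate(y, t, q1, q0, g_true)
print(f"plug-in: {plug_in:.3f}  one-step: {updated:.3f}  SE: {se:.3f}")
```

In this sketch the update reuses the same observations and leaves the initial model untouched, which is the sense in which the correction needs neither more data nor retraining. The sample standard deviation of the estimated influence function also gives an approximate standard error, so the same quantity supports inference. In practice the propensity score would itself have to be estimated, which this sketch sidesteps.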
