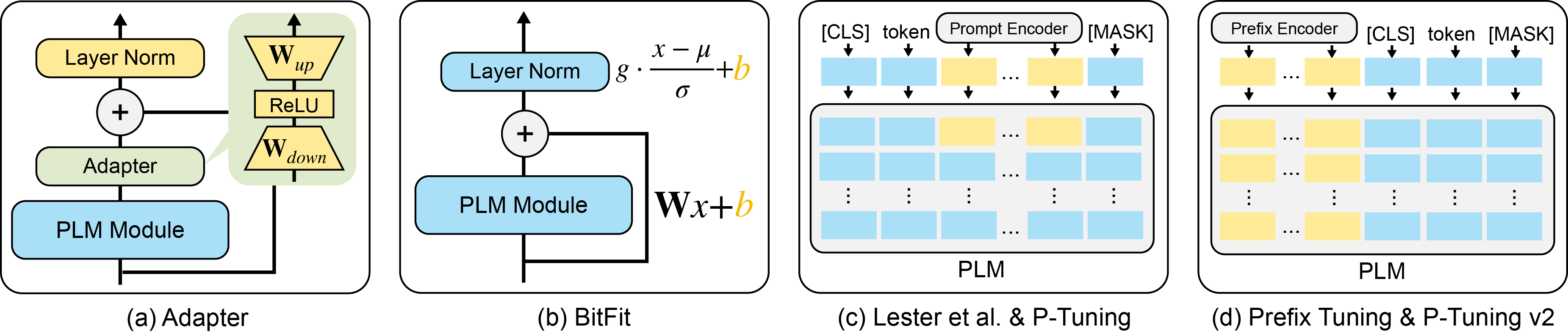

Prompt tuning updates only a few task-specific parameters in pre-trained models. It has achieved performance comparable to fine-tuning the full parameter set on both language understanding and generation tasks. In this work, we study prompt tuning for neural text retrievers. We introduce parameter-efficient prompt tuning for text retrieval across in-domain, cross-domain, and cross-topic settings. Through an extensive analysis, we show that the strategy can mitigate two issues faced by fine-tuning-based retrieval methods: parameter inefficiency and weak generalizability. Notably, it can significantly improve the out-of-domain zero-shot generalization of retrieval models. By updating only 0.1% of the model parameters, prompt tuning can help retrieval models generalize better than traditional methods in which all parameters are updated. Finally, to facilitate research on retrievers' cross-topic generalizability, we curate and release an academic retrieval dataset with 18K query-result pairs in 87 topics, making it the largest topic-specific dataset of its kind to date.
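To give a feel for what "updating only 0.1% of the model parameters" can mean in practice, the following back-of-the-envelope sketch counts trainable prompt parameters against a frozen backbone. Everything numeric here is an assumption made for illustration and does not come from the abstract: the backbone size (roughly 110M parameters, 12 layers, hidden size 768, as in a BERT-base-like encoder), the layer-wise prompt layout with two trainable vectors per prompt position per layer, and the helper names `prompt_param_count` and `trainable_fraction`.

```python
def prompt_param_count(prompt_len: int, hidden_size: int, num_layers: int,
                       per_layer_vectors: int = 2) -> int:
    """Count trainable prompt parameters for a layer-wise prompt.

    Assumption (illustrative only): every layer receives `prompt_len`
    trainable positions, each with `per_layer_vectors` vectors of size
    `hidden_size` (for example, one for keys and one for values).
    """
    return prompt_len * hidden_size * num_layers * per_layer_vectors


def trainable_fraction(prompt_params: int, backbone_params: int) -> float:
    """Fraction of all parameters that are updated when the backbone is frozen."""
    return prompt_params / (backbone_params + prompt_params)


# Hypothetical BERT-base-like encoder; these values are assumptions.
BACKBONE_PARAMS = 110_000_000
HIDDEN_SIZE = 768
NUM_LAYERS = 12

if __name__ == "__main__":
    for prompt_len in (1, 6, 32, 128):
        n = prompt_param_count(prompt_len, HIDDEN_SIZE, NUM_LAYERS)
        frac = trainable_fraction(n, BACKBONE_PARAMS)
        print(f"prompt_len={prompt_len:>3}  trainable={n:>9,}  fraction={frac:.4%}")
```

Under these assumed sizes, a prompt of about six positions per layer already lands at roughly 0.1% of the total parameter count, while the backbone itself receives no updates. The actual prompt design and sizes used in the work may differ.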
