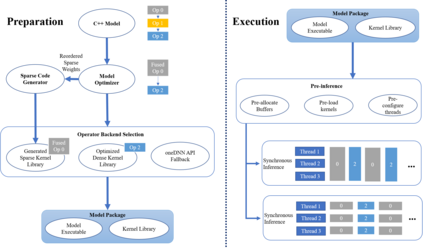

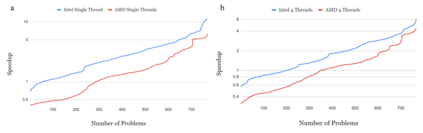

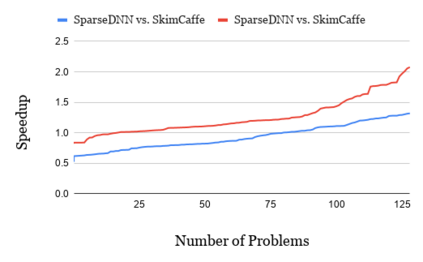

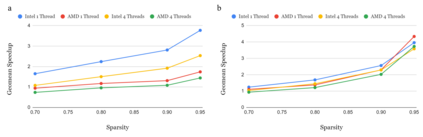

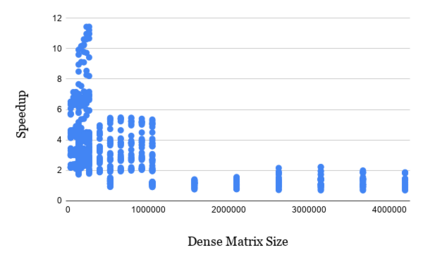

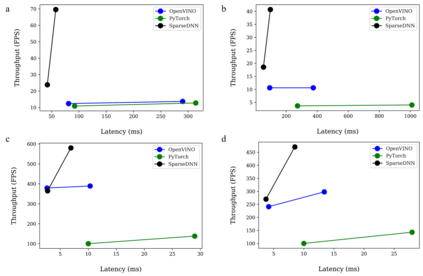

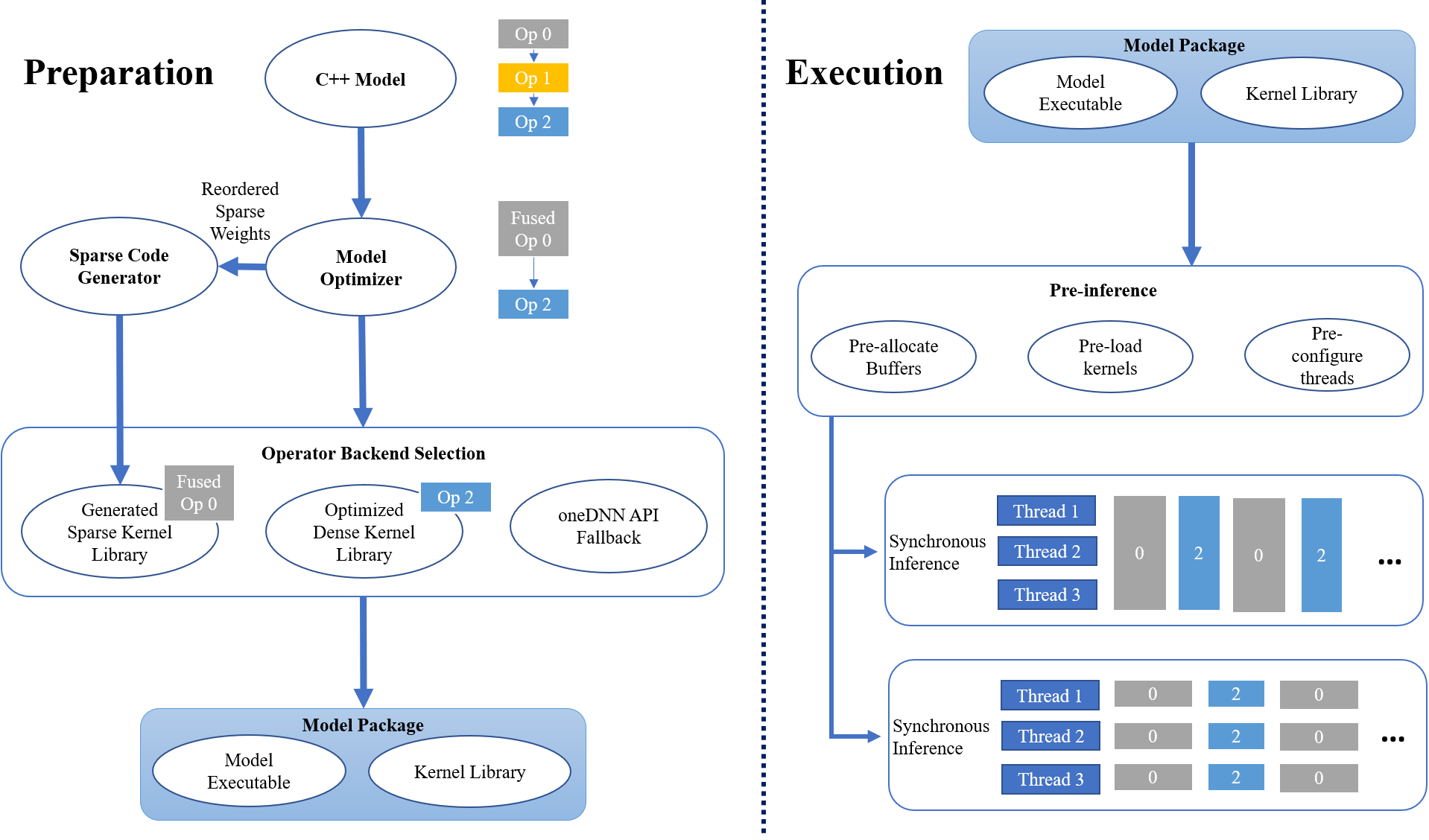

The last few years have seen gigantic leaps in algorithms and systems to support efficient deep learning inference. Pruning and quantization algorithms can now consistently compress neural networks by an order of magnitude. For a compressed neural network, a multitude of inference frameworks have been designed to maximize the performance of the target hardware. While we find mature support for quantized neural networks in production frameworks such as OpenVINO and MNN, support for pruned sparse neural networks is still lacking. To tackle this challenge, we present SparseDNN, a sparse deep learning inference engine targeting CPUs. We present both kernel-level optimizations with a sparse code generator to accelerate sparse operators and novel network-level optimizations catering to sparse networks. We show that our sparse code generator can achieve significant speedups over state-of-the-art sparse and dense libraries. On end-to-end benchmarks such as Huggingface pruneBERT, SparseDNN achieves up to 5x throughput improvement over dense inference with state-of-the-art OpenVINO.

翻译:过去几年中,在支持高效深层学习推断的算法和系统中出现了巨大的飞跃。 普鲁宁和量化算法现在可以不断地以一个数量级压缩神经网络。 对于压缩神经网络来说,已经设计了多种推论框架来最大限度地提高目标硬件的性能。 虽然我们发现在OpenVINO和MNN等生产框架中对量化神经网络的成熟支持,但仍然缺乏对经处理的稀薄神经网络的支持。为了应对这一挑战,我们提出了SprassDNN,这是一个以CPU为目标的稀有深层次的深层推论引擎。我们提出了内核级优化,配有稀薄的代码生成器,以加速稀薄的操作器和新颖的网络级优化,供稀薄的网络使用。我们表明,我们稀薄的代码生成器可以大大加速最先进的分散和密集的图书馆。在Huggingface PruneBERT等终端基准上,SprassenDNNN能够达到5x的超稠密度改善。