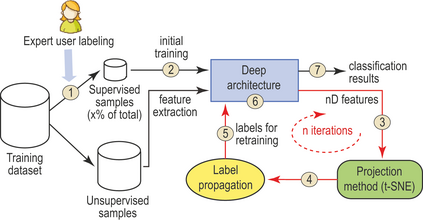

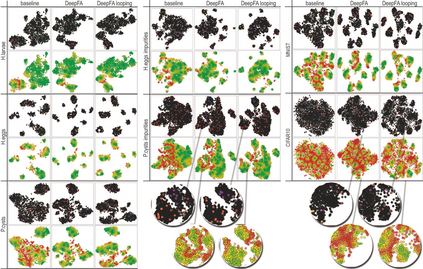

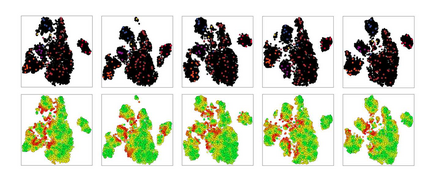

While convolutional neural networks need large labeled sets of training images, expert human supervision of such datasets can be very laborious. Proposed solutions propagate labels from a small set of supervised images to a large set of unsupervised ones, obtaining enough truly and artificially labeled samples to train a deep neural network model. Yet, such solutions need many supervised images for validation. We present a loop in which a deep neural network (VGG-16) is trained on a labeled set that contains more correctly labeled samples at each iteration. This set is created by using t-SNE to project the features of the network's last max-pooling layer into a 2D embedded space, in which labels are propagated by the Optimum-Path Forest (OPF) semi-supervised classifier. As the labeled set improves over the iterations, so do the features of the neural network. We show that this can significantly improve classification results on the test data of three challenging private datasets and two public ones, using only 1% to 5% of supervised samples.
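The label-propagation step of the loop can be illustrated with a minimal sketch, assuming the 2D t-SNE coordinates of all samples are already available. It treats every supervised sample as a root with cost 0 and uses the f_max path cost (the largest edge length along a path) on a complete graph, which is the core idea behind OPF-based propagation: each unsupervised sample takes the label of the root that reaches it through the "cheapest" chain of neighbors. This is a simplification, not the authors' implementation: the actual OPF semi-supervised classifier also selects prototypes (via a minimum spanning tree) rather than using every labeled sample as a root, and the function name `opf_propagate` is hypothetical.

```python
import heapq
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def opf_propagate(points: Sequence[Point],
                  labels: Sequence[Optional[int]]) -> List[Optional[int]]:
    """Hypothetical, simplified OPF-style label propagation in a 2D embedding.

    Assumption: every labeled point is a root (cost 0); this skips the
    prototype selection done by the real OPF semi-supervised classifier.
    Path cost is f_max: the largest Euclidean edge along the path.
    """
    n = len(points)
    cost = [math.inf] * n
    out: List[Optional[int]] = [None] * n
    heap: List[Tuple[float, int]] = []

    # Seed the forest with the supervised samples.
    for i, lab in enumerate(labels):
        if lab is not None:
            cost[i] = 0.0
            out[i] = lab
            heapq.heappush(heap, (0.0, i))

    done = [False] * n
    # Dijkstra-style Image Foresting Transform on the complete graph.
    while heap:
        c, s = heapq.heappop(heap)
        if done[s] or c > cost[s]:
            continue  # stale heap entry
        done[s] = True
        for t in range(n):
            if done[t]:
                continue
            # f_max: a path is as costly as its longest edge.
            new_cost = max(cost[s], math.dist(points[s], points[t]))
            if new_cost < cost[t]:
                cost[t] = new_cost
                out[t] = out[s]  # t is conquered by s and inherits its label
                heapq.heappush(heap, (new_cost, t))
    return out


if __name__ == "__main__":
    # Two well-separated clusters, one supervised sample in each.
    pts = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 5.0), (5.2, 5.1)]
    labs = [0, None, None, 1, None, None]
    print(opf_propagate(pts, labs))
```

Unlike nearest-labeled-neighbor assignment, the f_max cost lets labels travel along dense chains of samples in the embedding, which is why a 2D projection that separates classes well makes propagation more reliable. In the loop described above, the propagated labels would then be used to retrain VGG-16 before the next projection.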

翻译:虽然共生神经网络需要大量有标签的培训图像,但对这种数据集的专家人文监督可能非常费力。拟议解决方案将标签从一小组受监督的图像传播到一大批不受监督的图像中,以获得足够的真正和人工标签的样本来训练深神经网络模型。然而,这些解决方案需要许多受监督的图像来验证。我们提供了一个循环,从一组带有更正确的标签样本的迭代中培训一个深神经网络(VGG-16),通过使用t-SNE将其最后一个最大集合层的特征投射到一个2D内嵌空间,在其中使用Optimum-Path森林半受监督的分类器进行标签的传播。随着标签集沿迭代改进了神经网络的特征。我们表明,这可以大大改进三个有挑战性的私人数据集和两个公共数据集的测试数据的分类结果(仅使用1 ⁇ 至5 ⁇ )。