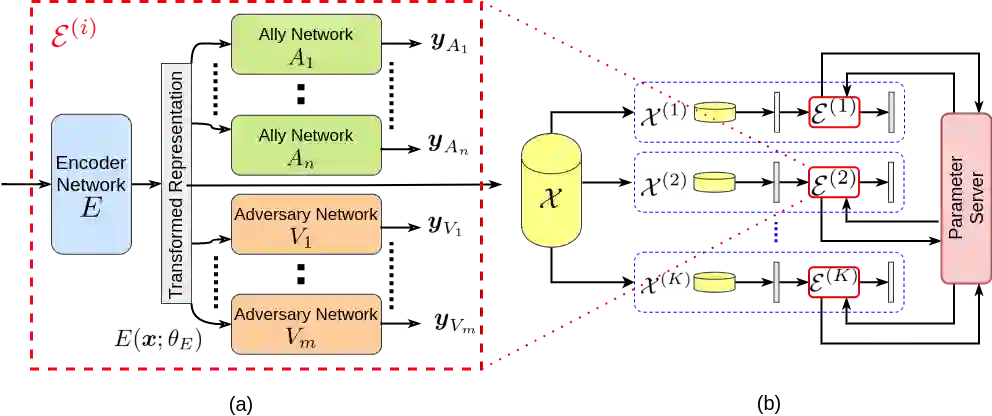

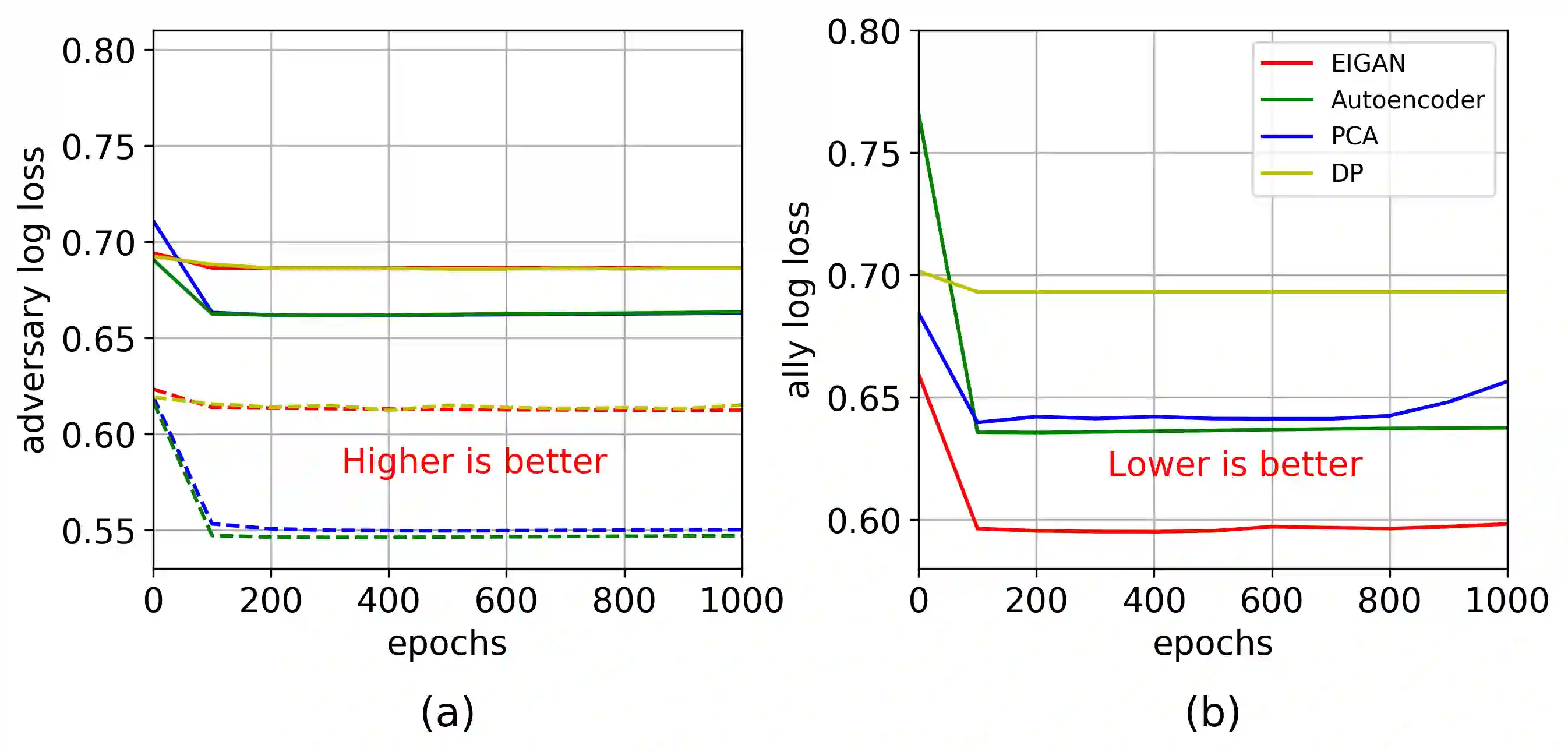

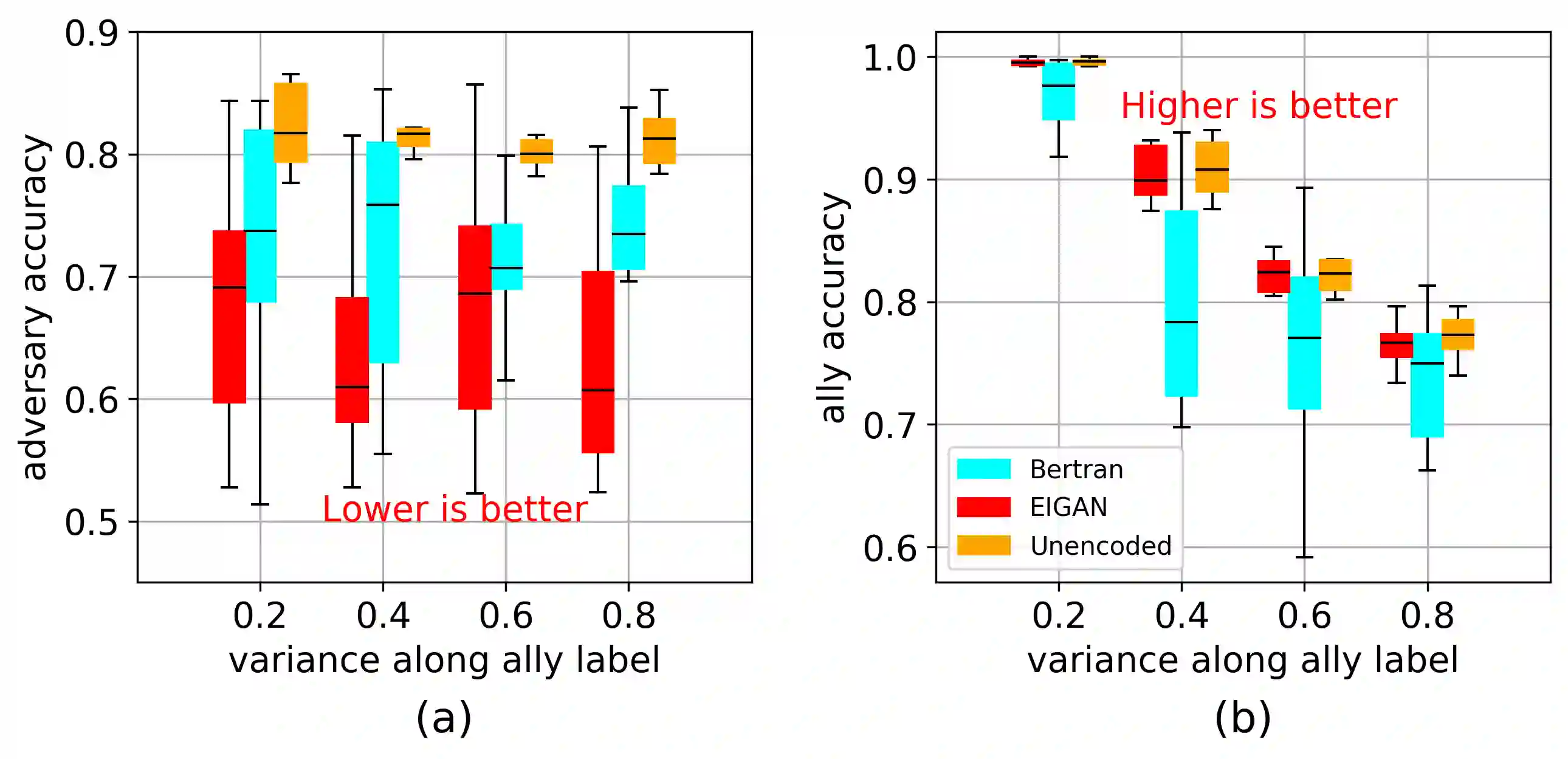

We study the problem of learning representations that are private yet informative, i.e., provide information about intended "ally" targets while hiding sensitive "adversary" attributes. We propose Exclusion-Inclusion Generative Adversarial Network (EIGAN), a generalized private representation learning (PRL) architecture that accounts for multiple ally and adversary attributes unlike existing PRL solutions. While centrally-aggregated dataset is a prerequisite for most PRL techniques, data in real-world is often siloed across multiple distributed nodes unwilling to share the raw data because of privacy concerns. We address this practical constraint by developing D-EIGAN, the first distributed PRL method that learns representations at each node without transmitting the source data. We theoretically analyze the behavior of adversaries under the optimal EIGAN and D-EIGAN encoders and the impact of dependencies among ally and adversary tasks on the optimization objective. Our experiments on various datasets demonstrate the advantages of EIGAN in terms of performance, robustness, and scalability. In particular, EIGAN outperforms the previous state-of-the-art by a significant accuracy margin (47% improvement), and D-EIGAN's performance is consistently on par with EIGAN under different network settings.

翻译:我们研究的是与现有PRL解决方案不同的多种盟友和敌对属性的学习代表性问题。虽然集中汇总的数据集是大多数PRL技术的先决条件,但现实世界中的数据往往分散在多个分布式的节点中,因为隐私问题而不愿分享原始数据。我们通过开发D-EIGAN来解决这一实际制约因素。D-EIGAN是第一个分布式PRL方法,在每一个节点上学习演示,而不传输源数据。我们从理论上分析在EIGAN和D-EIGAN最佳组合下的对手行为以及盟友和敌对任务对优化目标的依赖性的影响。我们对各种数据集的实验表明EIGAN在性能、稳健性和可扩展性方面的优势。特别是,EGAN在EGAN下以显著的准确性比值衡量了先前的州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州-州