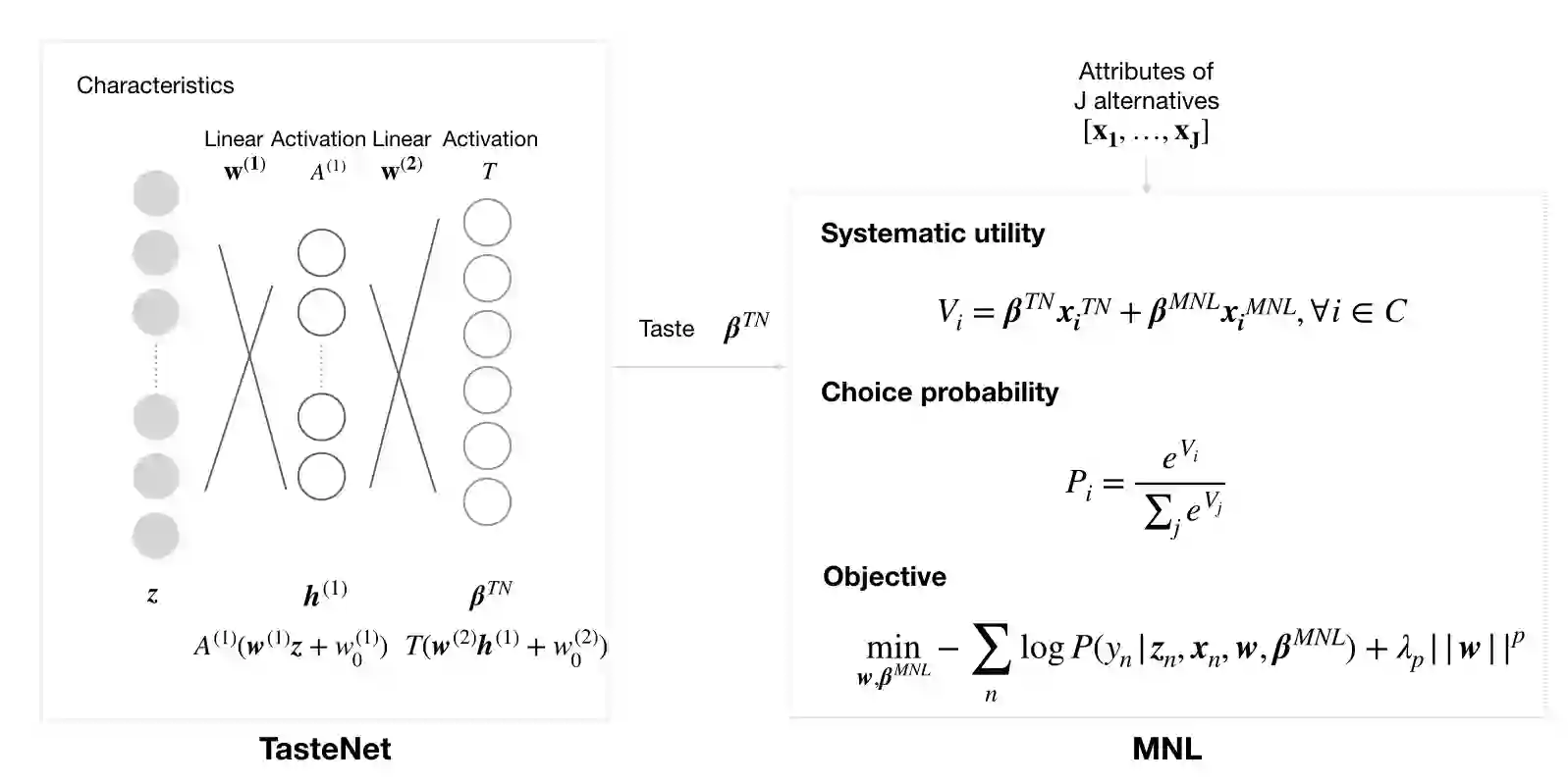

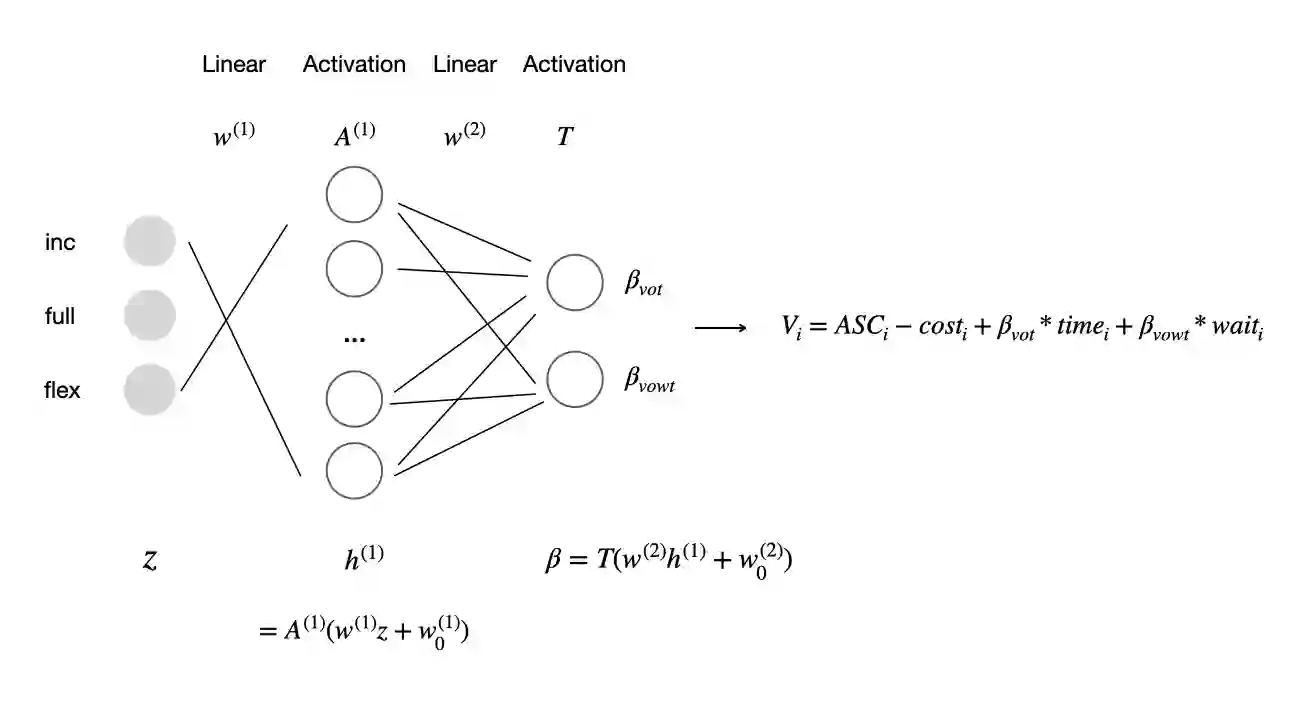

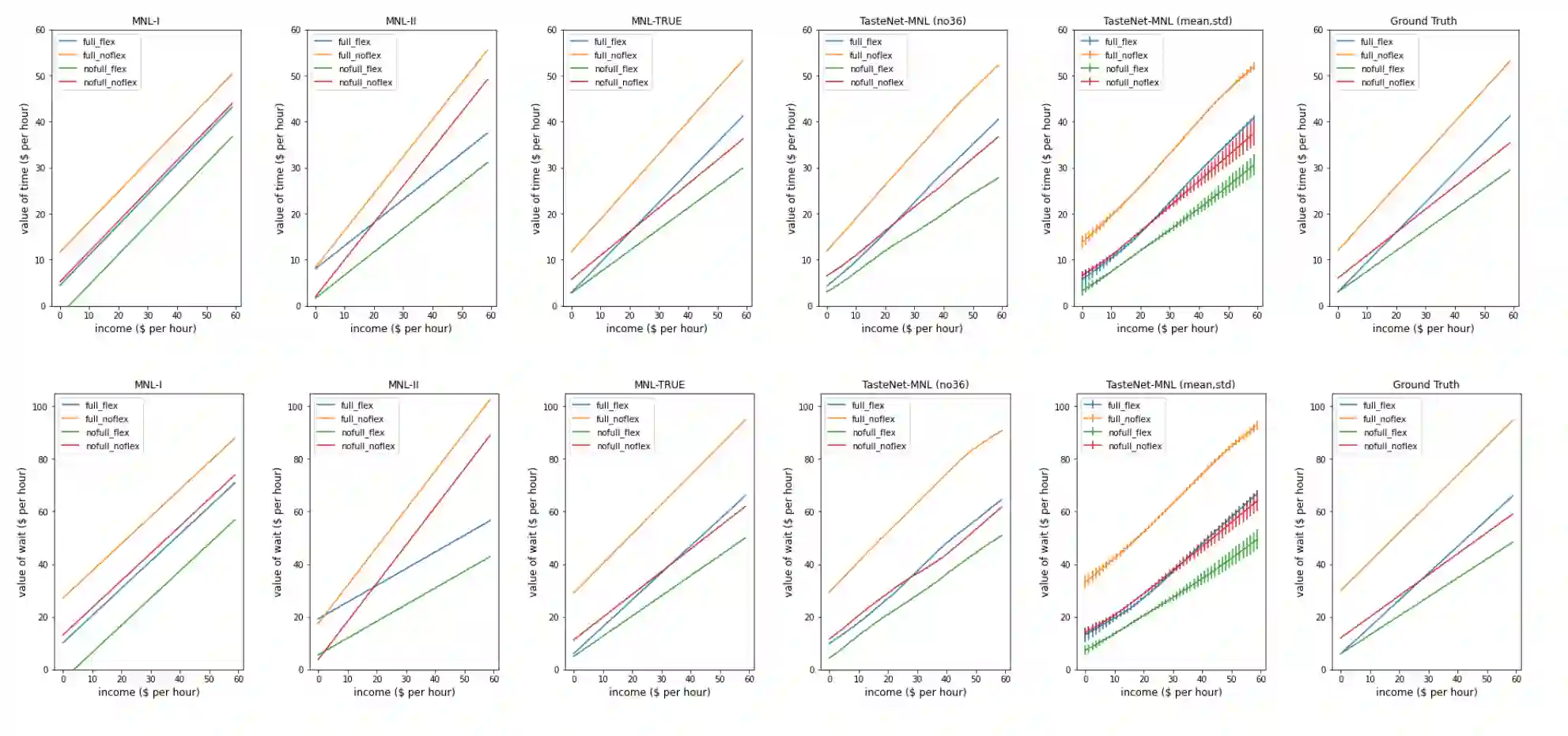

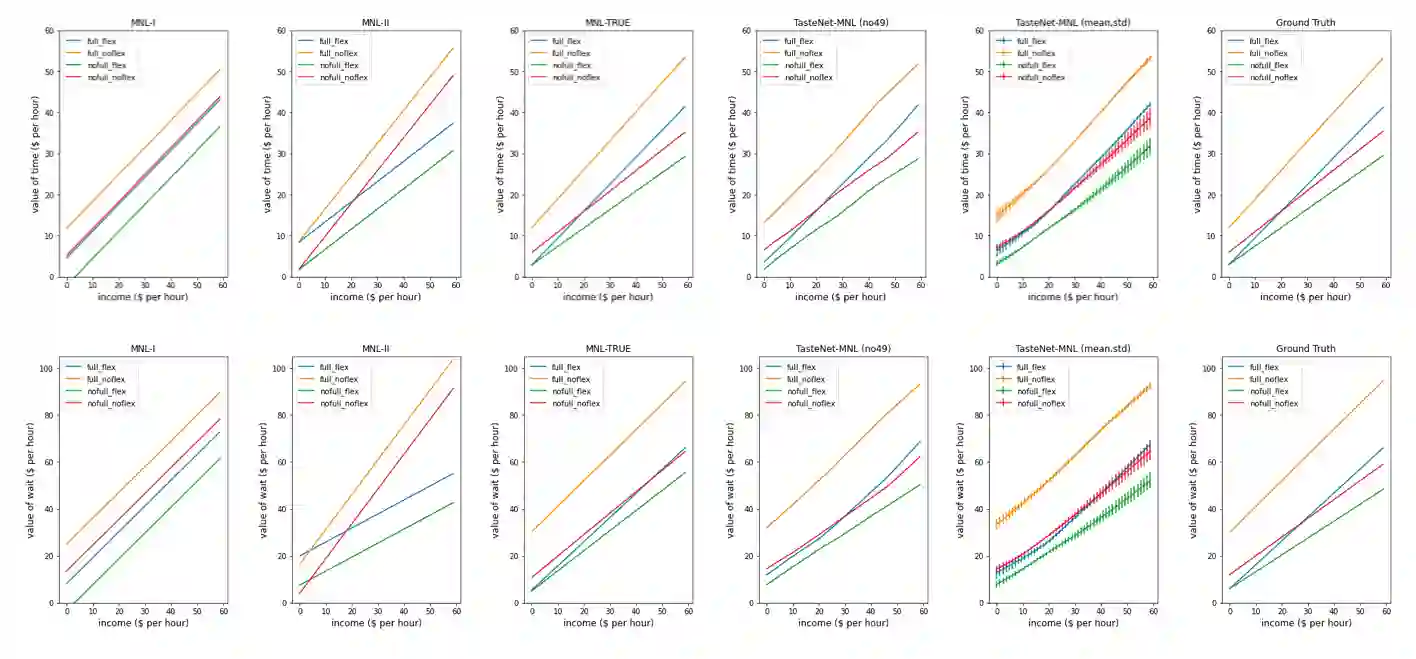

Discrete choice models (DCMs) require a priori knowledge of the utility functions, especially of how tastes vary across individuals. Utility misspecification may lead to biased estimates, inaccurate interpretations, and limited predictive performance. In this paper, we use a neural network to learn taste representations. Our formulation consists of two modules: a neural network (TasteNet) that learns taste parameters (e.g., the time coefficient) as flexible functions of individual characteristics, and a multinomial logit (MNL) model with utility functions defined using expert knowledge. The taste parameters learned by the neural network are fed into the choice model, linking the two modules. Our approach extends the L-MNL model (Sifringer et al., 2020) by allowing the neural network to learn the interactions between individual characteristics and alternative attributes. Moreover, we formalize and strengthen the interpretability condition, which requires realistic estimates of behavior indicators (e.g., value-of-time, elasticity) at the disaggregated level; this is crucial for a model to be suitable for scenario analysis and policy decisions. Through a unique network architecture and parameter transformation, we incorporate prior knowledge and guide the neural network to output realistic behavior indicators at the disaggregated level. We show that, on synthetic data, TasteNet-MNL matches the ground-truth model's predictive performance and recovers the nonlinear taste functions. Its estimated value-of-time and choice elasticities at the individual level are close to the ground truth. On the publicly available Swissmetro dataset, TasteNet-MNL outperforms the benchmark MNL and Mixed Logit models in predictive performance. It learns a broader spectrum of taste variation within the population and suggests a higher average value-of-time.
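The two-module structure described in the abstract can be illustrated with a minimal, standard-library Python sketch. It is not the authors' implementation: the layer sizes, the random weights, the `-softplus` sign constraint (one possible form of the parameter transformation), and all names (`taste_net`, `mnl_probabilities`, `value_of_time`) and input values are assumptions made for illustration.

```python
import math
import random


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def taste_net(z, W1, b1, W2, b2):
    """Map individual characteristics z to taste parameters.

    One hidden ReLU layer. Each output passes through -softplus so the
    taste coefficients (here: time and cost) are constrained to be
    negative. This transformation is an assumption of the sketch.
    """
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z)) + b)
              for row, b in zip(W1, b1)]
    raw = [sum(w * h for w, h in zip(row, hidden)) + b
           for row, b in zip(W2, b2)]
    return [-softplus(r) for r in raw]


def mnl_probabilities(betas, alternatives, ascs):
    """Multinomial logit with linear-in-attributes utilities.

    alternatives[j] holds the attributes [time, cost] of alternative j;
    ascs[j] is its alternative-specific constant.
    """
    utilities = [asc + sum(b * x for b, x in zip(betas, attrs))
                 for asc, attrs in zip(ascs, alternatives)]
    u_max = max(utilities)  # subtract the max for numerical stability
    exps = [math.exp(u - u_max) for u in utilities]
    total = sum(exps)
    return [e / total for e in exps]


def value_of_time(betas):
    # Ratio of the time coefficient to the cost coefficient.
    beta_time, beta_cost = betas
    return beta_time / beta_cost


# Hypothetical, untrained weights: 3 characteristics -> 4 hidden -> 2 tastes.
rng = random.Random(0)
W1 = [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(4)]
b1 = [0.0] * 4
W2 = [[rng.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(2)]
b2 = [0.0] * 2

z = [0.5, 1.0, 0.0]                                  # hypothetical individual
alternatives = [[1.2, 0.8], [0.9, 1.5], [2.0, 0.3]]  # [time, cost] per option
ascs = [0.0, 0.1, -0.2]                              # hypothetical constants

betas = taste_net(z, W1, b1, W2, b2)
probs = mnl_probabilities(betas, alternatives, ascs)
vot = value_of_time(betas)
print(betas, probs, vot)
```

Because both coefficients are forced to be negative, the value-of-time ratio is positive for every individual, which is the kind of disaggregate-level realism the interpretability condition asks for. In a trained model, the weights would be fitted by maximizing the choice log-likelihood rather than drawn at random.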
