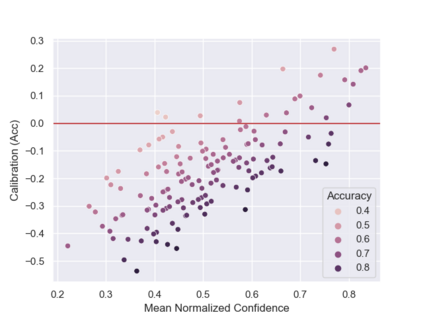

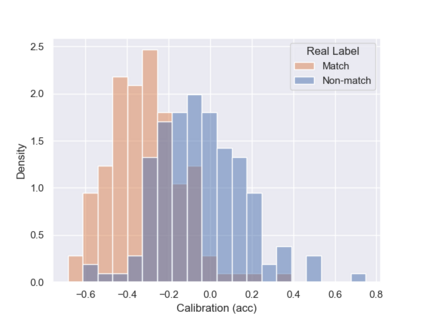

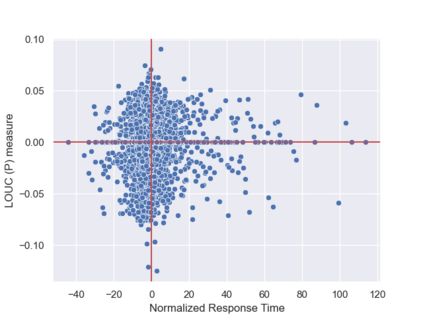

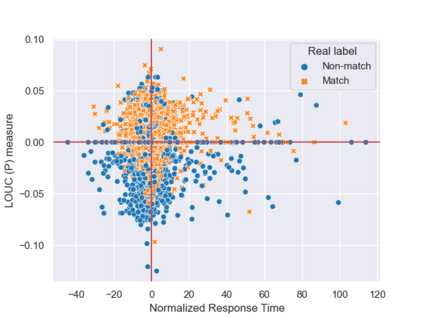

Schema matching is a core data integration task that focuses on identifying correspondences among attributes of multiple schemata. Numerous algorithmic approaches to schema matching have been proposed over the years, aiming to solve the task with as little human involvement as possible. Yet humans are still required in the loop, both to validate algorithms and to produce ground truth data for algorithms to be trained against. In recent years, a new research direction has investigated the capabilities and behavior of humans performing matching tasks. Previous works used this knowledge to predict, and even improve, the performance of human matchers. In this work, we continue this line of research by proposing a novel measure for evaluating the performance of human matchers, based on calibration, a common metacognition measure. The proposed measure enables detailed analysis of various factors in the behavior of human matchers and their relation to human performance. Such analysis can be further used to develop heuristics and methods to better assess and improve annotation quality.
