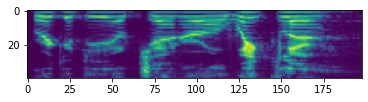

Audio-visual automatic speech recognition (AV-ASR) extends speech recognition by introducing the video modality as an additional source of information. In this work, the information contained in the motion of the speaker's mouth is used to augment the audio features. The video modality is traditionally processed with a 3D convolutional neural network (e.g., a 3D version of VGG). Recently, image transformer networks (arXiv:2010.11929) have demonstrated the ability to extract rich visual features for image classification tasks. Here, we propose to replace the 3D convolution with a video transformer to extract visual features. We train our baselines and the proposed model on a large-scale corpus of YouTube videos. We evaluate the performance of our approach on a labeled subset of YouTube videos as well as on the public LRS3-TED corpus. Our best video-only model obtains 34.9% WER on YTDEV18 and 19.3% WER on LRS3-TED, relative improvements of 10% and 9%, respectively, over our convolutional baseline. After fine-tuning, our model achieves state-of-the-art audio-visual recognition performance on LRS3-TED (1.6% WER). In addition, in a series of experiments on multi-person AV-ASR, we obtained an average relative WER reduction of 2% over our convolutional video frontend.
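
The abstract does not spell out the video transformer's architecture. As a rough illustration only, the pure-Python sketch below shows the general idea of a ViT-style video frontend (in the spirit of arXiv:2010.11929) standing in for a 3D convolution: the mouth-region clip is cut into spatio-temporal "tubelet" patches, each patch is linearly embedded and given a positional embedding, the tokens attend to each other, and the result is pooled into one feature vector per time step that could then be combined with the audio features. Everything here is an assumption for illustration, not the paper's model: the names `TinyVideoTransformerFrontend` and `tubelet_patches`, the tubelet sizes, `d_model`, grayscale input, a single attention head, random untrained weights, the omission of layer norm and MLP blocks, and mean pooling per step.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain (n x k) @ (k x m) matrix product."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row: Vector) -> Vector:
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Random (untrained) weights with a 1/sqrt(fan_in) scale."""
    scale = 1.0 / math.sqrt(rows)
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def tubelet_patches(video: List[Matrix], t_size: int, p_size: int) -> Matrix:
    """Cut a (T, H, W) grayscale mouth-crop clip into non-overlapping
    t_size x p_size x p_size tubelets, each flattened to one vector.
    Tokens are time-major: all spatial patches of step 0 come first."""
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    patches = []
    for t0 in range(0, frames - t_size + 1, t_size):
        for y0 in range(0, height - p_size + 1, p_size):
            for x0 in range(0, width - p_size + 1, p_size):
                patches.append([video[t][y][x]
                                for t in range(t0, t0 + t_size)
                                for y in range(y0, y0 + p_size)
                                for x in range(x0, x0 + p_size)])
    return patches


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over all tokens."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = [[scale * sum(a * b for a, b in zip(qr, kr)) for kr in k] for qr in q]
    return matmul([softmax(r) for r in scores], v)


class TinyVideoTransformerFrontend:
    """Hypothetical visual frontend: tubelet embedding + positional embedding
    + one residual self-attention layer, mean-pooled to one vector per step."""

    def __init__(self, frames: int, height: int, width: int,
                 t_size: int = 2, p_size: int = 4, d_model: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.t_size, self.p_size = t_size, p_size
        self.steps = frames // t_size
        self.patches_per_step = (height // p_size) * (width // p_size)
        self.embed = random_matrix(t_size * p_size * p_size, d_model, rng)
        self.pos = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                    for _ in range(self.steps * self.patches_per_step)]
        self.wq, self.wk, self.wv = [random_matrix(d_model, d_model, rng) for _ in range(3)]

    def __call__(self, video: List[Matrix]) -> Matrix:
        patches = tubelet_patches(video, self.t_size, self.p_size)
        if len(patches) != len(self.pos):
            raise ValueError("clip shape does not match the shape given at construction")
        # Linear patch embedding plus learned-style positional embedding.
        tokens = [[e + p for e, p in zip(tok, pos)]
                  for tok, pos in zip(matmul(patches, self.embed), self.pos)]
        # One residual self-attention layer (no layer norm or MLP in this sketch).
        attended = self_attention(tokens, self.wq, self.wk, self.wv)
        tokens = [[a + b for a, b in zip(t, u)] for t, u in zip(tokens, attended)]
        # Average the spatial tokens of each time step -> (steps, d_model) features.
        n = self.patches_per_step
        return [[sum(col) / n for col in zip(*tokens[s * n:(s + 1) * n])]
                for s in range(self.steps)]


if __name__ == "__main__":
    rng = random.Random(1)
    clip = [[[rng.random() for _ in range(8)] for _ in range(8)] for _ in range(4)]
    features = TinyVideoTransformerFrontend(frames=4, height=8, width=8)(clip)
    print(len(features), len(features[0]))  # 2 8
```

With a 4-frame, 8x8 toy clip this yields two feature vectors of size 8. Tubelets (rather than per-frame 2D patches) are one way to let the tokenizer itself see lip motion across neighboring frames, the role the 3D convolution plays in a convolutional frontend; whether the paper's model tokenizes video this way is not stated in the abstract.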

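Every quantitative claim in the abstract is a word error rate (WER) or a relative change in WER. As a sketch of the conventional definitions (assumed here; the abstract does not describe its scoring details, such as text normalization), WER is the word-level Levenshtein distance between hypothesis and reference divided by the number of reference words, summed over a test set, and a relative improvement is (baseline - new) / baseline. The function names below are illustrative.

```python
from typing import Iterable, Tuple


def word_edits(reference: str, hypothesis: str) -> int:
    """Minimum substitutions + deletions + insertions turning the reference
    word sequence into the hypothesis (word-level Levenshtein distance)."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(prev[j] + 1,             # deletion of reference word r
                         cur[j - 1] + 1,          # insertion of hypothesis word h
                         prev[j - 1] + (r != h))  # substitution (or free match)
        prev = cur
    return prev[-1]


def corpus_wer(pairs: Iterable[Tuple[str, str]]) -> float:
    """Total word edits divided by total reference words over (reference, hypothesis) pairs."""
    edits = words = 0
    for reference, hypothesis in pairs:
        edits += word_edits(reference, hypothesis)
        words += len(reference.split())
    if words == 0:
        raise ValueError("references contain no words")
    return edits / words


def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction of the baseline WER removed, e.g. 0.10 for a 10% relative improvement."""
    return (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion ("the"): 2 edits over 6 words.
    print(corpus_wer([("the cat sat on the mat", "the cat sit on mat")]))
    # Hypothetical numbers, not results from the paper.
    print(relative_reduction(20.0, 18.0))
```

A relative improvement is not an absolute drop: with the hypothetical numbers above, going from 20.0% to 18.0% WER is a 2-point absolute but a 10% relative reduction.
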