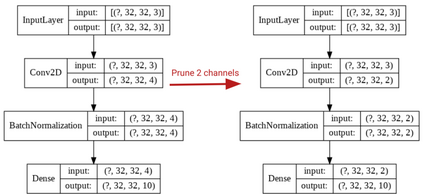

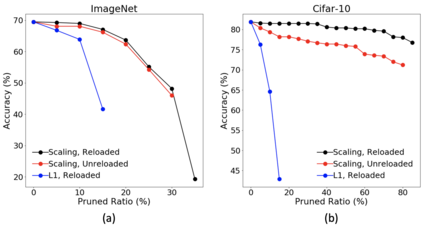

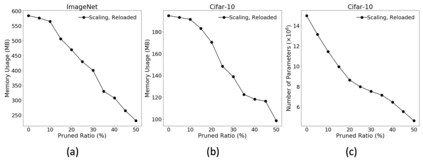

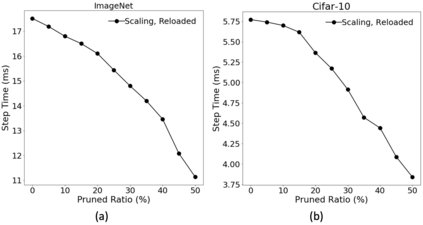

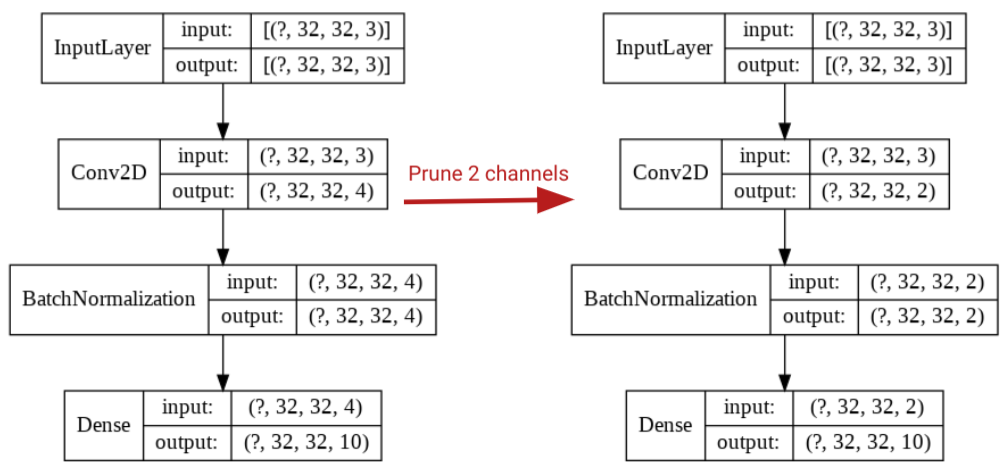

The deployment of convolutional neural networks is often hindered by their high computational and storage requirements. Structured model pruning is a promising approach to reducing these requirements. Using the VGG-16 model as an example, we measure the accuracy-efficiency trade-off of various structured model pruning methods across two datasets (CIFAR-10 and ImageNet) on Tensor Processing Units (TPUs). To measure the actual performance of pruned models, we develop a structured model pruning library for TensorFlow 2 that modifies models in place instead of adding mask layers. We show that structured model pruning can significantly improve model memory usage and speed on TPUs without losing accuracy, especially for small datasets such as CIFAR-10.
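The abstract does not describe the library's API, so the following is only a minimal sketch of what "modifying a model in place" can mean for structured pruning, written in plain Python under stated assumptions. It assumes L1-norm filter ranking (one common criterion, not necessarily one of the methods evaluated here) and a simplified convolution layer; `ConvLayer`, `prune_filters`, `make_layer` and `keep_ratio` are hypothetical names, not part of TensorFlow or the authors' library. The key point it illustrates is that removing a filter from one layer also removes the matching input channel of the next layer, so both weight tensors actually shrink. A mask layer, by contrast, only zeroes weights and keeps the full-size tensors, which is why it does not reflect real memory and speed gains.

```python
from dataclasses import dataclass
from typing import List, Tuple


# Hypothetical, simplified conv layer (not a TensorFlow class):
# filters[o][i] is the flattened k*k kernel connecting input channel i to output channel o.
@dataclass
class ConvLayer:
    filters: List[List[List[float]]]
    bias: List[float]

    @property
    def out_channels(self) -> int:
        return len(self.filters)

    @property
    def in_channels(self) -> int:
        return len(self.filters[0]) if self.filters else 0

    def num_params(self) -> int:
        kernel_size = len(self.filters[0][0]) if self.filters and self.filters[0] else 0
        return self.out_channels * self.in_channels * kernel_size + len(self.bias)


def l1_norm(filt: List[List[float]]) -> float:
    # Sum of absolute weights of one filter over all its input channels (bias not included).
    return sum(abs(w) for kernel in filt for w in kernel)


def prune_filters(layer: ConvLayer, next_layer: ConvLayer,
                  keep_ratio: float) -> Tuple[ConvLayer, ConvLayer, List[int]]:
    """Keep the filters of `layer` with the largest L1 norm and physically drop the rest.

    The matching input channels of `next_layer` are dropped as well, so no mask is
    stored. Returns new layers and the kept indices; the inputs are not mutated.
    """
    n_keep = max(1, int(layer.out_channels * keep_ratio))
    ranked = sorted(range(layer.out_channels),
                    key=lambda o: l1_norm(layer.filters[o]), reverse=True)
    keep = sorted(ranked[:n_keep])  # preserve the original channel order
    pruned = ConvLayer([layer.filters[o] for o in keep], [layer.bias[o] for o in keep])
    pruned_next = ConvLayer([[filt[o] for o in keep] for filt in next_layer.filters],
                            list(next_layer.bias))
    return pruned, pruned_next, keep


def make_layer(out_ch: int, in_ch: int, k: int, scales: List[float]) -> ConvLayer:
    # Toy layer in which every weight of filter o equals scales[o]; biases are zero.
    return ConvLayer([[[scales[o]] * (k * k) for _ in range(in_ch)] for o in range(out_ch)],
                     [0.0] * out_ch)


# Usage: conv1 (3 -> 4 channels) feeds conv2 (4 -> 2 channels), both with 3x3 kernels.
conv1 = make_layer(4, 3, 3, [0.1, 2.0, 0.05, 1.0])
conv2 = make_layer(2, 4, 3, [1.0, 1.0])
p1, p2, keep = prune_filters(conv1, conv2, keep_ratio=0.5)
print(keep)                                    # [1, 3]
print(conv1.num_params(), conv2.num_params())  # 112 74
print(p1.num_params(), p2.num_params())        # 56 38
```

Halving the filters of `conv1` roughly halves the parameters of both layers (186 to 94 in this toy case), because the next layer loses the corresponding input channels too. A real TensorFlow 2 implementation would additionally need to rebuild the affected Keras layers and handle batch-normalization and dense layers that consume the pruned channels; this sketch omits that.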

翻译:计算和储存要求高,往往会阻碍进化神经网络的部署。结构化模型运行是缓解这些要求的一个很有希望的方法。我们以VGG-16模型为例,衡量各种结构化模型运行方法和数据集(CIFAR-10和图像网)在Tensor处理器(TPUs)上的准确性效率权衡。为了衡量模型的实际性能,我们为TensorFlow2开发了一个结构化模型运行库,以修改现有模型(而不是添加掩码层)。我们表明结构化模型运行可以大大改进模型存储的使用和TPU的速度,而不会失去准确性,特别是小型数据集(例如CIFAR-10)。