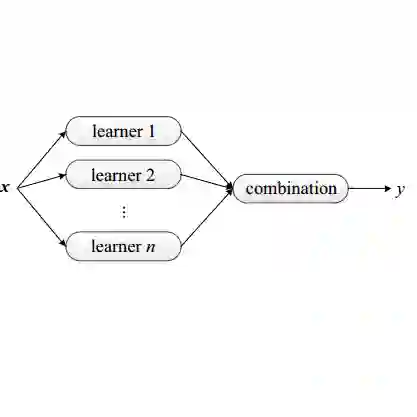

An approach to evolutionary ensemble learning for classification is proposed in which boosting is used to construct a stack of programs. Each application of boosting identifies a single champion program and a residual dataset, i.e. the training records that have not yet been correctly classified. The next program is trained only against this residual, and the process iterates until either a maximum ensemble size is reached or no residual remains. Training against a residual dataset reduces the cost of training, since each successive program is evaluated on fewer records. Deploying the ensemble as a stack also means that a single classifier may suffice to make a prediction, thus improving interpretability. Benchmarking studies illustrate that prediction accuracy is competitive with current state-of-the-art evolutionary ensemble learning algorithms, while the solutions are orders of magnitude simpler. Further benchmarking on a high-cardinality dataset indicates that the proposed method is also more accurate and more efficient than XGBoost.
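The residual-driven stacking loop can be illustrated with a minimal sketch. It is not the authors' implementation, and several details are assumptions. A hypothetical decision stump with an abstain option stands in for each evolved champion program. The deployment rule, where the stack is walked top-down and the first stage that does not abstain answers, is also assumed, because the abstract does not say how a stage decides to respond. The names `Stage`, `fit_stage`, `build_stack` and `predict`, and the `default` fallback label, are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Record = Tuple[Sequence[float], int]  # (feature vector, class label)


@dataclass
class Stage:
    """One layer of the stack (hypothetical stand-in for an evolved champion).

    A decision stump that commits to a label only on a side of the threshold
    whose training records were all one class; otherwise it abstains (None).
    """
    feature: int
    threshold: float
    left: Optional[int]   # label when x[feature] <= threshold, None = abstain
    right: Optional[int]  # label when x[feature] > threshold, None = abstain

    def predict(self, x: Sequence[float]) -> Optional[int]:
        return self.left if x[self.feature] <= self.threshold else self.right


def _pure_label(labels: List[int]) -> Optional[int]:
    # A side commits to a label only if every training record on it agrees.
    return labels[0] if labels and len(set(labels)) == 1 else None


def fit_stage(data: List[Record]) -> Stage:
    """Exhaustively pick the stump that correctly classifies the most records."""
    best, best_hits = None, -1
    for f in range(len(data[0][0])):
        for t in sorted({x[f] for x, _ in data}):
            left = [y for x, y in data if x[f] <= t]
            right = [y for x, y in data if x[f] > t]
            stage = Stage(f, t, _pure_label(left), _pure_label(right))
            hits = sum(stage.predict(x) == y for x, y in data)
            if hits > best_hits:
                best, best_hits = stage, hits
    return best


def build_stack(data: List[Record], max_size: int) -> List[Stage]:
    """Boosting-style stack: each new stage is trained only on the residual."""
    stack: List[Stage] = []
    residual = list(data)
    while residual and len(stack) < max_size:
        stage = fit_stage(residual)
        # Residual = records this stage did not classify correctly
        # (abstentions count as not correctly classified).
        remaining = [(x, y) for x, y in residual if stage.predict(x) != y]
        if len(remaining) == len(residual):
            break  # no progress: stop rather than stack a useless stage
        stack.append(stage)
        residual = remaining
    return stack


def predict(stack: List[Stage], x: Sequence[float], default: int) -> Tuple[int, int]:
    """Return (label, number of stages consulted), walking the stack top-down."""
    for depth, stage in enumerate(stack, start=1):
        label = stage.predict(x)
        if label is not None:
            return label, depth
    return default, len(stack)  # every stage abstained (assumed fallback)
```

For instance, with six one-dimensional records at x = 1 to 6 labelled 0, 0, 1, 1, 0, 0, the first stage commits only for x ≤ 2. The second stage is trained on just the four remaining records and separates them at x ≤ 4. A query at x = 1.5 is therefore answered by the first stage alone, which reflects the interpretability argument above.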
