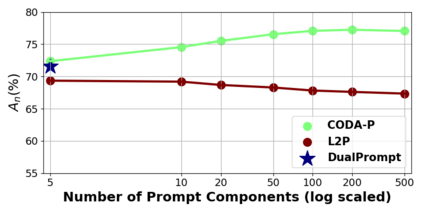

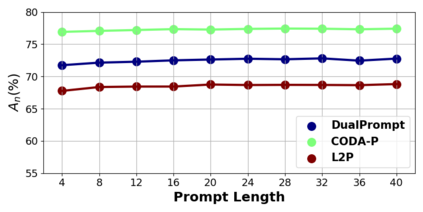

Computer vision models suffer from a phenomenon known as catastrophic forgetting when learning novel concepts from continuously shifting training data. Typical solutions for this continual learning problem require extensive rehearsal of previously seen data, which increases memory costs and may violate data privacy. Recently, the emergence of large-scale pre-trained vision transformer models has enabled prompting approaches as an alternative to data rehearsal. These approaches rely on a key-query mechanism to generate prompts and have been found to be highly resistant to catastrophic forgetting in the well-established rehearsal-free continual learning setting. However, the key mechanism of these methods is not trained end-to-end with the task sequence. Our experiments show that this reduces their plasticity, sacrificing new-task accuracy, and leaves them unable to benefit from expanded parameter capacity. We instead propose to learn a set of prompt components which are assembled with input-conditioned weights to produce input-conditioned prompts, resulting in a novel attention-based end-to-end key-query scheme. Our experiments show that we outperform the current state-of-the-art method DualPrompt on established benchmarks by as much as 4.5% in average final accuracy. We also outperform the state of the art by as much as 4.4% accuracy on a continual learning benchmark which contains both class-incremental and domain-incremental task shifts, corresponding to many practical settings. Our code is available at https://github.com/GT-RIPL/CODA-Prompt
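The idea of assembling prompt components with input-conditioned weights can be illustrated with a minimal sketch. The abstract does not specify how the weights are computed, so this example assumes cosine similarity between an attention-modulated query and a learned key per component; the names `assemble_prompt`, `keys`, `attention`, and `components` are hypothetical and are not taken from the released code.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]  # one prompt component: prompt_len x token_dim


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def assemble_prompt(
    query: Vector,
    keys: List[Vector],
    attention: List[Vector],
    components: List[Matrix],
) -> Tuple[Matrix, List[float]]:
    """Build an input-conditioned prompt as a weighted sum of components.

    Assumption (not stated in the abstract): the weight of component m is
    cos(query * attention[m], keys[m]), with * taken element-wise.
    """
    prompt_len = len(components[0])
    token_dim = len(components[0][0])
    prompt = [[0.0] * token_dim for _ in range(prompt_len)]
    weights = []
    for key, attn, comp in zip(keys, attention, components):
        # Let the per-component attention vector emphasise query features.
        attended = [q * a for q, a in zip(query, attn)]
        alpha = cosine(attended, key)
        weights.append(alpha)
        # Accumulate this component, scaled by its input-conditioned weight.
        for i in range(prompt_len):
            for j in range(token_dim):
                prompt[i][j] += alpha * comp[i][j]
    return prompt, weights


# Toy example: the query matches the first key and is orthogonal to the second.
prompt, weights = assemble_prompt(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    attention=[[1.0, 1.0], [1.0, 1.0]],
    components=[[[1.0, 2.0]], [[10.0, 20.0]]],
)
print(weights, prompt)
```

Under these assumptions the weights are a smooth function of the keys, attention vectors, and components, so in a framework with automatic differentiation all three could be optimised jointly with the task loss, which is one way to read the "end-to-end key-query scheme" described above.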

Title: CODA-Prompt: Continual Decomposed Attention-Based Prompting for Rehearsal-Free Continual Learning
