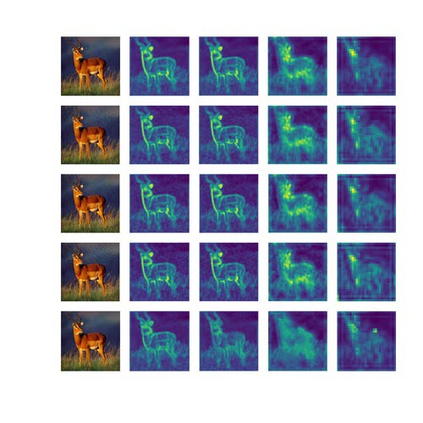

Recent studies show that deep neural networks (DNNs) are extremely vulnerable to carefully crafted adversarial examples. Adversarial learning with such examples has proven to be one of the most effective defenses against these attacks. Most existing methods for generating adversarial examples rely on first-order gradients, which limits how far they can improve model robustness, especially against second-order adversarial attacks. Compared with first-order gradients, second-order gradients provide a more accurate approximation of the loss landscape around natural examples. Inspired by this, our work crafts second-order adversarial examples and uses them to train DNNs. However, second-order optimization requires a time-consuming computation of the inverse Hessian. We propose an approximation method that transforms the problem into an optimization in the Krylov subspace, which remarkably reduces the computational complexity and speeds up training. Extensive experiments on the MNIST and CIFAR-10 datasets show that adversarial learning with our second-order adversarial examples outperforms first-order methods and improves model robustness against a wide range of attacks.
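To make the Krylov-subspace idea concrete, the sketch below shows one standard way to avoid forming an inverse Hessian: solve the linear system H d = g iteratively with conjugate gradient, which needs only Hessian-vector products. This is an illustration under stated assumptions, not the paper's algorithm: the abstract does not say which Krylov method is used, conjugate gradient is swapped in here as a familiar example, and it assumes a symmetric positive-definite H (a real DNN Hessian may need damping). The names `conjugate_gradient`, `hvp`, and the toy matrix are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def conjugate_gradient(hvp, g, iters=50, tol=1e-10):
    """Approximately solve H d = g using only products hvp(v) = H v.

    After k iterations, d lies in the Krylov subspace
    span{g, H g, ..., H^(k-1) g}. Assumes H is symmetric positive definite.
    """
    d = [0.0] * len(g)
    r = list(g)        # residual g - H d, with d = 0 initially
    p = list(r)        # current search direction
    rs = dot(r, r)     # squared residual norm
    for _ in range(iters):
        if rs < tol:   # stop once the squared residual is negligible
            break
        Hp = hvp(p)
        alpha = rs / dot(p, Hp)
        d = [di + alpha * pi for di, pi in zip(d, p)]
        r = [ri - alpha * hi for ri, hi in zip(r, Hp)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return d


# Toy stand-in for a Hessian (hypothetical values, chosen to be SPD).
H = [[4.0, 1.0], [1.0, 3.0]]


def hvp(v):
    """Hessian-vector product; in practice this would come from autodiff."""
    return [dot(row, v) for row in H]


g = [1.0, 2.0]                  # stand-in for a loss gradient
d = conjugate_gradient(hvp, g)  # approximates H^{-1} g without inverting H
```

For this 2x2 system the exact solution is d = (1/11, 7/11), and conjugate gradient reaches it in at most two iterations up to floating-point error. The design point is that the cost per iteration is one Hessian-vector product, so a small number of iterations can stand in for an explicit inverse.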
