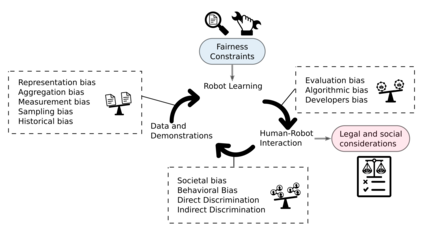

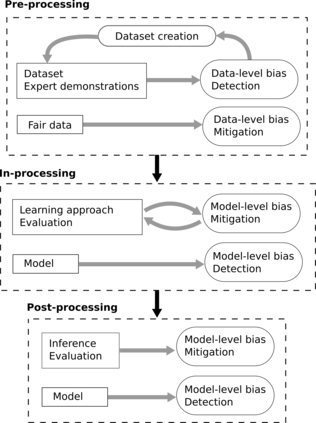

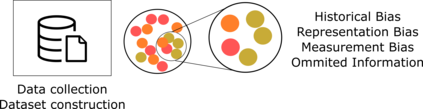

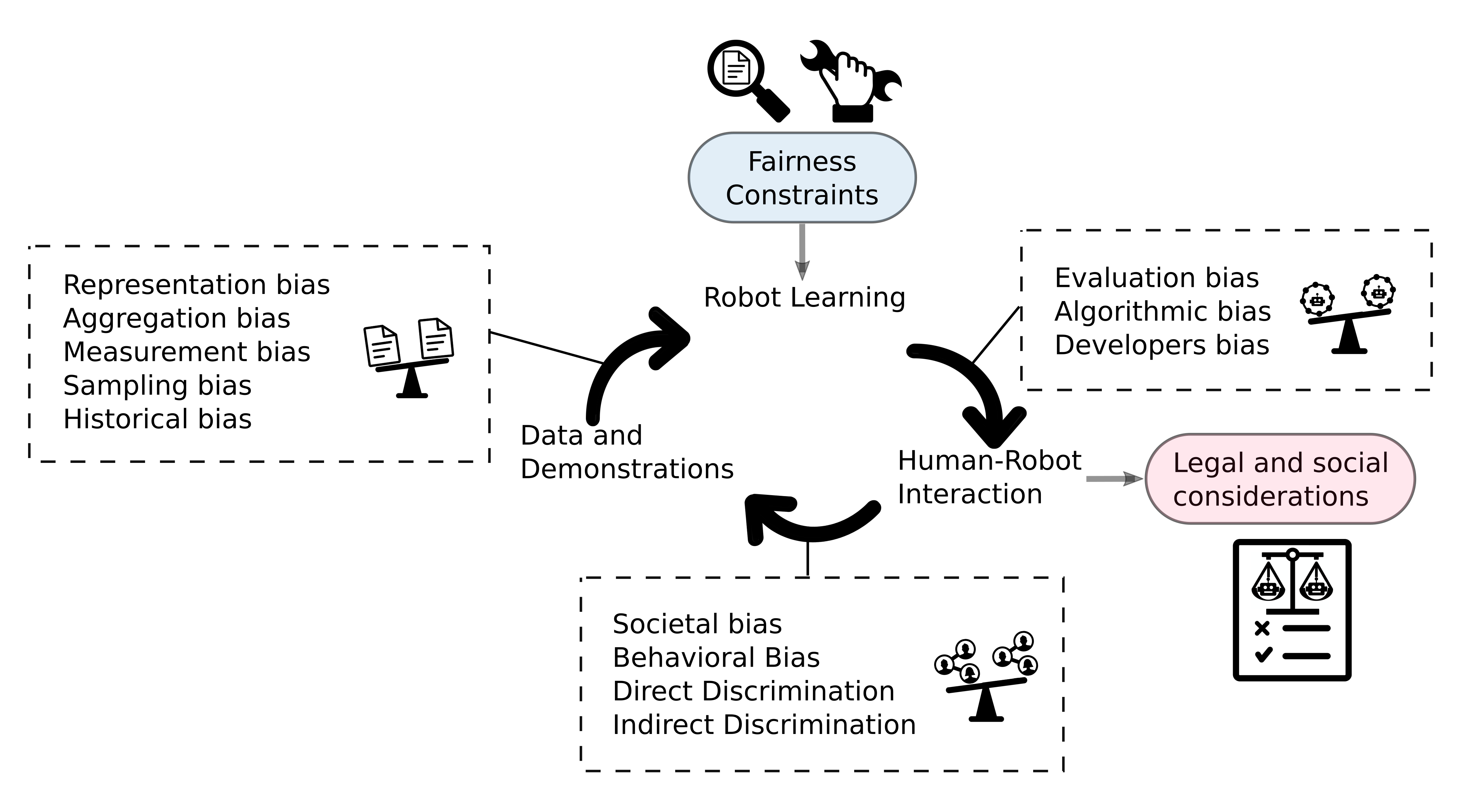

Machine learning has significantly enhanced the abilities of robots, enabling them to perform a wide range of tasks in human environments and to adapt to an uncertain real world. Recent work across several domains of machine learning has highlighted the importance of accounting for fairness, so that these algorithms do not reproduce human biases and thereby lead to discriminatory outcomes. As robot learning systems take on more and more tasks in our everyday lives, it is crucial to understand the influence of such biases in order to prevent unintended behavior toward certain groups of people. In this work, we present the first survey on fairness in robot learning from an interdisciplinary perspective spanning technical, ethical, and legal challenges. We propose a taxonomy of sources of bias and the types of discrimination that result from them. Using examples from different robot learning domains, we examine scenarios of unfair outcomes and strategies to mitigate them. We present early advances in the field by covering different fairness definitions, ethical and legal considerations, and methods for fair robot learning. With this work, we aim to pave the way for groundbreaking developments in fair robot learning.
