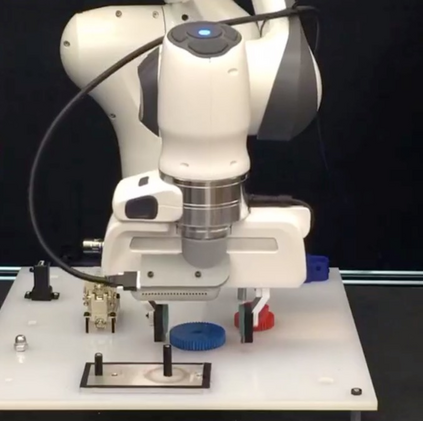

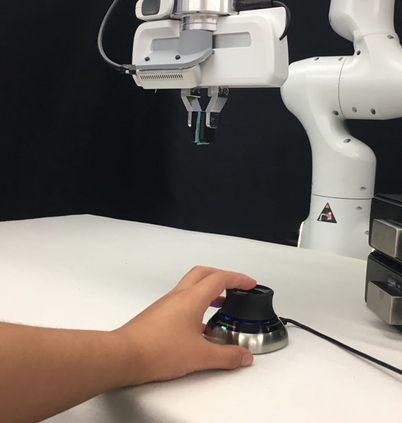

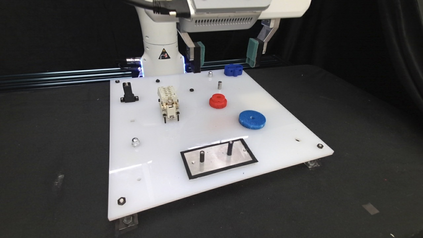

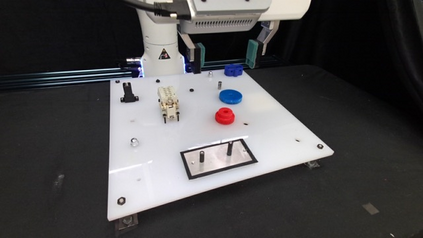

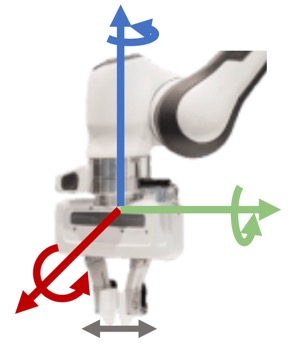

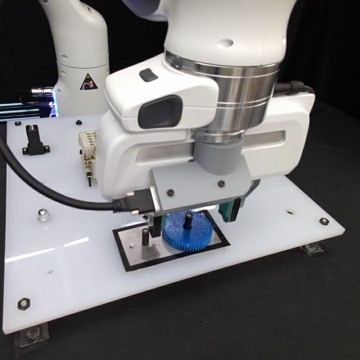

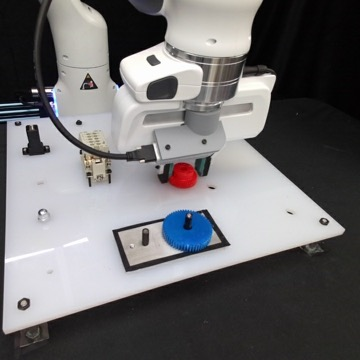

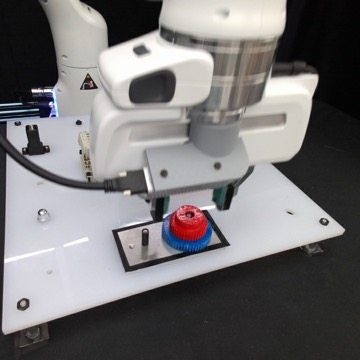

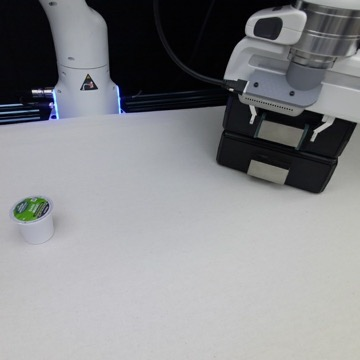

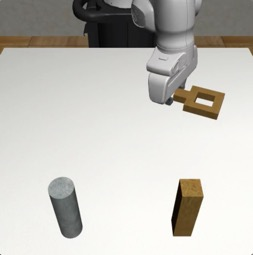

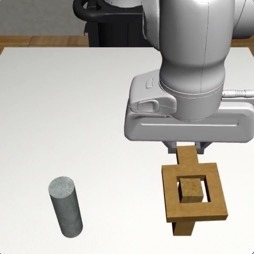

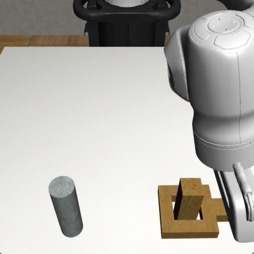

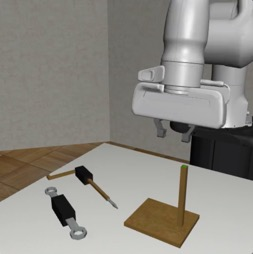

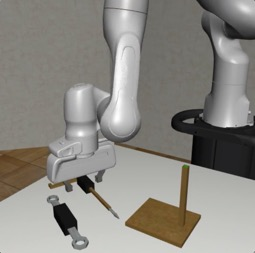

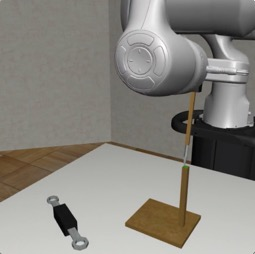

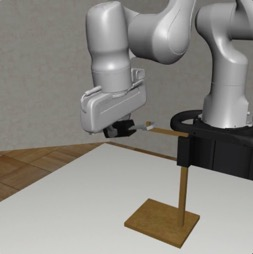

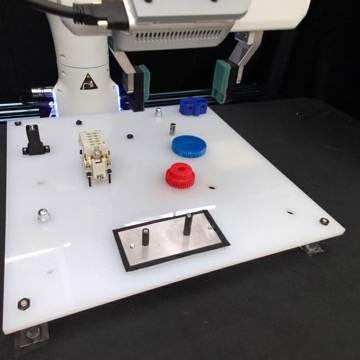

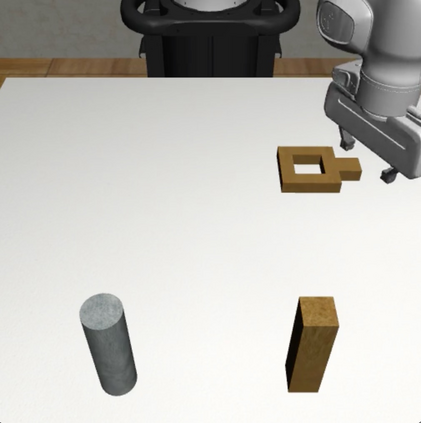

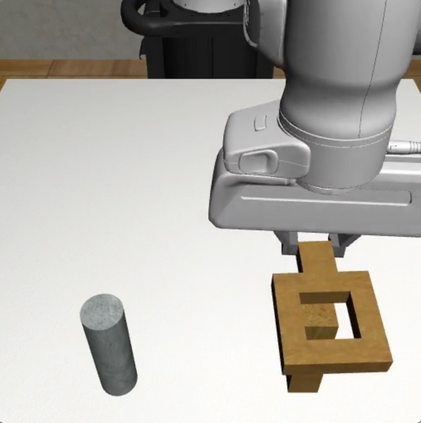

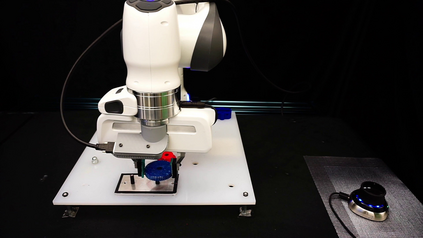

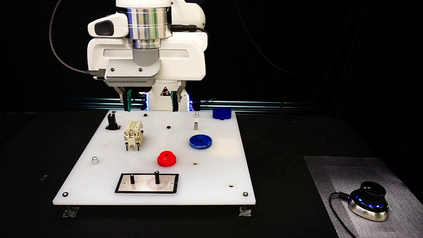

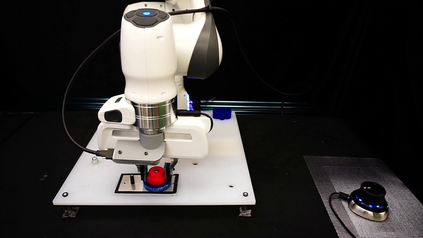

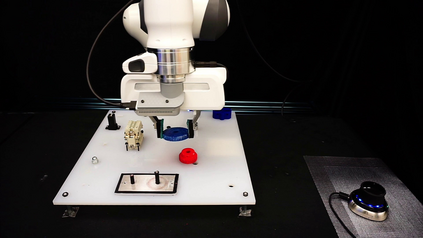

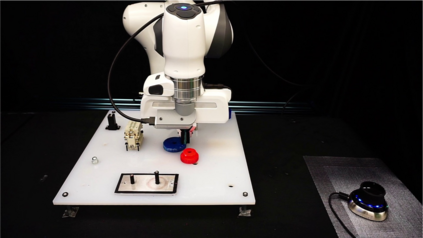

With the rapid growth of computing power and recent advances in deep learning, we have witnessed impressive demonstrations of novel robot capabilities in research settings. Nonetheless, these learning systems exhibit brittle generalization and require excessive training data for practical tasks. To harness the capabilities of state-of-the-art robot learning models while embracing their imperfections, we present Sirius, a principled framework for humans and robots to collaborate through a division of work. In this framework, partially autonomous robots handle the major portion of decision-making where they work reliably; meanwhile, human operators monitor the process and intervene in challenging situations. Such a human-robot team ensures safe deployments in complex tasks. Further, we introduce a new learning algorithm to improve the policy's performance using the data collected from task executions. The core idea is to re-weight training samples with approximated human trust and to optimize the policies with weighted behavioral cloning. We evaluate Sirius in simulation and on real hardware, showing that it consistently outperforms baselines over a collection of contact-rich manipulation tasks, achieving an 8% boost in simulation and a 27% boost on real hardware over state-of-the-art methods, with 3 times faster convergence and 15% of the memory size. Videos and code are available at https://ut-austin-rpl.github.io/sirius/
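To make the core idea concrete, the following is a minimal sketch of weighted behavioral cloning on a toy one-dimensional problem. It is not the Sirius implementation: the `Sample` type, the `"robot"`/`"human"` source tags, the values in `TRUST`, and the linear policy `action = gain * state` are all assumptions made for illustration. The sketch only shows how per-sample trust weights change what a squared-error imitation objective fits.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    state: float   # toy 1-D observation (stand-in for real sensor input)
    action: float  # action recorded during task execution
    source: str    # hypothetical tag: "robot" or "human"


# Hypothetical trust weights, chosen for illustration only.
# They are NOT values from the paper.
TRUST = {"robot": 1.0, "human": 3.0}


def trust_weights(samples: List[Sample]) -> List[float]:
    """Map each sample to a weight based on who produced the action."""
    return [TRUST[s.source] for s in samples]


def weighted_bc_loss(gain: float, samples: List[Sample],
                     weights: List[float]) -> float:
    """Weighted mean squared error between policy and recorded actions."""
    total = sum(weights)
    return sum(
        w * (gain * s.state - s.action) ** 2
        for s, w in zip(samples, weights)
    ) / total


def fit_gain(samples: List[Sample], weights: List[float]) -> float:
    """Closed-form minimizer of weighted_bc_loss for action = gain * state."""
    num = sum(w * s.state * s.action for s, w in zip(samples, weights))
    den = sum(w * s.state ** 2 for s, w in zip(samples, weights))
    return num / den


if __name__ == "__main__":
    data = [
        Sample(state=1.0, action=1.0, source="robot"),
        Sample(state=1.0, action=2.0, source="human"),
    ]
    uniform = fit_gain(data, [1.0] * len(data))
    trusted = fit_gain(data, trust_weights(data))
    print("uniform weights:", uniform)
    print("trust weights:  ", trusted)
```

With uniform weights the fitted gain sits midway between the two recorded behaviors; with the assumed trust weights it moves toward the human-provided action. A real system would replace the closed-form fit with gradient-based training of a neural policy and would derive the weights from its own approximation of human trust.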
