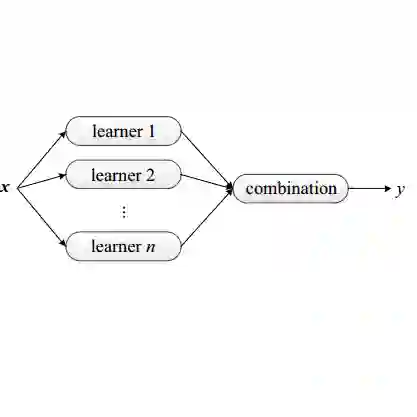

Class imbalance (CI) in classification problems arises when the number of observations belonging to one class is much lower than in the other classes. Ensemble learning, which combines multiple models to obtain a more robust model, has been used prominently alongside data augmentation methods to address class imbalance problems. In the last decade, a number of strategies have been introduced to enhance ensemble learning and data augmentation methods, along with new methods such as generative adversarial networks (GANs). Combinations of these have been applied in many studies, but ranking the different combinations reliably requires a computational review. In this paper, we present a computational review that evaluates data augmentation and ensemble learning methods on prominent benchmark CI problems. We propose a general framework that evaluates 10 data augmentation and 10 ensemble learning methods for CI problems. Our objective is to identify the most effective combination for improving classification performance on imbalanced datasets. The results indicate that combining data augmentation methods with ensemble learning can significantly improve classification performance on imbalanced datasets. These findings have important implications for the development of more effective approaches to handling imbalanced datasets in machine learning applications.
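
To make the notion of class imbalance concrete, the following is a minimal sketch in plain Python of measuring an imbalance ratio and rebalancing a toy dataset by random oversampling. Random oversampling is used here only as the simplest illustrative form of data augmentation; the abstract does not state which 10 augmentation methods are evaluated, and the function names `imbalance_ratio` and `random_oversample` and the toy data are assumptions made for this illustration.

```python
import random
from collections import Counter


def imbalance_ratio(labels):
    """Return the majority-class count divided by the minority-class count."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def random_oversample(features, labels, seed=0):
    """Duplicate randomly chosen samples of each smaller class until
    every class has as many samples as the largest class.

    Illustrative baseline only, not the method of the paper.
    """
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(features), list(labels)
    for cls, n in counts.items():
        # Pool of original samples belonging to this class.
        pool = [x for x, lab in zip(features, labels) if lab == cls]
        for _ in range(target - n):
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y


# Hypothetical toy dataset: 10 majority samples (class 0), 2 minority (class 1).
X = [[float(i)] for i in range(12)]
y = [0] * 10 + [1] * 2

X_bal, y_bal = random_oversample(X, y)
```

In a setup like the one the abstract describes, a rebalanced training set of this kind would then be passed to an ensemble classifier, and each augmentation and ensemble pairing would be scored on held-out data that is left unaugmented.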
