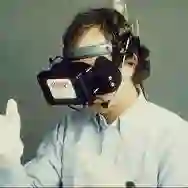

Hand action recognition is a special case of action recognition with applications in human-robot interaction, virtual reality, and life-logging systems. Building action classifiers that work across such heterogeneous action domains is very challenging: actions within a given application differ only subtly, while variations across domains (e.g., virtual reality vs. life-logging) are large. This work introduces a novel skeleton-based hand motion representation model that tackles this problem. The framework we propose is agnostic to the application domain and to the camera recording viewpoint. When working on a single domain (intra-domain action classification), our approach performs better than or comparably to current state-of-the-art methods on well-known hand action recognition benchmarks. More importantly, when performing hand action recognition for action domains and camera perspectives on which it has not been trained (cross-domain action classification), our framework achieves performance comparable to intra-domain state-of-the-art methods. These experiments show the robustness and generalization capabilities of our framework.
