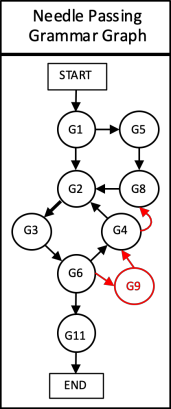

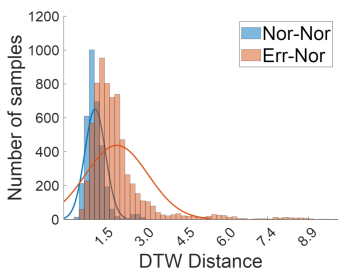

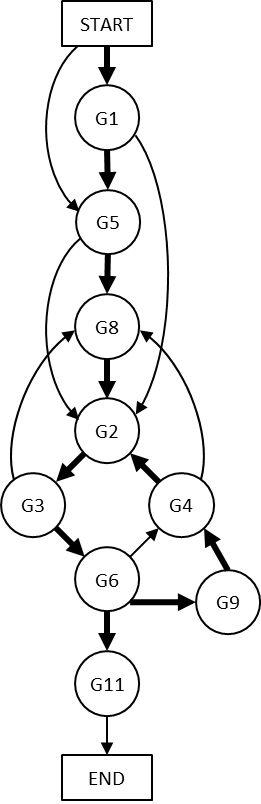

Background: We aim to develop a method for automated detection of potentially erroneous motions that lead to sub-optimal surgeon performance and safety-critical events in robot-assisted surgery.

Methods: We develop a rubric for identifying task- and gesture-specific Executional and Procedural errors and use it to evaluate dry-lab demonstrations of the Suturing and Needle Passing tasks from the JIGSAWS dataset. We characterize the erroneous parts of demonstrations by labeling video data, and we apply distribution similarity analysis and trajectory averaging to kinematic data to identify parameters that distinguish erroneous gestures.

Results: Executional error frequency varies by task and gesture and correlates with skill level. Some of the predominant error modes in each gesture can be distinguished by analyzing error-specific kinematic parameters. Procedural errors can lead to lower performance scores and longer demonstration times, but they also depend on surgical style.

Conclusions: This study provides preliminary evidence that automated error detection can give surgical trainees context-dependent, quantitative feedback for improving their performance.
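The abstract does not say which similarity measure or averaging scheme the study uses. As a minimal sketch under our own assumptions, the two-sample Kolmogorov-Smirnov (KS) statistic stands in below for "distribution similarity analysis" between a kinematic parameter in normal and erroneous instances of a gesture, and linear time-normalization followed by a pointwise mean stands in for "trajectory averaging". The function names (`ks_statistic`, `resample`, `average_trajectory`) and the toy values are hypothetical illustrations, not the authors' code or data.

```python
from bisect import bisect_right
from typing import List, Sequence


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of a and b (0 = identical, 1 = disjoint).
    Assumption: KS is our chosen similarity measure, not necessarily the paper's."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    d = 0.0
    # The supremum of |F_a - F_b| is attained at one of the pooled sample points.
    for x in a_sorted + b_sorted:
        cdf_a = bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect_right(b_sorted, x) / len(b_sorted)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def resample(traj: Sequence[float], n: int) -> List[float]:
    """Linearly interpolate a 1-D trajectory onto n evenly spaced samples,
    so demonstrations of different durations share a common time axis."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if len(traj) == 1:
        return [float(traj[0])] * n
    out = []
    for i in range(n):
        t = i * (len(traj) - 1) / (n - 1)  # fractional index into traj
        lo = int(t)
        hi = min(lo + 1, len(traj) - 1)
        frac = t - lo
        out.append(traj[lo] * (1 - frac) + traj[hi] * frac)
    return out


def average_trajectory(trajs: Sequence[Sequence[float]], n: int) -> List[float]:
    """Time-normalize each trajectory to n samples, then take the pointwise mean."""
    resampled = [resample(t, n) for t in trajs]
    return [sum(col) / len(col) for col in zip(*resampled)]


if __name__ == "__main__":
    # Hypothetical toy values for one kinematic parameter (e.g. a gripper
    # angle) in normal vs. erroneous instances of the same gesture.
    normal = [0.10, 0.12, 0.11, 0.13, 0.12]
    erroneous = [0.20, 0.25, 0.18, 0.22, 0.24]
    print("KS distance:", ks_statistic(normal, erroneous))
    print("Mean trajectory:", average_trajectory([[0.0, 2.0], [2.0, 4.0, 6.0]], 3))
```

A KS distance near 1 means the parameter's values barely overlap between the two groups, which is the kind of signal one would look for when selecting error-specific parameters. Linear resampling ignores differences in pacing within a gesture; dynamic time warping is a common alternative when that matters.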
