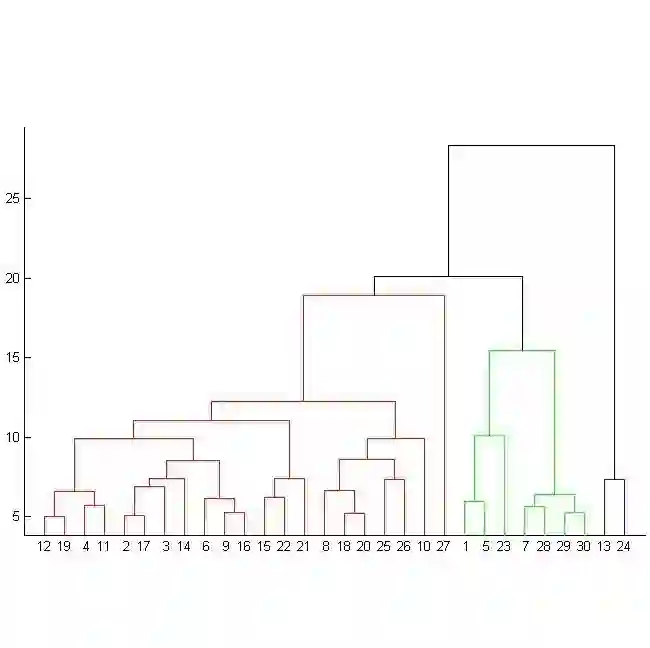

The main question is: why and how can we ever predict from a finite sample? Statistical learning theory does not answer this question. Here, I suggest that prediction requires belief in the "predictability" of the underlying dependence, and that learning involves a search for the hypothesis under which these beliefs are violated the least, given the observations. The measure of these violations ("errors") for given data, a hypothesis, and a particular type of predictability belief is formalized as the concept of incongruity in the modal Logic of Observations and Hypotheses (LOH). Using many popular textbook learners as examples (from hierarchical clustering to k-NN and SVM), I show that each of them minimizes its own version of incongruity. In addition, the concept of incongruity is shown to be flexible enough to formalize some important data analysis problems that are not considered part of ML.
