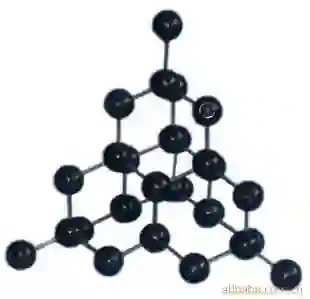

In this work, we propose a robust framework that uses adversarially robust training to safeguard machine learning models against perturbed test data. We achieve this by incorporating, for each sample, the worst-case additive adversarial error within a fixed budget during model estimation. Our main focus is a plug-and-play solution that can be incorporated into existing machine learning algorithms with minimal changes. To that end, we derive ready-to-use solutions for several widely used loss functions under a variety of norm constraints on the adversarial perturbation. These cover supervised and unsupervised ML problems, including regression, classification, two-layer neural networks, graphical models, and matrix completion. Depending on the problem, the solution is available in closed form or is obtained through a one-dimensional optimization, semidefinite programming, difference-of-convex programming, or a sorting-based algorithm. Finally, we validate our approach on real-world datasets and show significant performance improvements with very little computational overhead. The gains hold both for supervised problems such as regression and classification and for unsupervised problems such as matrix completion and learning graphical models.
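To make the "worst-case additive error within a fixed budget" idea concrete, here is a minimal sketch for linear models. It assumes per-sample perturbations $\delta_i$ of the inputs with $\|\delta_i\|_p \le \varepsilon$ and $p \in \{1, 2, \infty\}$. For a linear model, the inner maximization has a well-known closed form through the dual norm $\|\cdot\|_q$ (with $1/p + 1/q = 1$). For example, $\max_{\|\delta\|_p \le \varepsilon} (y - w^\top(x+\delta))^2 = (|y - w^\top x| + \varepsilon\|w\|_q)^2$. This is a standard textbook derivation and not necessarily the exact formulation used in this work. All function names below (`robust_squared_loss`, `robust_logistic_loss`, `worst_case_perturbations_squared`, and so on) are hypothetical.

```python
import numpy as np


def dual_norm(w, p):
    """||w||_q, the dual of the l_p norm (1/p + 1/q = 1), for p in {1, 2, inf}."""
    if p == 1:
        return np.max(np.abs(w))
    if p == 2:
        return np.linalg.norm(w)
    if p == np.inf:
        return np.sum(np.abs(w))
    raise ValueError("p must be 1, 2 or np.inf")


def dual_direction(w, p):
    """A vector u with ||u||_p <= 1 and u @ w == ||w||_q (it attains the dual norm)."""
    if p == 1:
        u = np.zeros(w.shape)
        j = np.argmax(np.abs(w))
        u[j] = np.sign(w[j])
        return u
    if p == 2:
        n = np.linalg.norm(w)
        return w / n if n > 0 else np.zeros(w.shape)
    if p == np.inf:
        return np.sign(w).astype(float)
    raise ValueError("p must be 1, 2 or np.inf")


def robust_squared_loss(w, X, y, eps, p=2):
    """Mean over samples of max_{||d_i||_p <= eps} (y_i - w @ (x_i + d_i))^2.

    Closed form per sample: (|y_i - w @ x_i| + eps * ||w||_q)^2.
    """
    residual = np.abs(y - X @ w)
    return np.mean((residual + eps * dual_norm(w, p)) ** 2)


def robust_logistic_loss(w, X, y, eps, p=2):
    """Mean of max_{||d_i||_p <= eps} log(1 + exp(-y_i w @ (x_i + d_i))), y_i in {-1, +1}.

    Closed form per sample: log(1 + exp(-(y_i w @ x_i - eps * ||w||_q))).
    """
    margin = y * (X @ w) - eps * dual_norm(w, p)
    return np.mean(np.logaddexp(0.0, -margin))


def worst_case_perturbations_squared(w, X, y, eps, p=2):
    """Per-sample perturbations D (row i is d_i) that attain the squared-loss maximum."""
    sign_r = np.sign(y - X @ w)  # push each residual further away from zero
    return -eps * sign_r[:, None] * dual_direction(w, p)[None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(100, 5))
    w = rng.normal(size=5)
    y = X @ w + 0.1 * rng.normal(size=100)
    for p in (1, 2, np.inf):
        D = worst_case_perturbations_squared(w, X, y, eps=0.2, p=p)
        attacked = np.mean((y - (X + D) @ w) ** 2)
        # Closed form and explicit attack agree up to floating-point rounding.
        print(p, robust_squared_loss(w, X, y, 0.2, p), attacked)
```

The robust objective is still an ordinary function of $w$, so it can be handed to whatever optimizer already minimizes the nominal loss. Under these assumptions, that substitution is the sense in which such closed forms behave as a plug-and-play replacement.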

翻译:在这项工作中,我们提出一个强有力的框架,利用对抗性强的训练来保障机器学习模型不受扰动测试数据的影响。我们通过将最坏的附加对抗性差纳入模型估计期间每个样本的固定预算来实现这一点。我们的主要重点是提供一个插头和游戏解决方案,可以纳入现有的机器学习算法中,但变化最小。为此,我们为几个广泛使用的损失函数找到现成的解决方案,对各种受监督和不受监督的 ML 问题,包括回归、分类、两层神经网络、图形模型和矩阵完成等,进行对抗性干扰规范限制。这些解决方案要么是封闭式、1D优化、半确定式编程、组合编程差异或基于分类的算法。最后,我们验证了我们的方法,方法是在真实世界数据集上显示显著的性能改进,用于监管的问题,如回归和分类,以及诸如矩阵完成和学习图形模型等未经纠正的问题,而计算间接费用很少。