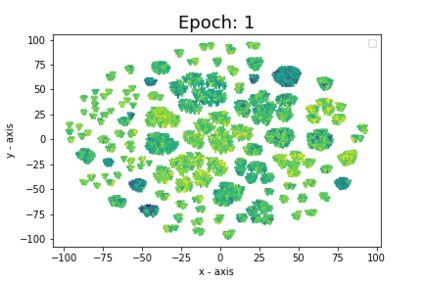

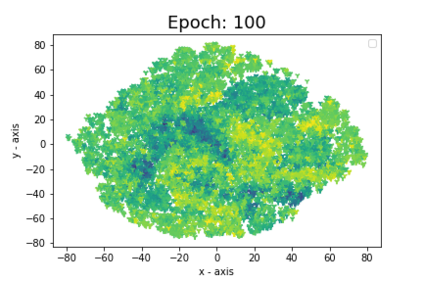

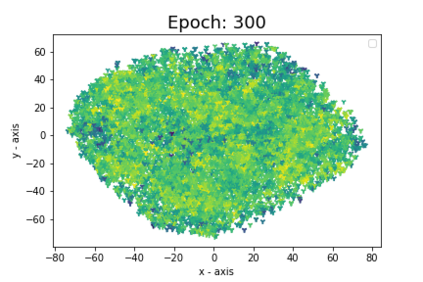

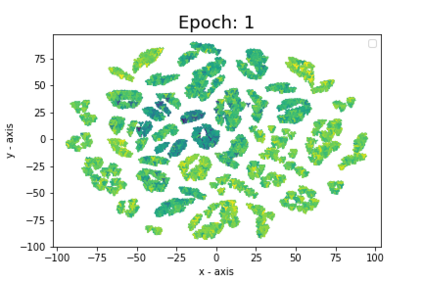

Neural Architecture Search (NAS) has recently gained increasing attention as a class of approaches that automatically search over an input space of network architectures. A crucial part of the NAS pipeline is the encoding of the architecture, which consists of the applied computational blocks, namely the operations and the links between them. Most existing approaches either fail to capture the structural properties of the architectures or use hand-engineered vectors to encode the operator information. In this paper, we propose replacing fixed operator encodings with representations that are learned during the optimization process. This approach, which effectively captures the relations between different operations, leads to smoother and more accurate representations of the architectures and consequently to improved performance on the end task. Our extensive evaluation on the ENAS benchmark demonstrates the effectiveness of the proposed operation embeddings in generating highly accurate models, achieving state-of-the-art performance. Finally, our method produces top-performing architectures that share similar operation and graph patterns, highlighting a strong correlation between the structural properties of an architecture and its performance.
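The abstract gives no implementation details, so the following is only a minimal sketch of the general idea it describes: contrasting a fixed (one-hot) operator encoding with a learnable embedding table whose rows are updated during optimization. The operation names, the `OperationEmbedding` class, the `encode_architecture` helper and the plain SGD update are all illustrative assumptions, not the authors' code.

```python
import random

# Hypothetical operation vocabulary; the names are illustrative, not from the paper.
OPS = ["conv3x3", "conv5x5", "maxpool3x3", "avgpool3x3", "identity"]
OP_INDEX = {name: i for i, name in enumerate(OPS)}


def one_hot(op):
    """Fixed encoding: every pair of distinct operations is equally far apart."""
    vec = [0.0] * len(OPS)
    vec[OP_INDEX[op]] = 1.0
    return vec


class OperationEmbedding:
    """Learnable encoding (assumed design): one trainable row of `dim` floats per operation."""

    def __init__(self, dim, seed=0):
        rng = random.Random(seed)
        self.table = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in OPS]

    def lookup(self, op):
        return self.table[OP_INDEX[op]]

    def sgd_step(self, op, grad, lr=0.1):
        """Update only the row of `op`, given the loss gradient with respect to that row."""
        row = self.table[OP_INDEX[op]]
        for k, g in enumerate(grad):
            row[k] -= lr * g


def encode_architecture(ops, adjacency, encode_op):
    """Pair each node's operation vector with its outgoing links (its adjacency row)."""
    return [(encode_op(op), list(adjacency[i])) for i, op in enumerate(ops)]


# Usage: the same three-node cell encoded with fixed and with learnable operator vectors.
emb = OperationEmbedding(dim=3)
cell_ops = ["conv3x3", "maxpool3x3", "identity"]
cell_adj = [[0, 1, 1], [0, 0, 1], [0, 0, 0]]
fixed = encode_architecture(cell_ops, cell_adj, one_hot)
learned = encode_architecture(cell_ops, cell_adj, emb.lookup)
```

With one-hot vectors, no training signal can move two operations closer together. With the embedding table, gradient updates are free to place related operations (for example, the two convolutions) near each other, which is the kind of relation the abstract says the learned representations capture.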


Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem