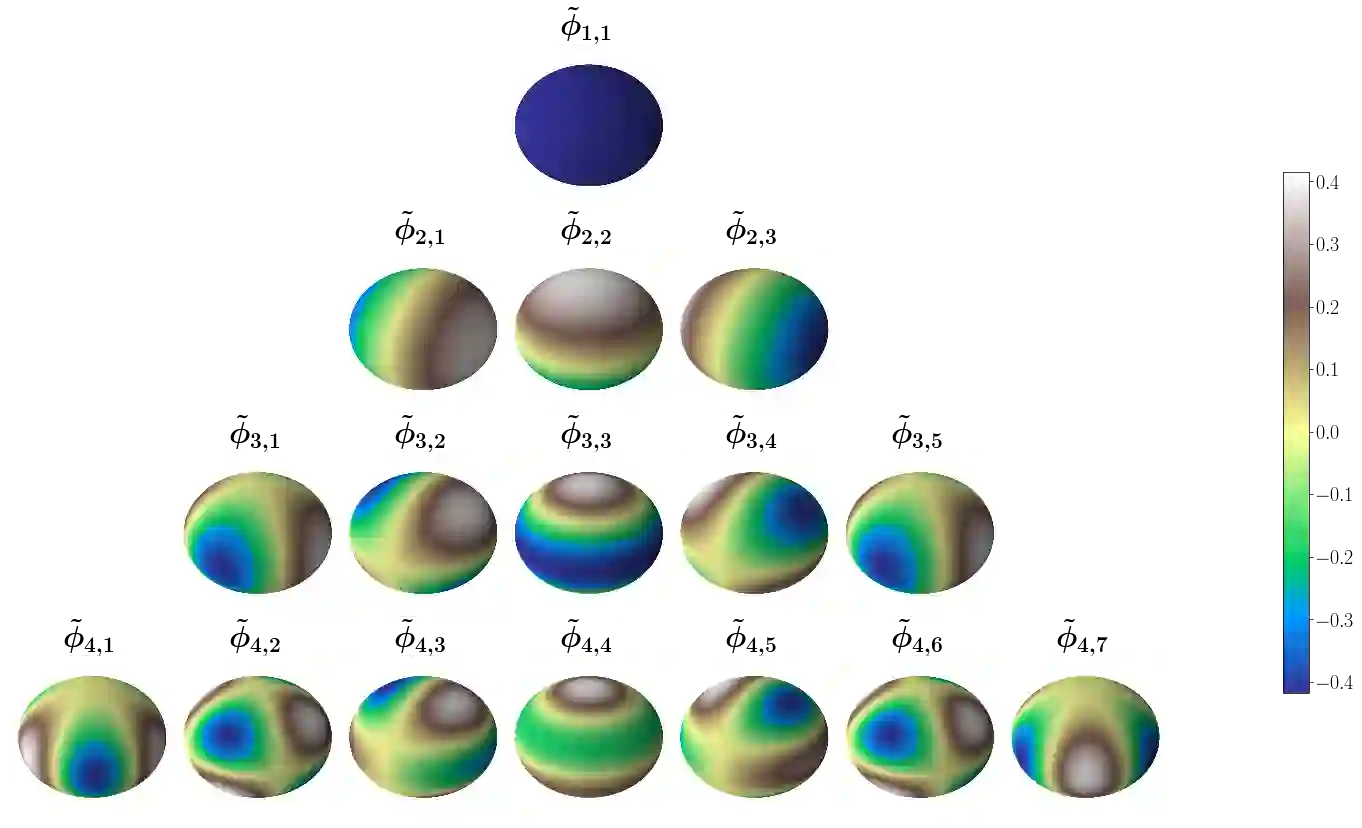

Artificial neural networks often achieve favorable generalization error despite being heavily overparameterized. It is well known that classical statistical learning methods often yield vacuous generalization error bounds for overparameterized neural networks. Adopting the recently developed Neural Tangent (NT) kernel theory, we prove uniform generalization bounds for overparameterized neural networks in kernel regimes, when the true data generating model belongs to the reproducing kernel Hilbert space (RKHS) corresponding to the NT kernel. Importantly, our bounds capture the exact error rates depending on the differentiability of the activation functions. To establish these bounds, we propose the information gain of the NT kernel as a measure of the complexity of the learning problem. Our analysis uses a Mercer decomposition of the NT kernel in the basis of spherical harmonics and the decay rate of the corresponding eigenvalues. As a byproduct of our results, we show the equivalence between the RKHS corresponding to the NT kernel and its counterpart corresponding to the Matérn family of kernels, which induces a very general class of models. We further discuss the implications of our analysis for some recent results on regret bounds for reinforcement learning algorithms that use overparameterized neural networks.
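
To make the notion of information gain concrete, the following is a minimal numerical sketch and not the paper's own construction. It assumes the commonly used closed form of the NT kernel for a two-layer ReLU network without bias on the unit sphere, and the usual definition of information gain for a fixed set of inputs, `0.5 * log det(I + K / noise_var)`. The function names, the noise variance `0.1`, the dimension, and the sample sizes are all illustrative choices. Evaluating the quantity on randomly drawn points only lower-bounds the maximal information gain, which takes a supremum over input sets.

```python
import numpy as np


def relu_ntk(X, Y):
    """Assumed closed-form NT kernel of a two-layer ReLU network (no bias).

    Rows of X and Y are expected to have unit Euclidean norm, so that
    u = <x, y> is the cosine of the angle between the two inputs.
    """
    u = np.clip(X @ Y.T, -1.0, 1.0)   # guard against round-off outside [-1, 1]
    theta = np.arccos(u)
    kappa0 = (np.pi - theta) / np.pi  # arc-cosine kernel of degree 0
    kappa1 = (u * (np.pi - theta) + np.sqrt(1.0 - u**2)) / np.pi  # degree 1
    return u * kappa0 + kappa1


def information_gain(K, noise_var):
    """Information gain 0.5 * log det(I + K / noise_var) for a kernel matrix K."""
    n = K.shape[0]
    _, logdet = np.linalg.slogdet(np.eye(n) + K / noise_var)
    return 0.5 * logdet


def sample_sphere(n, d, rng):
    """Draw n points uniformly from the unit sphere in R^d."""
    X = rng.standard_normal((n, d))
    return X / np.linalg.norm(X, axis=1, keepdims=True)


rng = np.random.default_rng(0)
X = sample_sphere(200, 3, rng)        # illustrative: 200 points in R^3
K = relu_ntk(X, X)

sizes = (25, 50, 100, 200)
gains = [information_gain(K[:n, :n], noise_var=0.1) for n in sizes]
for n, g in zip(sizes, gains):
    print(f"n = {n:3d}  information gain = {g:.3f}")
```

The printed values grow with the number of points but stay below the trivial bound `0.5 * n * log(1 + 2 / noise_var)`. How fast they grow is governed by the eigenvalue decay of the kernel, which is the quantity the analysis in the abstract ties to the smoothness of the activation function.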
