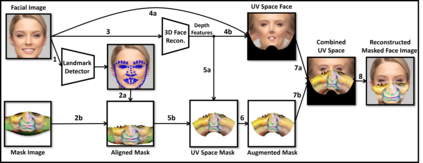

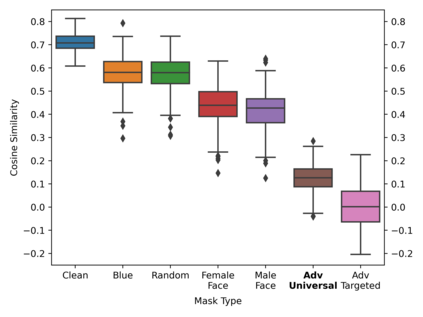

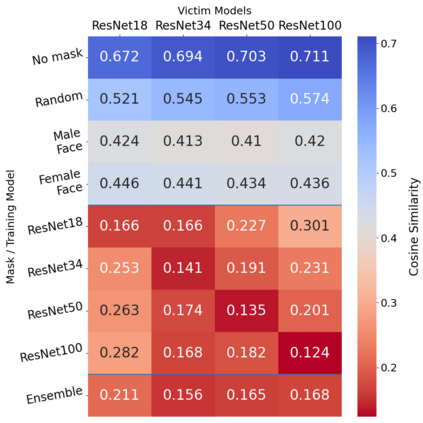

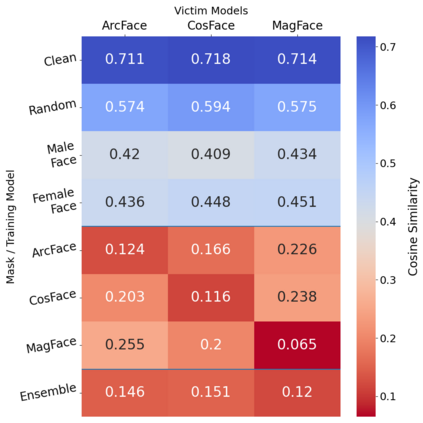

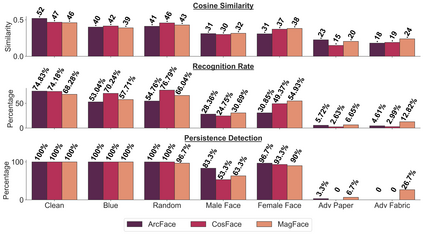

Deep learning-based facial recognition (FR) models have demonstrated state-of-the-art performance in the past few years, even after wearing protective medical face masks became commonplace during the COVID-19 pandemic. Given the outstanding performance of these models, the machine learning research community has shown increasing interest in challenging their robustness. Researchers first presented adversarial attacks in the digital domain, and later transferred these attacks to the physical domain. However, physical-domain attacks are often conspicuous and may therefore raise suspicion in real-world environments (e.g., airports). In this paper, we propose Adversarial Mask, a physical universal adversarial perturbation (UAP) against state-of-the-art FR models that is applied to face masks in the form of a carefully crafted pattern. In our experiments, we examined how well our adversarial mask transfers to a wide range of FR model architectures and datasets. In addition, we validated our adversarial mask's effectiveness in real-world experiments (a CCTV use case) by printing the adversarial pattern on a fabric face mask. In these experiments, the FR system was able to identify only 3.34% of the participants wearing the mask (compared to a minimum of 83.34% with the other evaluated masks). A demo of our experiments can be found at: https://youtu.be/_TXkDO5z11w.
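The idea of a universal perturbation confined to the face-mask area can be illustrated with a toy optimization. This is a minimal sketch under stated assumptions, not the authors' implementation: it uses a hypothetical linear embedding matrix `W` in place of a real FR network, random vectors in place of face images, a fixed binary `mask` marking the mask region, and an untargeted ("dodging") objective that lowers the cosine similarity between each masked face and its own clean embedding. All function and variable names are illustrative.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Row-wise cosine similarity between two (n, k) arrays."""
    num = np.sum(a * b, axis=1)
    den = np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1)
    return num / den


def apply_mask(x: np.ndarray, mask: np.ndarray, pattern: np.ndarray) -> np.ndarray:
    """Paste the same pattern into the mask region of every image (row of x)."""
    return (1.0 - mask) * x + mask * pattern


def train_universal_pattern(W, x, mask, steps=300, lr=0.5):
    """Projected gradient descent on ONE pattern shared by all images.

    Hypothetical objective: mean cosine similarity between the embedding of
    each masked image and the embedding of the same clean face.
    """
    ref = x @ W.T                         # (n, k) clean reference embeddings
    pattern = np.full(x.shape[1], 0.5)    # start from a plain gray mask
    history = []
    for _ in range(steps):
        a = apply_mask(x, mask, pattern) @ W.T           # (n, k)
        na = np.linalg.norm(a, axis=1, keepdims=True)
        nb = np.linalg.norm(ref, axis=1, keepdims=True)
        cos = np.sum(a * ref, axis=1, keepdims=True) / (na * nb)
        history.append(float(cos.mean()))
        # d cos / d a = b / (|a||b|) - cos * a / |a|^2
        grad_a = ref / (na * nb) - cos * a / na**2       # (n, k)
        # Chain rule through a = W x_adv, with d x_adv / d pattern = mask.
        grad_p = mask * (grad_a @ W).mean(axis=0)        # (d,)
        # Clip to [0, 1] as a stand-in for "the pattern must be printable".
        pattern = np.clip(pattern - lr * grad_p, 0.0, 1.0)
    a = apply_mask(x, mask, pattern) @ W.T
    history.append(float(cosine(a, ref).mean()))
    return pattern, history


# Toy usage with synthetic data (all values hypothetical).
rng = np.random.default_rng(0)
d, k, n = 64, 16, 32
W = rng.normal(size=(k, d)) / np.sqrt(d)   # stand-in "FR model"
x = rng.uniform(size=(n, d))               # stand-in flattened face images
mask = np.zeros(d)
mask[d // 2:] = 1.0                        # lower half = face-mask region
pattern, hist = train_universal_pattern(W, x, mask)
print(f"gray mask: {hist[0]:.3f}  adversarial pattern: {hist[-1]:.3f}")
```

Because a single `pattern` is shared across every image, the perturbation is universal rather than per-face. A physical attack would additionally have to account for how the pattern sits on differently shaped faces and how printing and lighting alter it; this sketch ignores those effects.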
