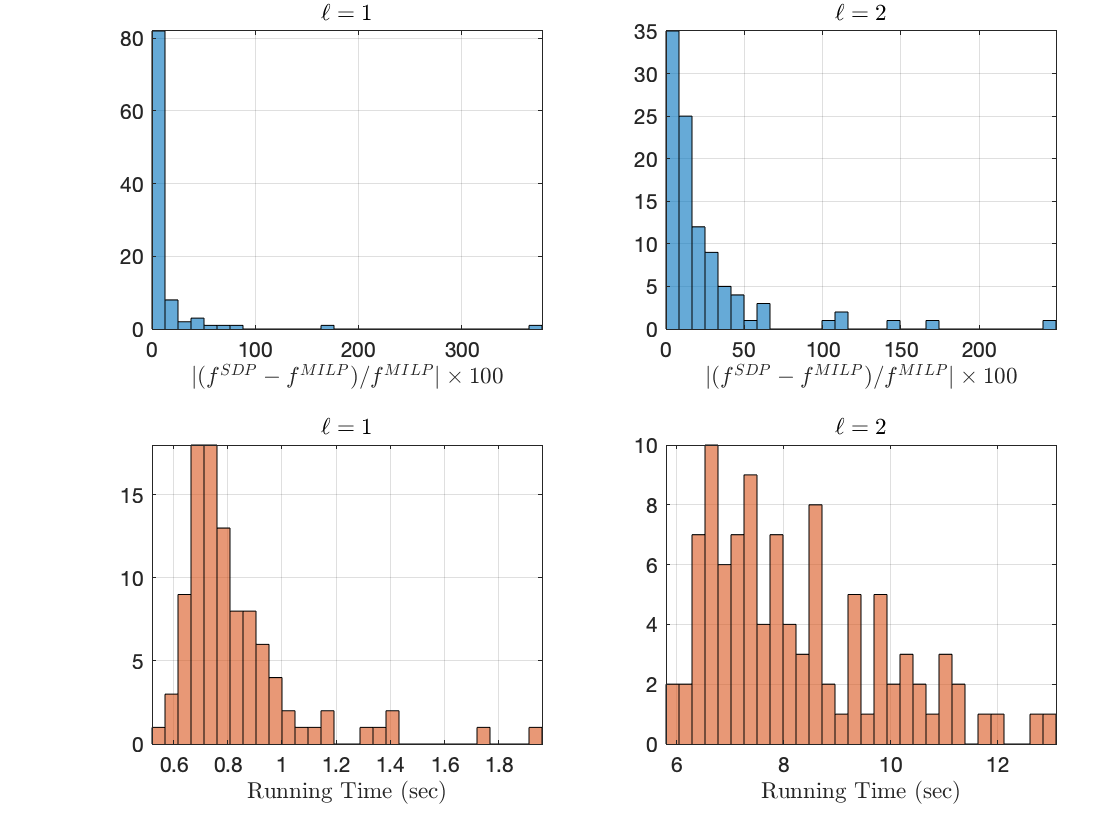

Certifying the safety or robustness of neural networks against input uncertainties and adversarial attacks is an emerging challenge in the area of safe machine learning and control. To provide such a guarantee, one must be able to bound the output of neural networks when their input changes within a bounded set. In this paper, we propose a semidefinite programming (SDP) framework to address this problem for feed-forward neural networks with general activation functions and input uncertainty sets. Our main idea is to abstract various properties of activation functions (e.g., monotonicity, bounded slope, bounded values, and repetition across layers) with the formalism of quadratic constraints. We then analyze the safety properties of the abstracted network via the S-procedure and semidefinite programming. Our framework spans the trade-off between conservatism and computational efficiency and applies to problems beyond safety verification. We evaluate the performance of our approach via numerical problem instances of various sizes.

翻译:在安全机器学习和控制领域,证明神经网络在投入不确定性和对抗性攻击方面的安全性或稳健性是一项新出现的挑战。为了提供这种保证,人们必须能够在神经网络投入变化与一组封闭时将其输出捆绑起来。在本文件中,我们提议了一个半无限制的编程框架来解决这个问题,供具有一般激活功能和输入不确定性组合的进料向神经网络使用。我们的主要想法是抽象地将激活功能的各种特性(例如单向、捆绑斜坡、约束值和跨层重复)与二次限制的形式主义结合起来。我们随后通过S-程序化和半无限制编程分析抽象网络的安全特性。我们的框架跨越了保守主义与计算效率之间的交易,并适用于安全核查以外的问题。我们通过不同大小的数字问题实例评估我们方法的绩效。