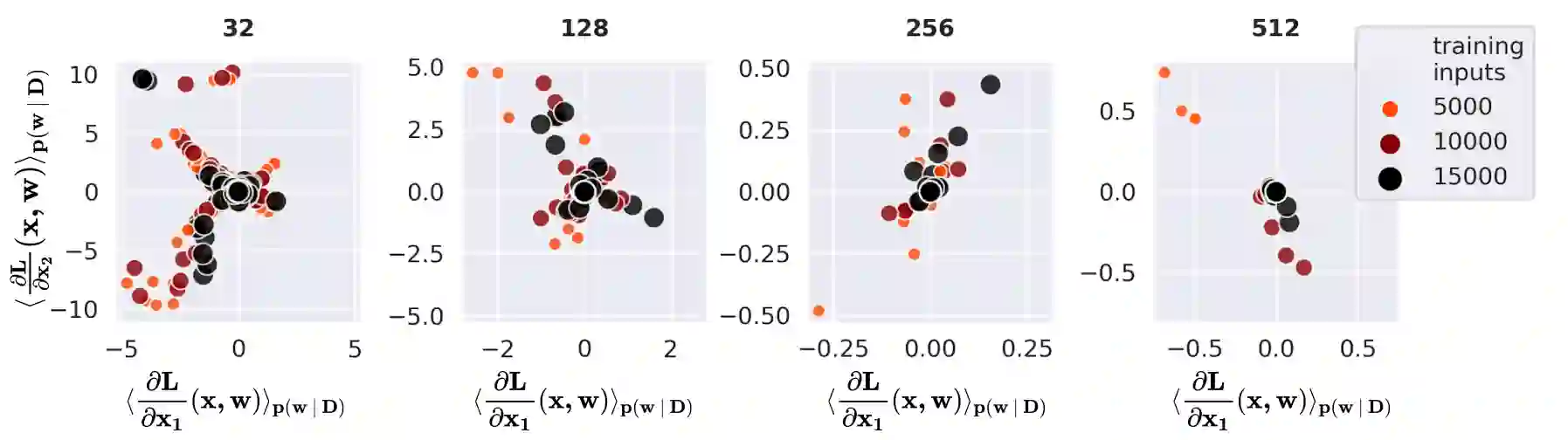

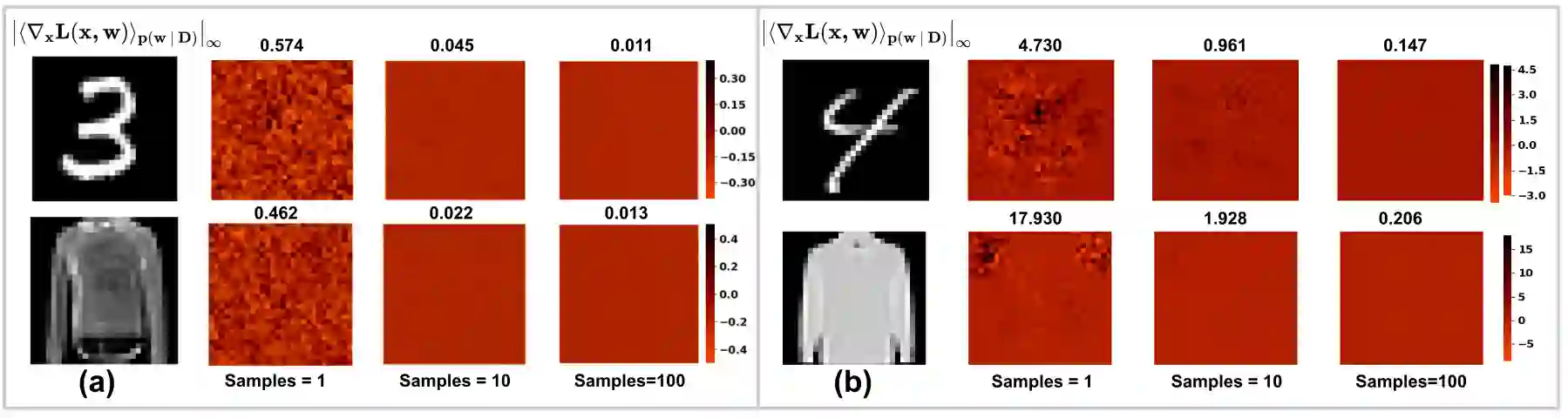

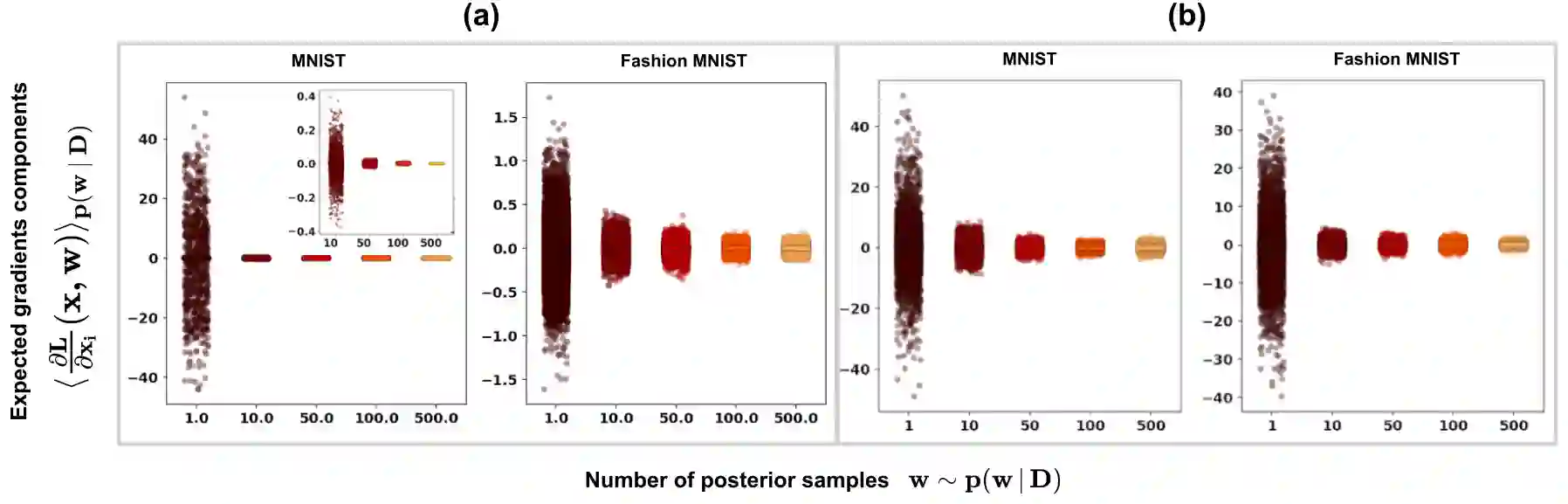

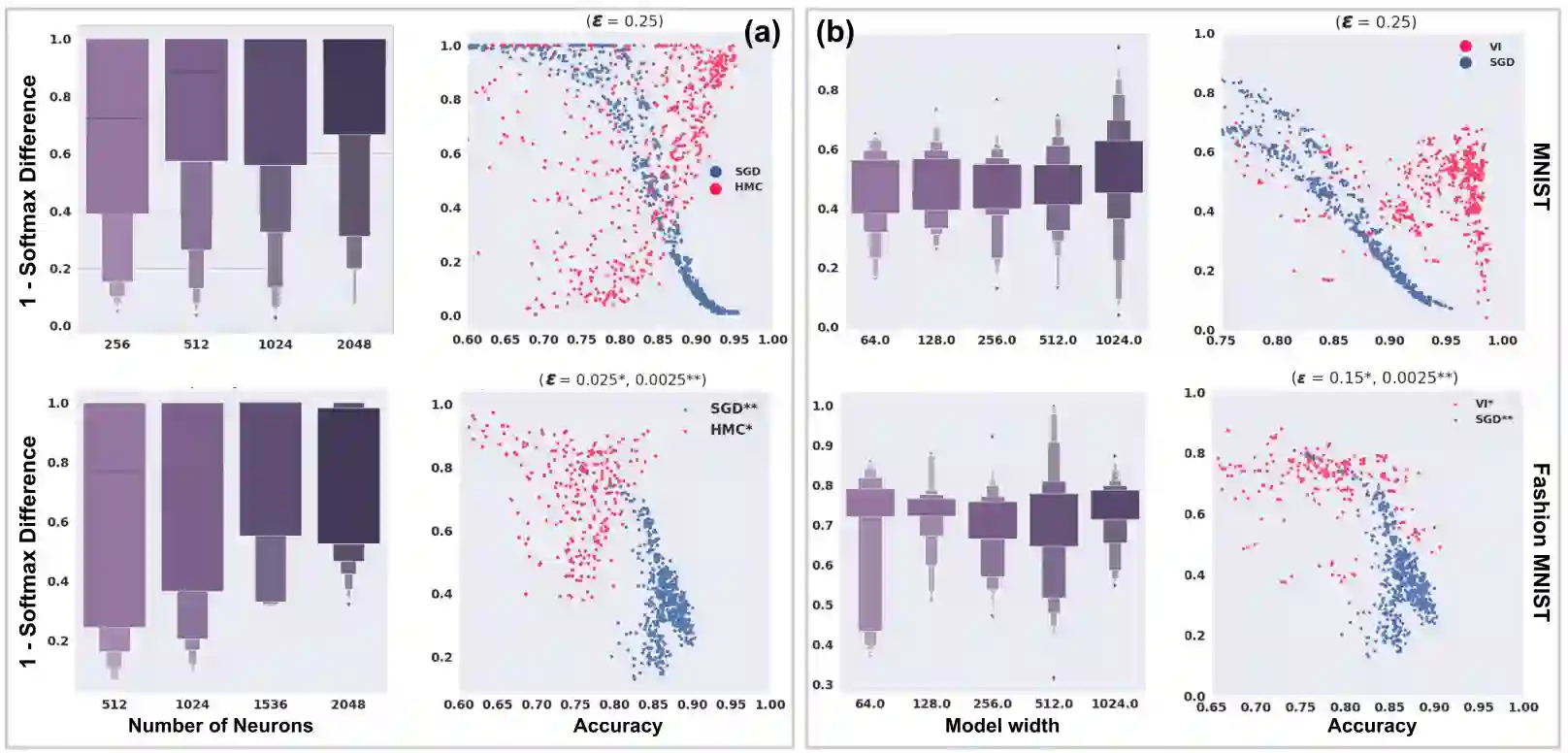

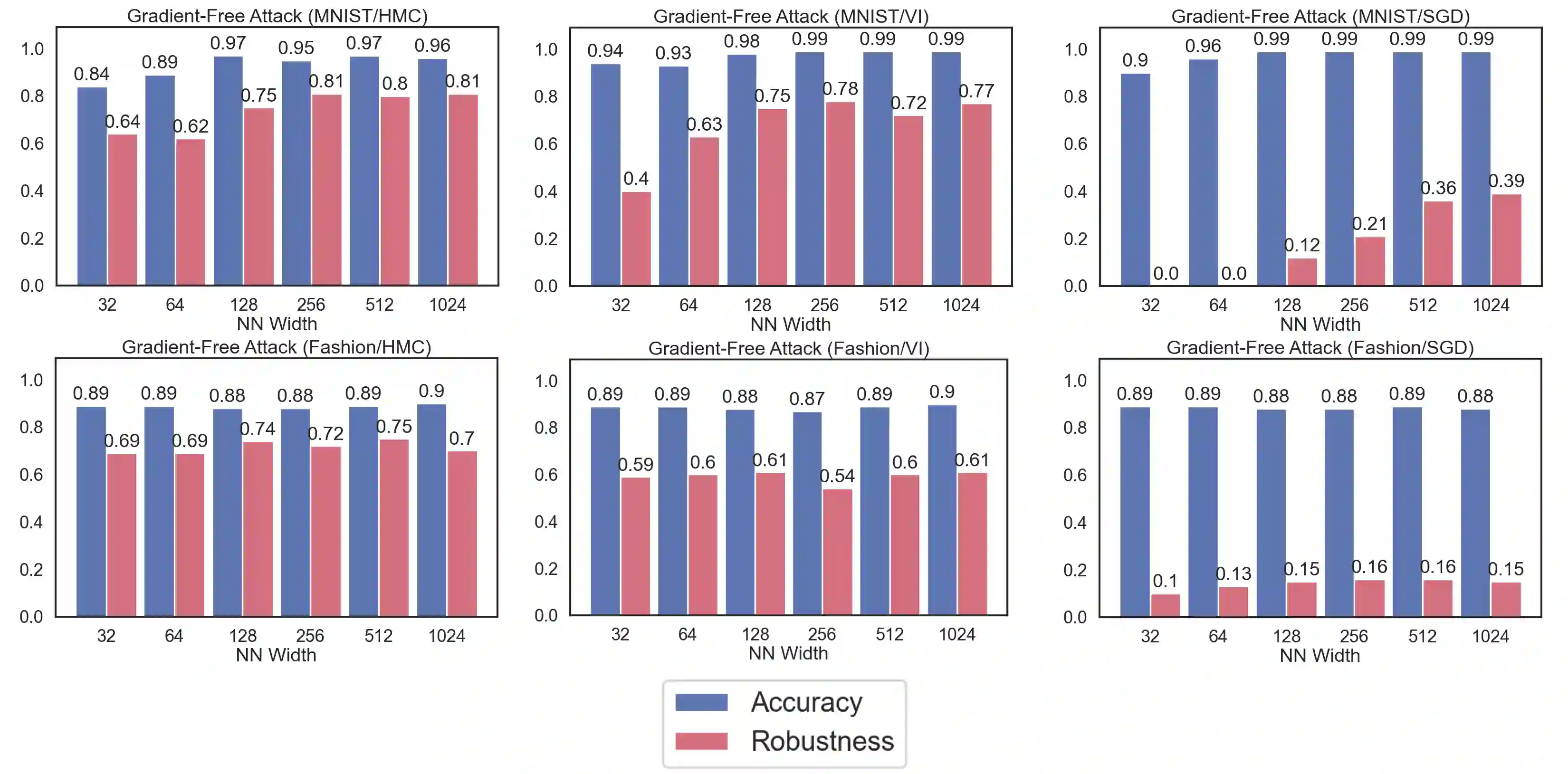

Vulnerability to adversarial attacks is one of the principal hurdles to the adoption of deep learning in safety-critical applications. Despite significant efforts, both practical and theoretical, training deep learning models that are robust to adversarial attacks is still an open problem. In this paper, we analyse the geometry of adversarial attacks in the large-data, overparameterized limit for Bayesian Neural Networks (BNNs). We show that, in the limit, vulnerability to gradient-based attacks arises as a result of degeneracy in the data distribution, i.e., when the data lies on a lower-dimensional submanifold of the ambient space. As a direct consequence, we demonstrate that in this limit BNN posteriors are robust to gradient-based adversarial attacks. Crucially, we prove that the expected gradient of the loss with respect to the BNN posterior distribution vanishes, even when each neural network sampled from the posterior is vulnerable to gradient-based attacks. Experimental results on the MNIST, Fashion MNIST, and half moons datasets, representing the finite-data regime, with BNNs trained with Hamiltonian Monte Carlo and Variational Inference, support this line of argument, showing that BNNs can display both high accuracy on clean data and robustness to both gradient-based and gradient-free adversarial attacks.
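The cancellation described above can be illustrated with a toy sketch. This is not the paper's experiment: it assumes a 2-D logistic-regression model, data confined to the first axis, and a hypothetical Gaussian "posterior" in which the weight orthogonal to the data is left at a zero-mean prior. All names and numerical values are illustrative assumptions. Each sampled model has a non-zero input gradient in the direction orthogonal to the data, but the average over samples is close to zero.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy setup (hypothetical values): the data lies on the x1-axis of a 2-D input space.
x = np.array([1.0, 0.0])   # a test point on the data manifold
y = 1.0                    # its binary label

n_samples = 10_000
# Assumed stand-in for posterior samples of the weights w = (w1, w2):
# w1 acts along the data and is tightly constrained by it,
# w2 acts orthogonally to the data and stays at a zero-mean prior.
w1 = rng.normal(2.0, 0.1, size=n_samples)
w2 = rng.normal(0.0, 1.0, size=n_samples)
W = np.stack([w1, w2], axis=1)          # shape (n_samples, 2)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# For logistic regression with cross-entropy loss, the gradient of the loss
# with respect to the input x is (p - y) * w, where p = sigmoid(w . x).
p = sigmoid(W @ x)                      # shape (n_samples,)
grads = (p - y)[:, None] * W            # shape (n_samples, 2)

# Component orthogonal to the data manifold (the x2 direction).
normal_per_sample = np.abs(grads[:, 1]).mean()   # typical size for a single model
normal_expected = abs(grads[:, 1].mean())        # size after averaging over samples

print("mean |orthogonal gradient| of individual samples:", normal_per_sample)
print("|orthogonal gradient| of the sample average:     ", normal_expected)
```

In this sketch only the orthogonal component averages out. The component along the data axis does not vanish here, because the toy model is not in the large-data, overparameterized limit that the analysis assumes.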
