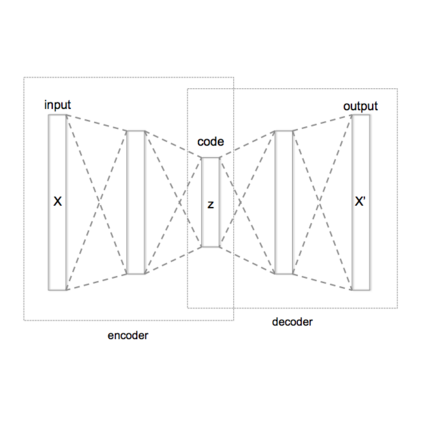

Latent variable models for text, when trained successfully, can accurately model the data distribution and capture global semantic and syntactic features of sentences. The prominent approach to training such models is the variational autoencoder (VAE). A VAE is nevertheless challenging to train and often converges to a trivial local optimum in which the latent variable is ignored and its posterior collapses into the prior, an issue known as posterior collapse. Various techniques have been proposed to mitigate this issue, most of which focus on improving the inference model to yield latent codes of higher quality. The present work instead proposes short-run dynamics for inference: initialized from the prior distribution of the latent variable, it runs a small number (e.g., 20) of Langevin dynamics steps guided by the posterior distribution. The major advantage of our method is that it requires neither a separate inference model nor the assumption of a simple geometry for the posterior distribution, yielding an automatic, natural and flexible inference engine. We show that models trained with short-run dynamics model the data more accurately than strong language model and VAE baselines, and exhibit no sign of posterior collapse. Analyses of the latent space show that interpolation in the latent space generates coherent sentences with smooth transitions, and that latent features from unsupervised pretraining improve classification over strong baselines. Together, these results reveal a well-structured latent space in our generative model.
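To make the inference procedure concrete, here is a minimal sketch of short-run Langevin inference on a toy model. It is not the authors' implementation. It assumes a hypothetical one-dimensional latent with prior $z \sim \mathcal{N}(0, 1)$ and a Gaussian "decoder" $x \mid z \sim \mathcal{N}(wz, \sigma^2)$ standing in for the neural language model, so that $\nabla_z \log p(z \mid x)$ has a closed form. It also assumes the standard (unadjusted) Langevin update $z_{k+1} = z_k + \frac{s^2}{2}\nabla_z \log p(z_k \mid x) + s\,\epsilon_k$ with $\epsilon_k \sim \mathcal{N}(0, 1)$. The step size `step_size = 0.3` and the names `grad_log_posterior` and `short_run_inference` are illustrative choices. The chain starts from the prior and runs a fixed, small number of steps (20), as described above.

```python
import math
import random

# Toy model (hypothetical, for illustration only):
#   prior    z ~ N(0, 1)
#   decoder  x | z ~ N(w * z, sigma^2)
# In the paper the decoder is a neural language model; a 1-D Gaussian stands in
# here so the gradient of log p(z | x) has a closed form.


def grad_log_posterior(z, x, w, sigma):
    """Gradient of log p(z) + log p(x | z) w.r.t. z.

    log p(x) does not depend on z, so the unnormalized posterior suffices.
    """
    grad_log_prior = -z
    grad_log_lik = w * (x - w * z) / sigma ** 2
    return grad_log_prior + grad_log_lik


def short_run_inference(x, w, sigma, n_steps=20, step_size=0.3, rng=random):
    """Draw one approximate posterior sample with short-run Langevin dynamics."""
    z = rng.gauss(0.0, 1.0)  # initialize from the prior N(0, 1)
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        # Assumed Langevin step: gradient ascent on log p(z | x) plus Gaussian noise.
        z = z + 0.5 * step_size ** 2 * grad_log_posterior(z, x, w, sigma) + step_size * noise
    return z


if __name__ == "__main__":
    rng = random.Random(0)
    x, w, sigma = 3.0, 2.0, 1.0
    samples = [short_run_inference(x, w, sigma, rng=rng) for _ in range(2000)]
    est_mean = sum(samples) / len(samples)
    # Exact Gaussian posterior for this toy model, for comparison.
    precision = 1.0 + w ** 2 / sigma ** 2
    exact_mean = (w * x / sigma ** 2) / precision
    print(f"short-run mean: {est_mean:.3f}, exact posterior mean: {exact_mean:.3f}")
    print(f"exact posterior std: {math.sqrt(1.0 / precision):.3f}")
```

In this toy setting the exact posterior is Gaussian, with mean $wx/\sigma^2 \,/\, (1 + w^2/\sigma^2) = 1.2$ for the values above. This makes it easy to check that 20 steps from the prior already land near it. No separate encoder network is trained: the only ingredients are the prior and the gradient of the joint log-density, which is the property the abstract emphasizes.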
