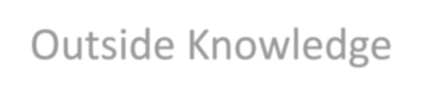

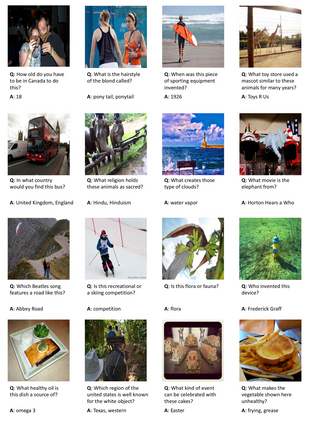

Visual Question Answering (VQA) in its ideal form lets us study reasoning in the joint space of vision and language and serves as a proxy for the AI task of scene understanding. However, most VQA benchmarks to date are focused on questions such as simple counting, visual attributes, and object detection that do not require reasoning or knowledge beyond what is in the image. In this paper, we address the task of knowledge-based visual question answering and provide a benchmark, called OK-VQA, where the image content is not sufficient to answer the questions, encouraging methods that rely on external knowledge resources. Our new dataset includes more than 14,000 questions that require external knowledge to answer. We show that the performance of the state-of-the-art VQA models degrades drastically in this new setting. Our analysis shows that our knowledge-based VQA task is diverse, difficult, and large compared to previous knowledge-based VQA datasets. We hope that this dataset enables researchers to open up new avenues for research in this domain. See http://okvqa.allenai.org to download and browse the dataset.

翻译:理想的视觉问题解答(VQA)让我们研究视觉和语言共同空间的推理,并充当AI现场理解任务的代理。然而,迄今为止,大多数VQA基准都侧重于简单计数、视觉属性和物体探测等问题,而这些问题并不要求推理或了解超出图像范围。在本文中,我们处理基于知识的视觉问题解答任务,并提供一个基准,称为 OK-VQA,其中图像内容不足以回答问题,鼓励使用外部知识资源的方法。我们的新数据集包括了超过14 000个需要外部知识回答的问题。我们显示,在这一新环境中,状态的VQA模型的性能急剧退化。我们的分析表明,我们基于知识的VQA任务与以往基于知识的VQA数据集相比是多种多样、困难和巨大的。我们希望这一数据集使研究人员能够打开这个领域的新的研究途径。见http://okvqa.allenai.org,以便下载和浏览数据集。