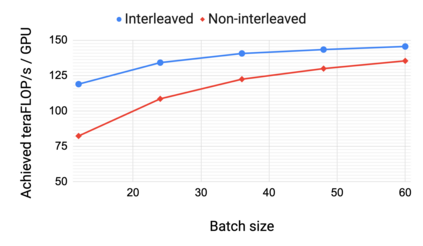

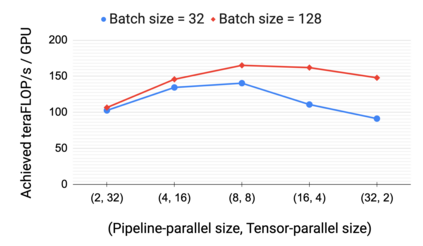

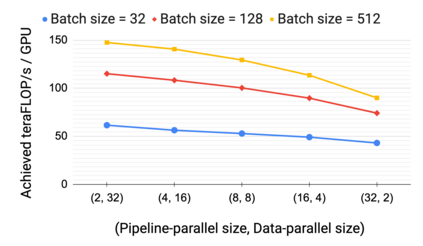

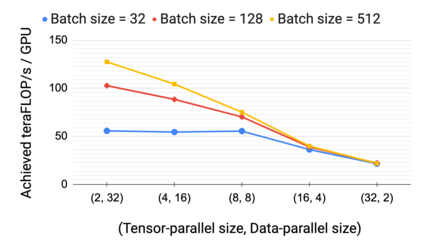

Large language models have led to state-of-the-art accuracies across a range of tasks. However, training these large models efficiently is challenging for two reasons: a) GPU memory capacity is limited, making it impossible to fit large models on a single GPU or even on a multi-GPU server; and b) the number of compute operations required to train these models can result in unrealistically long training times. New methods of model parallelism such as tensor and pipeline parallelism have been proposed to address these challenges; unfortunately, naive usage leads to fundamental scaling issues at thousands of GPUs for various reasons, e.g., expensive cross-node communication or idle periods spent waiting on other devices. In this work, we show how to compose different types of parallelism methods (tensor, pipeline, and data parallelism) to scale to thousands of GPUs, achieving a two-order-of-magnitude increase in the sizes of models we can efficiently train compared to existing systems. We discuss various implementations of pipeline parallelism and propose a novel schedule that can improve throughput by more than 10% with a memory footprint comparable to that of previously proposed approaches. We quantitatively study the trade-offs between tensor, pipeline, and data parallelism, and provide intuition as to how to configure distributed training of a large model. The composition of these techniques allows us to perform training iterations on a model with 1 trillion parameters at 502 petaFLOP/s on 3072 GPUs with achieved per-GPU throughput of 52% of peak; previous efforts to train similar-sized models achieve much lower throughput (36% of theoretical peak). Our code has been open-sourced at https://github.com/nvidia/megatron-lm.
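The headline throughput figures can be cross-checked with a little arithmetic. The sketch below takes only the three numbers quoted in the abstract (502 petaFLOP/s, 3072 GPUs, 52% of peak) and derives the per-GPU throughput and the per-GPU peak those numbers imply. The function names are illustrative and not part of the released code, and the derived peak is an inference from rounded figures rather than a value stated in the abstract.

```python
def per_gpu_teraflops(aggregate_petaflops: float, num_gpus: int) -> float:
    """Convert an aggregate rate in petaFLOP/s to a per-GPU rate in teraFLOP/s."""
    return aggregate_petaflops * 1000.0 / num_gpus


def implied_peak_teraflops(achieved_teraflops: float, fraction_of_peak: float) -> float:
    """Peak rate implied by an achieved rate and the stated fraction of peak."""
    return achieved_teraflops / fraction_of_peak


# Figures quoted in the abstract.
AGGREGATE_PFLOPS = 502.0
NUM_GPUS = 3072
FRACTION_OF_PEAK = 0.52

per_gpu = per_gpu_teraflops(AGGREGATE_PFLOPS, NUM_GPUS)
peak = implied_peak_teraflops(per_gpu, FRACTION_OF_PEAK)

print(f"achieved per GPU: {per_gpu:.1f} teraFLOP/s")
print(f"implied peak per GPU: {peak:.1f} teraFLOP/s")
```

Under these inputs the achieved rate comes to roughly 163 teraFLOP/s per GPU, which implies a per-GPU peak of roughly 314 teraFLOP/s; the small uncertainty reflects rounding in the published percentages.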
