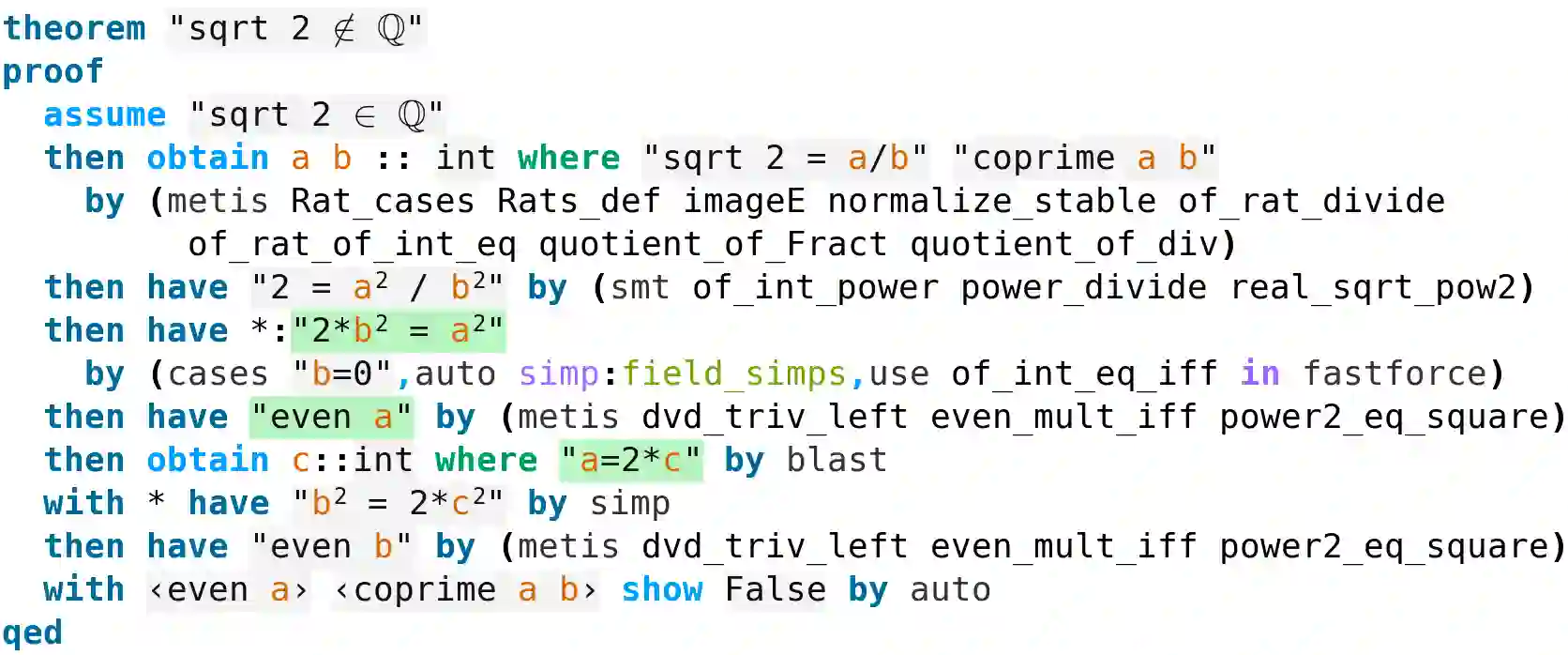

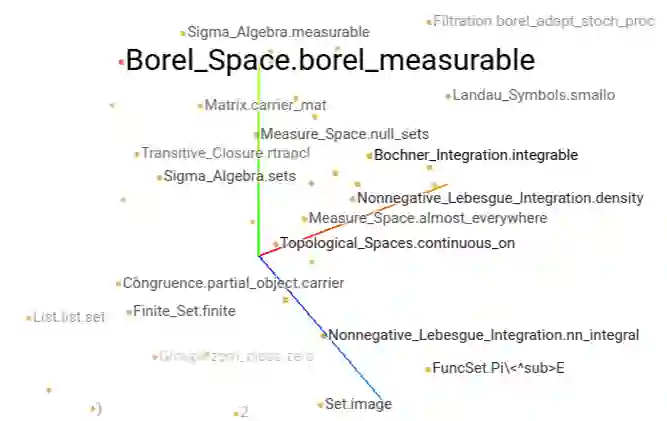

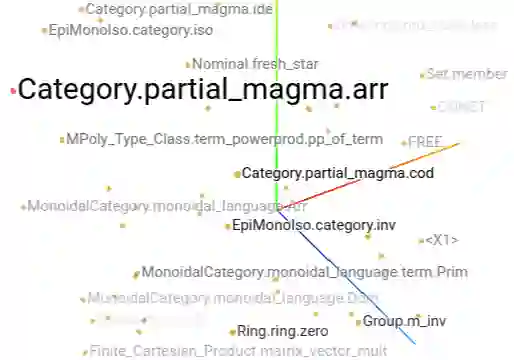

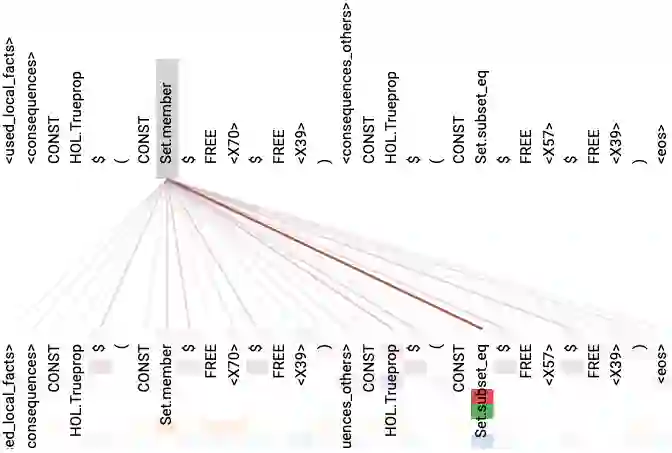

A well-defined benchmark is essential for measuring and accelerating research progress of machine learning models. In this paper, we present a benchmark for high-level mathematical reasoning and study the reasoning capabilities of neural sequence-to-sequence models. We build a non-synthetic dataset from the largest repository of proofs written by human experts in a theorem prover. The dataset has a broad coverage of undergraduate and research-level mathematical and computer science theorems. In our defined task, a model is required to fill in a missing intermediate proposition given surrounding proofs. This task provides a starting point for the long-term goal of having machines generate human-readable proofs automatically. Our experiments and analysis reveal that while the task is challenging, neural models can capture non-trivial mathematical reasoning. We further design a hierarchical transformer that outperforms the transformer baseline.

翻译:明确的基准对于测量和加速机器学习模型的研究进展至关重要。 在本文中, 我们为高级数学推理提供了一个基准, 并研究神经序列到序列模型的推理能力。 我们从人类专家在理论证明中撰写的最大证据库中建立一个非合成数据集。 该数据集广泛覆盖本科生和研究层次的数学和计算机科学理论。 在我们确定的任务中, 需要一个模型来填补给周围提供证据的缺失中间点。 这项任务为机器自动生成人类可读证据的长期目标提供了一个起点。 我们的实验和分析显示, 虽然任务艰巨, 神经模型可以捕捉非三轨数学推理。 我们进一步设计一个超越变压器基线的等级变压器。