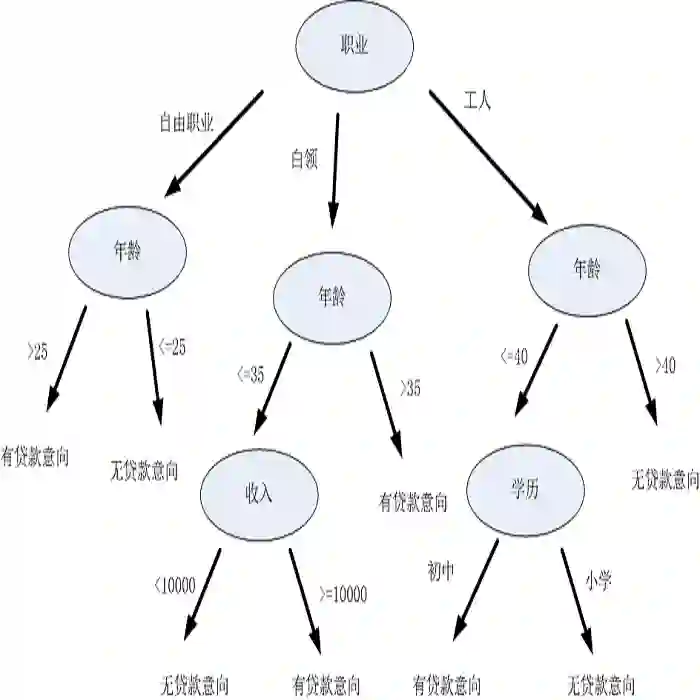

Federated learning is emerging as a machine learning technique that trains a model across multiple decentralized parties. It is renowned for preserving privacy because the data never leaves the computational devices, and recent approaches further enhance privacy by encrypting the messages exchanged between parties. However, we find that despite these efforts, federated learning remains vulnerable to privacy threats because of the interactive nature of training across different parties. In this paper, we analyze the privacy threats in industrial-level federated learning frameworks with secure computation, and reveal that such threats widely exist in typical machine learning models such as linear regression, logistic regression, and decision trees. For linear and logistic regression, we show through theoretical analysis that an attacker can invert the victim's entire private input given very little information. For the decision tree model, we launch an attack to infer the range of the victim's private inputs. All attacks are evaluated on popular federated learning frameworks and real-world datasets.
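The intuition behind range inference on a decision tree can be illustrated with a minimal, hypothetical sketch. This is not the paper's attack. The sketch assumes the attacker already knows two things for one sample: the thresholds of the splits on the victim's feature along the sample's decision path, and which branch the sample took at each split. How such information leaks in real frameworks is what the paper studies, and that is not reproduced here. Under this assumption, each split decision is a half-line constraint on the private value, and intersecting the constraints bounds the value to an interval. The function name `infer_range` and the "go left when x <= threshold" convention are illustrative assumptions.

```python
import math
from typing import List, Tuple

# Hypothetical observation for one split on the victim's private feature:
# (threshold, went_left). Assumed convention: a sample goes left when x <= threshold.
Observation = Tuple[float, bool]


def infer_range(path: List[Observation]) -> Tuple[float, float]:
    """Intersect the half-lines implied by each observed split decision.

    Returns (low, high) such that low < x <= high for the private value x.
    """
    low, high = -math.inf, math.inf
    for threshold, went_left in path:
        if went_left:
            # x <= threshold tightens the upper bound
            high = min(high, threshold)
        else:
            # x > threshold tightens the lower bound
            low = max(low, threshold)
    if low >= high:
        raise ValueError("inconsistent observations: empty interval")
    return low, high


if __name__ == "__main__":
    # Hypothetical path: three splits on the same private feature.
    observed = [(50.0, True), (30.0, False), (40.0, True)]
    print(infer_range(observed))  # (30.0, 40.0)
```

The more splits a sample's path makes on the same feature, the narrower the recovered interval becomes. This is why the tree's structure alone can reveal information about inputs that are never transmitted in the clear.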
