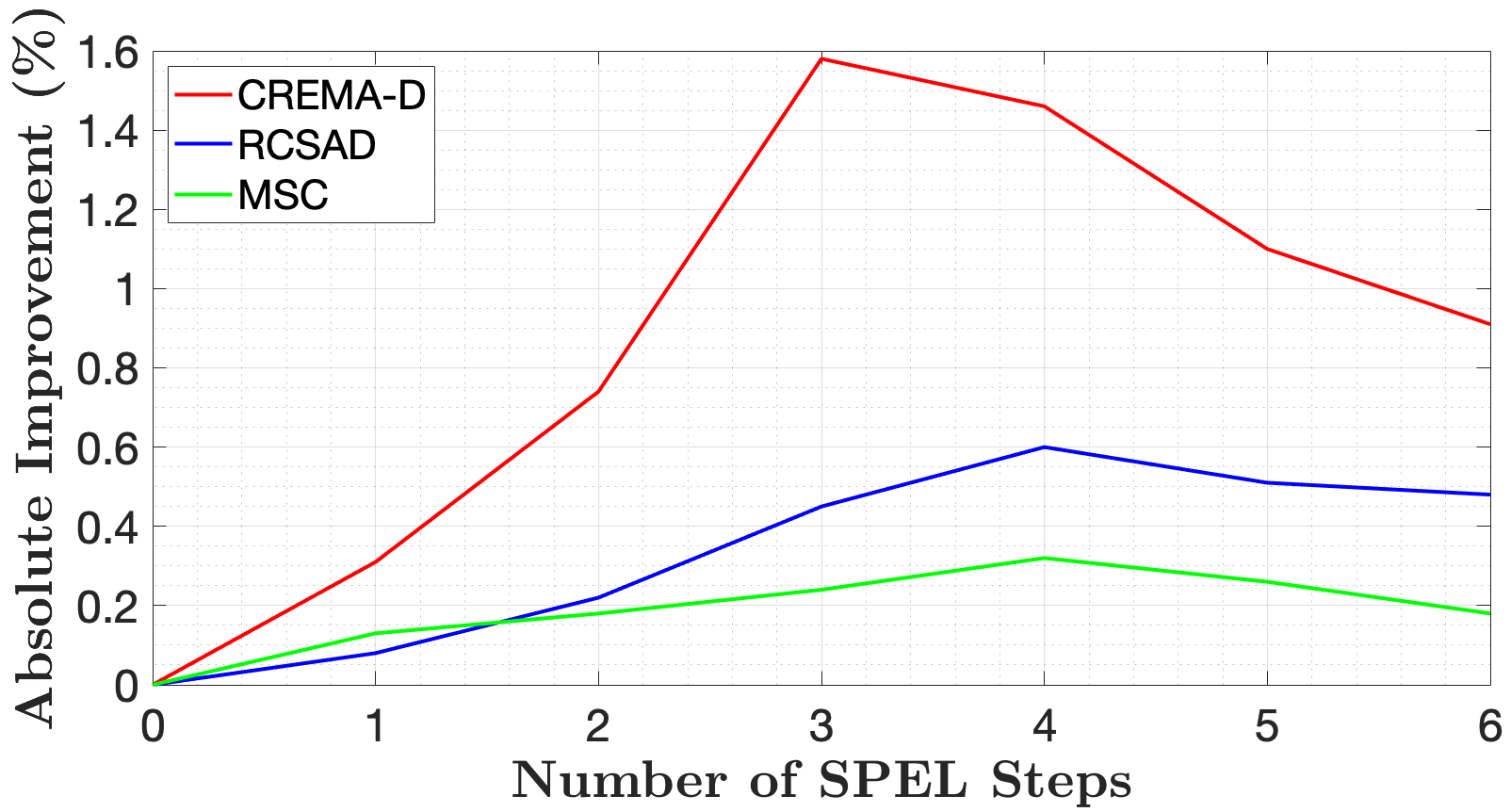

Combining multiple machine learning models into an ensemble is known to yield better performance than any of the individual models forming the ensemble, because the models can complement each other in making better decisions. Instead of just combining the models, we propose a self-paced ensemble learning scheme in which the models learn from each other over several iterations. During the self-paced learning process, which is based on pseudo-labeling, the individual models improve and the ensemble also gains knowledge about the target domain. To demonstrate the generality of our self-paced ensemble learning (SPEL) scheme, we conduct experiments on three audio tasks. Our empirical results indicate that SPEL significantly outperforms the baseline ensemble models. We also show that applying self-paced learning to individual models is less effective, which supports the idea that the models in the ensemble actually learn from each other.
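The abstract does not spell out the training procedure, so the following is only a minimal sketch of what a self-paced, pseudo-labeling ensemble loop could look like, not the authors' implementation. Everything named here is an assumption made for illustration: the toy `CentroidClassifier`, the helper names `ensemble_proba` and `self_paced_ensemble`, the averaging of class probabilities, and the linear pacing schedule controlled by the hypothetical `start_frac` parameter.

```python
import numpy as np


class CentroidClassifier:
    """Toy nearest-centroid model (hypothetical stand-in for a real model)."""

    def __init__(self, feature_idx):
        # Each model sees a different feature subset, so the models can disagree.
        self.feature_idx = feature_idx

    def fit(self, X, y):
        Xs = X[:, self.feature_idx]
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([Xs[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict_proba(self, X):
        Xs = X[:, self.feature_idx]
        # Squared distance to each class centroid, turned into softmax scores.
        d = ((Xs[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=2)
        logits = -d
        logits -= logits.max(axis=1, keepdims=True)
        p = np.exp(logits)
        return p / p.sum(axis=1, keepdims=True)


def ensemble_proba(models, X):
    """Average the class probabilities of all models (assumed combination rule)."""
    return np.mean([m.predict_proba(X) for m in models], axis=0)


def self_paced_ensemble(models, X_src, y_src, X_tgt, n_iters=3, start_frac=0.2):
    """Retrain every model on ensemble pseudo-labels, easiest target samples first."""
    for m in models:
        m.fit(X_src, y_src)
    for it in range(1, n_iters + 1):
        proba = ensemble_proba(models, X_tgt)
        confidence = proba.max(axis=1)
        pseudo = models[0].classes_[proba.argmax(axis=1)]
        # Assumed pacing: admit a growing fraction of the most confident samples.
        frac = min(1.0, start_frac * it)
        k = max(1, int(frac * len(X_tgt)))
        keep = np.argsort(-confidence)[:k]
        X_aug = np.concatenate([X_src, X_tgt[keep]])
        y_aug = np.concatenate([y_src, pseudo[keep]])
        # Each model learns from labels produced by the whole ensemble.
        for m in models:
            m.fit(X_aug, y_aug)
    return models


# Synthetic data: labeled source domain and a slightly shifted, unlabeled target.
rng = np.random.default_rng(0)
X_src = np.concatenate([rng.normal(0, 1, (50, 4)), rng.normal(3, 1, (50, 4))])
y_src = np.array([0] * 50 + [1] * 50)
X_tgt = np.concatenate([rng.normal(0.5, 1, (40, 4)), rng.normal(3.5, 1, (40, 4))])

models = [CentroidClassifier([0, 1]), CentroidClassifier([2, 3]), CentroidClassifier([0, 3])]
models = self_paced_ensemble(models, X_src, y_src, X_tgt)
pred = ensemble_proba(models, X_tgt).argmax(axis=1)
```

In this sketch the pseudo-labels come from the averaged ensemble output rather than from each model's own predictions, which is one way to express the claim that the models learn from each other and not only from themselves.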
