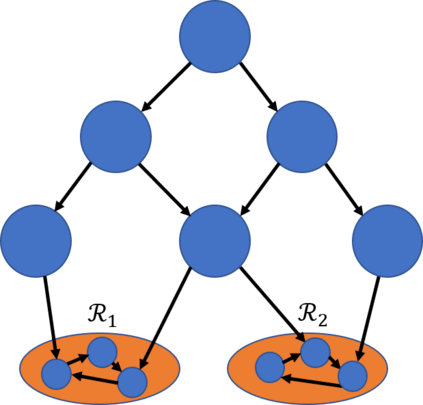

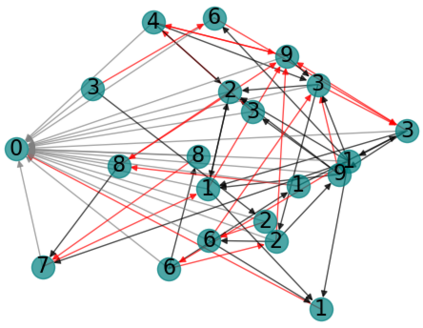

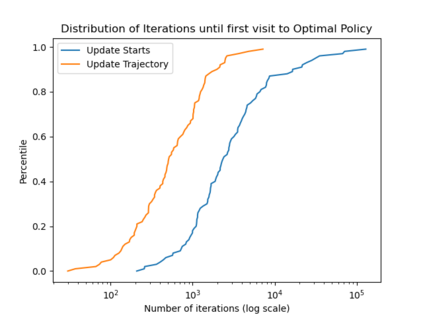

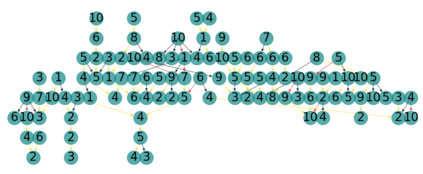

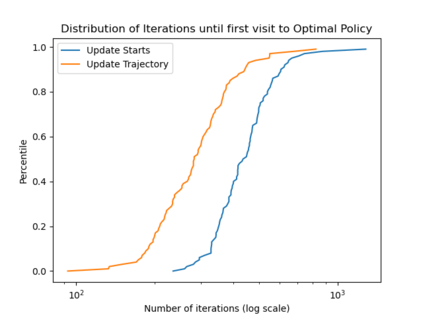

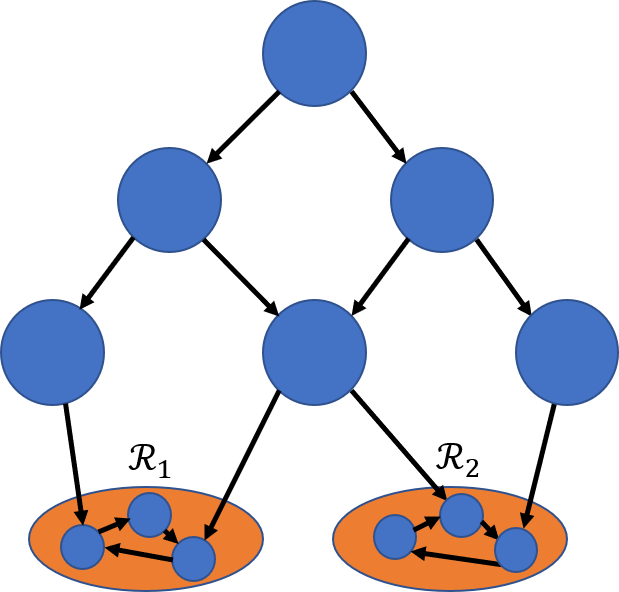

We consider Markov Decision Processes (MDPs) in which every stationary policy induces the same graph structure for the underlying Markov chain and further, the graph has the following property: if we replace each recurrent class by a node, then the resulting graph is acyclic. For such MDPs, we prove the convergence of the stochastic dynamics associated with a version of optimistic policy iteration (OPI), suggested in Tsitsiklis (2002), in which the values associated with all the nodes visited during each iteration of the OPI are updated.
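As a rough illustration of the update rule described above, here is a minimal Python sketch of OPI on a toy acyclic MDP. Everything specific in it is an assumption made for the example and is not taken from the paper: the four-state graph, the rewards, the discount factor `GAMMA`, the `1/n` step size, the fixed start state, and the names `MDP`, `q_value`, `greedy_action` and `run_opi`. Both actions in each state have the same set of successors, so every stationary policy induces the same graph, and the only recurrent class is a single absorbing terminal state.

```python
import random

GAMMA = 0.9      # discount factor (assumed for this example)
TERMINAL = 3     # single absorbing state, standing in for a recurrent class

# Hypothetical toy MDP: MDP[state][action] = (reward, {next_state: probability}).
# Both actions in a state share the same successors, so the graph is policy-independent.
MDP = {
    0: {0: (0.0, {1: 0.9, 2: 0.1}), 1: (0.0, {1: 0.1, 2: 0.9})},
    1: {0: (1.0, {3: 1.0}), 1: (0.0, {3: 1.0})},
    2: {0: (0.0, {3: 1.0}), 1: (2.0, {3: 1.0})},
}


def q_value(V, s, a):
    """One-step lookahead value of action a in state s under the estimate V."""
    reward, trans = MDP[s][a]
    return reward + GAMMA * sum(p * V[t] for t, p in trans.items())


def greedy_action(V, s):
    """Greedy action with respect to V (ties go to the lowest action index)."""
    return max(MDP[s], key=lambda a: q_value(V, s, a))


def run_opi(iterations=5000, seed=0):
    rng = random.Random(seed)
    V = {s: 0.0 for s in (0, 1, 2, 3)}   # value of the terminal state stays 0
    visits = {s: 0 for s in MDP}
    for _ in range(iterations):
        # Simulate one trajectory under the policy that is greedy w.r.t. the current V.
        # V is not modified until the trajectory ends.
        s, path = 0, []
        while s != TERMINAL:
            a = greedy_action(V, s)
            reward, trans = MDP[s][a]
            path.append((s, reward))
            s = rng.choices(list(trans), weights=list(trans.values()))[0]
        # Update every state visited on the trajectory toward its observed return.
        G = 0.0
        for s, reward in reversed(path):
            G = reward + GAMMA * G
            visits[s] += 1
            V[s] += (G - V[s]) / visits[s]
    return V


if __name__ == "__main__":
    print(run_opi())
```

In this toy example the optimal values are 1 for state 1, 2 for state 2, and `GAMMA * (0.1 * 1 + 0.9 * 2)` for state 0, so the estimates can be compared against them. Because the graph is acyclic, each state appears at most once per trajectory, so first-visit and every-visit updates coincide here.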

