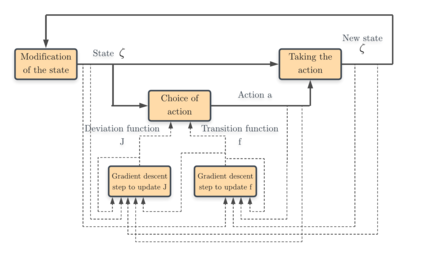

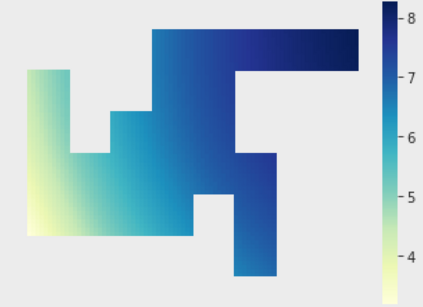

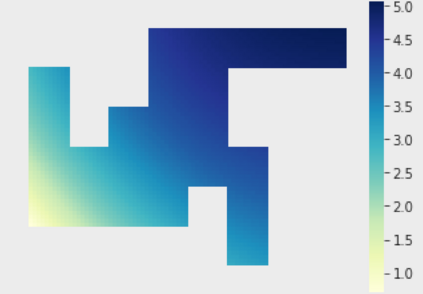

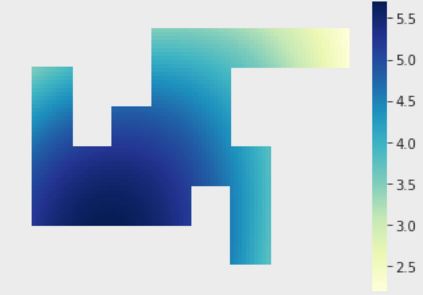

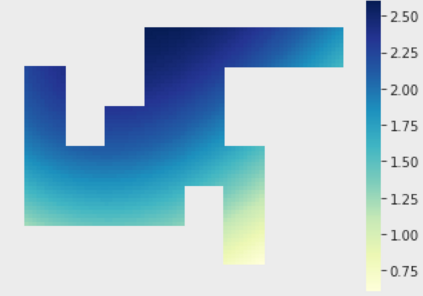

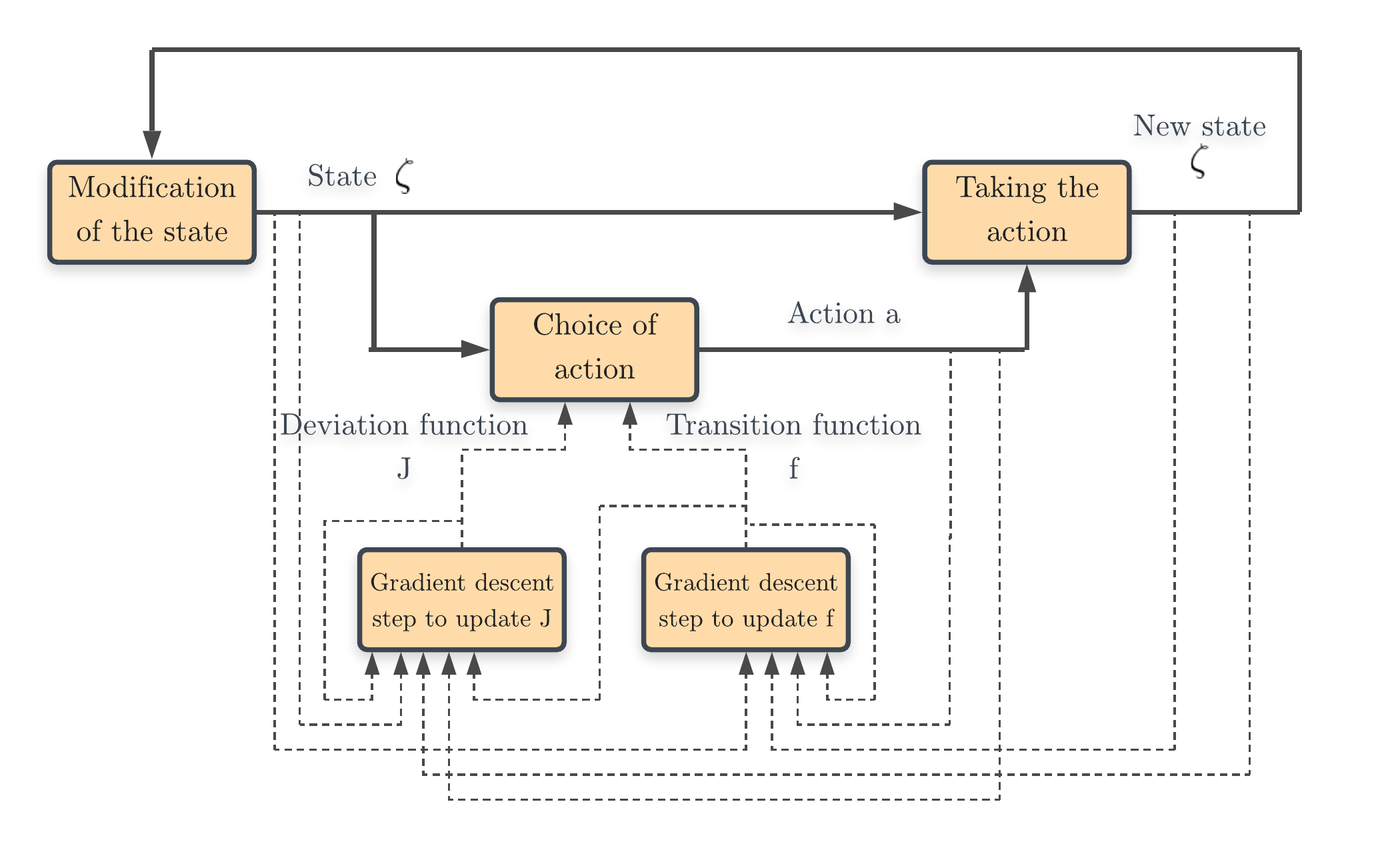

Homeostasis is a prevalent process by which living beings maintain their internal milieu around optimal levels. Multiple lines of evidence suggest that living beings learn to act so as to predictively ensure homeostasis (allostasis). A classical theory for such regulation is drive reduction, in which the drive is a function of the difference between the current and the optimal internal state. The recently introduced homeostatically regulated reinforcement learning (HRRL) theory links drive reduction to reinforcement learning by defining, within the reinforcement learning framework, a reward function based on the internal state of the agent. HRRL makes it possible to explain multiple eating disorders. However, a key shortcoming of the theory so far has been that its discrete-time modeling does not allow the agent's internal state to change continuously. Here, we propose an extension of the homeostatic reinforcement learning theory to an environment that is continuous in space and time, while maintaining the validity of the theoretical results and the behaviors explained by the model in discrete time. Inspired by the self-regulating mechanisms abundantly present in biology, we also introduce a model for the dynamics of the agent's internal state, which requires the agent to take actions continuously to maintain homeostasis. Based on the Hamilton-Jacobi-Bellman equation and function approximation with neural networks, we derive a numerical scheme that allows the agent to learn directly how its internal mechanism works and to choose appropriate action policies via reinforcement learning and an appropriate exploration of the environment. Our numerical experiments show that the agent does indeed learn to behave in a way that is beneficial to its survival in the environment, making our framework promising for modeling animal dynamics and decision-making.
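To make the drive-reduction idea concrete, the following is a minimal sketch of a discrete-time reward computed from the internal state alone. It is an illustration under stated assumptions, not the scheme derived in the paper: the function names `drive` and `reward`, the exponents `n` and `m`, and the example set point are all hypothetical choices. The drive is taken to be a distance-like function of the gap between the internal state and its optimal value, and the reward is the reduction in drive produced by a transition.

```python
def drive(state, setpoint, n=4.0, m=2.0):
    """Distance-like drive between an internal state and its set point.

    The exponents n and m are illustrative assumptions; the drive is zero
    only when every internal variable sits exactly at its optimal level.
    """
    total = sum(abs(s_star - s) ** n for s, s_star in zip(state, setpoint))
    return total ** (1.0 / m)


def reward(state, next_state, setpoint, n=4.0, m=2.0):
    """Reward as drive reduction: positive when the transition moves the
    internal state closer to the set point, negative when it moves away."""
    return drive(state, setpoint, n, m) - drive(next_state, setpoint, n, m)


# Hypothetical one-dimensional example: the optimal level is 1.0.
setpoint = [1.0]
toward = reward([3.0], [1.0], setpoint)   # moves onto the set point
away = reward([1.0], [3.0], setpoint)     # moves off the set point
print(toward, away)
```

In this sketch an action that restores the internal state is rewarded by exactly the amount that an action causing the same deviation is penalized, which is the property that lets a standard reinforcement learning agent pursue homeostasis by maximizing reward.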

