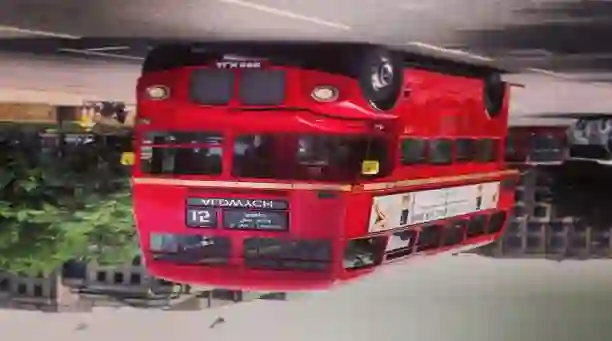

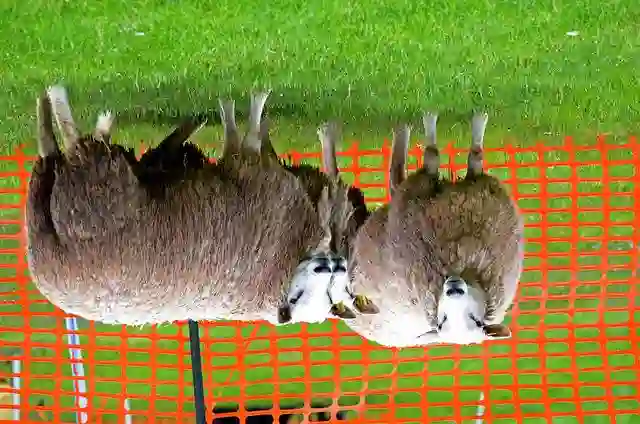

In this work, we study the robustness of CNN+RNN based image captioning systems subjected to adversarial noise. We propose to fool an image captioning system into generating targeted partial captions for an image polluted by adversarial noise, even when the targeted captions are totally irrelevant to the image content. A partial caption is one in which the words at some locations are observed, while the words at other locations are not restricted. This is the first work to study exact adversarial attacks with targeted partial captions. Due to the sequential dependencies among words in a caption, we formulate the generation of adversarial noise for targeted partial captions as a structured output learning problem with latent variables. Both the generalized expectation maximization algorithm and structural SVMs with latent variables are then adopted to optimize the problem. The proposed methods generate very successful attacks on three popular CNN+RNN based image captioning models. Furthermore, the proposed attack methods are used to understand the inner mechanism of image captioning systems, providing guidance to further improve automatic image captioning systems towards human captioning.
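To make the notion of a partial caption concrete, the following is a minimal Python sketch and not the method of this work. It assumes a hypothetical toy vocabulary, marks unrestricted (latent) positions with `None`, and uses a uniform stand-in function `uniform_prob` in place of a real CNN+RNN captioning model. It shows how the probability of a partial caption can be obtained by summing over all assignments of the latent words, which is feasible by brute force only at toy scale.

```python
from itertools import product

# Hypothetical toy vocabulary; a real captioning model has thousands of words.
VOCAB = ["a", "dog", "cat", "runs"]

# A partial caption: observed words are given, None marks an unrestricted position.
partial = ["a", None, "runs"]


def matches(caption, partial):
    """True if the caption agrees with the partial caption at every observed position."""
    return len(caption) == len(partial) and all(
        p is None or p == w for w, p in zip(caption, partial)
    )


def completions(partial, vocab):
    """Enumerate every full caption consistent with the partial caption."""
    slots = [vocab if p is None else [p] for p in partial]
    return [list(c) for c in product(*slots)]


def marginal_prob(partial, vocab, caption_prob):
    """Sum the model probability over all assignments of the latent words."""
    return sum(caption_prob(c) for c in completions(partial, vocab))


def uniform_prob(caption):
    """Stand-in for a captioning model (assumption): uniform over fixed-length captions."""
    return 1.0 / len(VOCAB) ** len(caption)


if __name__ == "__main__":
    print(matches(["a", "dog", "runs"], partial))
    print(len(completions(partial, VOCAB)))
    print(marginal_prob(partial, VOCAB, uniform_prob))
```

In an attack setting, the noise added to the image would be chosen to raise this marginal probability for the targeted partial caption. Because exhaustive enumeration grows exponentially with the number of latent positions, it does not scale, which is consistent with the use of latent-variable optimization methods described above.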
