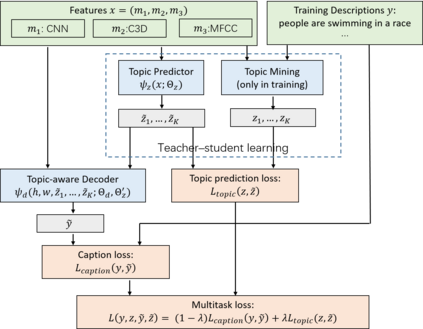

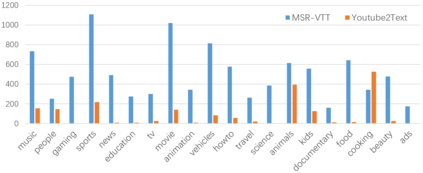

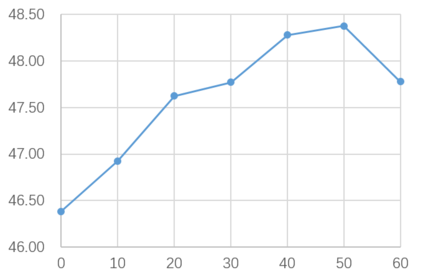

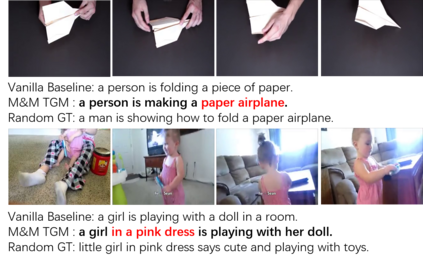

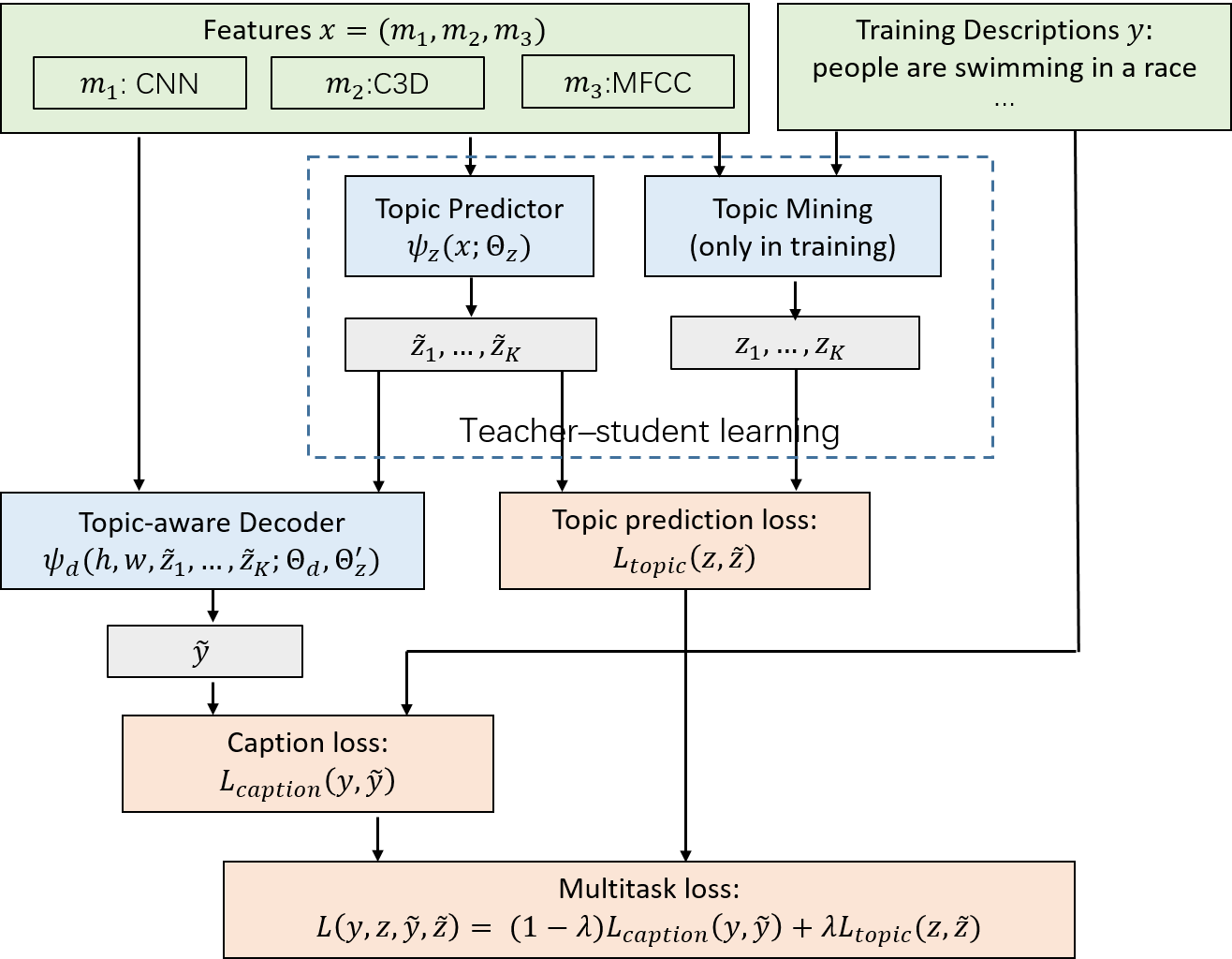

The topic diversity of open-domain videos leads to a wide variety of vocabularies and linguistic expressions for describing video content, which makes video captioning even more challenging. In this paper, we propose a unified captioning framework, M&M TGM, which mines multimodal topics from data in an unsupervised fashion and uses these topics to guide the caption decoder. Compared to pre-defined topics, the mined multimodal topics are more semantically and visually coherent and better reflect the topic distribution of videos. We formulate topic-aware caption generation as a multi-task learning problem in which a parallel task, topic prediction, is added alongside the captioning task. For topic prediction, we use the mined topics as the teacher to train a student topic prediction model, which learns to predict latent topics from the multimodal content of videos. Topic prediction provides intermediate supervision to the learning process. For the captioning task, we propose a novel topic-aware decoder that generates more accurate and detailed video descriptions under the guidance of the latent topics. The entire learning procedure is end-to-end and optimizes both tasks simultaneously. Extensive experiments on the MSR-VTT and Youtube2Text datasets demonstrate the effectiveness of the proposed model: M&M TGM not only outperforms prior state-of-the-art methods on multiple evaluation metrics on both benchmark datasets, but also shows better generalization ability.
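The abstract describes a multi-task objective, in which a captioning loss and a topic-prediction loss are optimized jointly, but it does not give the exact loss functions. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes that the caption loss is the mean negative log-likelihood of the ground-truth words. It also assumes that the teacher-student topic supervision is a KL divergence from the mined topic distribution (teacher) to the predicted topic distribution (student). The two losses are combined with a weight `lam`. All function names and the `lam` weighting are hypothetical.

```python
import math
from typing import Sequence


def caption_nll(target_ids: Sequence[int], step_probs: Sequence[Sequence[float]]) -> float:
    """Caption loss (assumed): mean negative log-likelihood of the ground-truth words.

    step_probs[t] is the decoder's probability distribution over the vocabulary at step t.
    """
    nll = [-math.log(step_probs[t][w]) for t, w in enumerate(target_ids)]
    return sum(nll) / len(nll)


def topic_kl(teacher: Sequence[float], student: Sequence[float], eps: float = 1e-12) -> float:
    """Topic loss (assumed): KL(teacher || student) over K latent topics.

    teacher is the mined topic distribution; student is the predicted one.
    Terms with zero teacher probability contribute nothing and are skipped.
    """
    return sum(p * math.log((p + eps) / (q + eps)) for p, q in zip(teacher, student) if p > 0)


def multitask_loss(target_ids, step_probs, teacher, student, lam: float = 0.5) -> float:
    """Joint objective (hypothetical weighting): caption loss + lam * topic loss."""
    return caption_nll(target_ids, step_probs) + lam * topic_kl(teacher, student)


# Toy example: 3-word vocabulary, 2 decoding steps, 3 topics.
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
loss = multitask_loss([0, 1], probs, teacher=[0.6, 0.3, 0.1], student=[0.5, 0.3, 0.2])
print(f"joint loss: {loss:.4f}")
```

Because both terms are differentiable with respect to the model outputs, a single backward pass on the summed loss updates the caption decoder and the topic predictor together. This matches the abstract's description of end-to-end, simultaneous optimization of both tasks.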

翻译:开放式视频的多样化主题导致在描述视频内容时出现各种词汇和语言表达方式,从而使视频字幕的任务更具挑战性。在本文中,我们提出一个统一的标题框架,即M &M TGM(M&M TGM),从数据中以不受监督的方式将多式专题从数据中挖掘出来,并指导标题解码器与这些专题的调码器。与预先确定的专题相比,所开采的多式联运专题在语义和视觉上更加一致,能够更好地反映视频的专题分布。我们把专题识别字幕生成作为一个多任务学习问题,除了标题任务之外,我们增加了一个平行的任务,即专题预测。对于专题预测任务,我们利用所探测的专题作为教师培训学生专题预测模型,学习从视频的多式内容中预测潜在专题。与预先界定的专题预测为学习过程提供中间监督。关于标题任务,我们提议了一个新颖的专题认知解码解码解码,用潜在主题指南制作更准确和详细的视频描述。整个学习程序是最终到最后的,而不是同时优化MVMV 和MV模式上的拟议数据模式,同时展示了我们关于MV系统的广泛实验的结果。