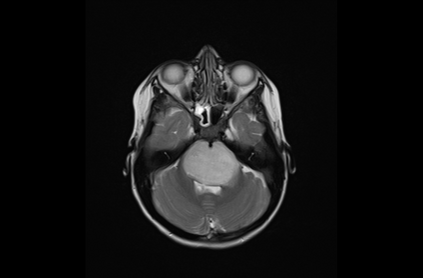

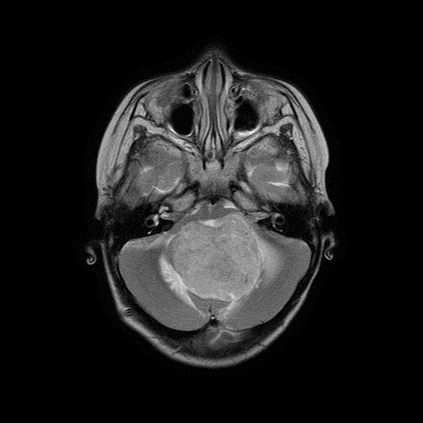

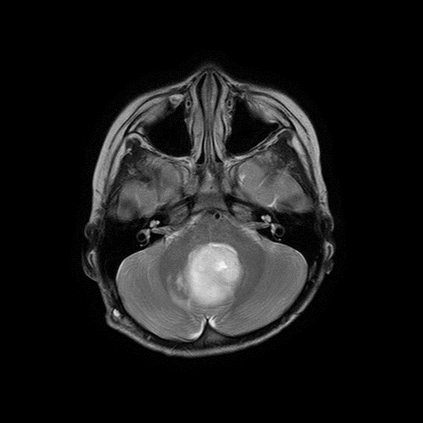

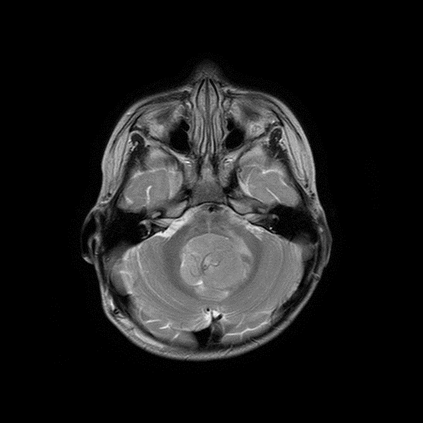

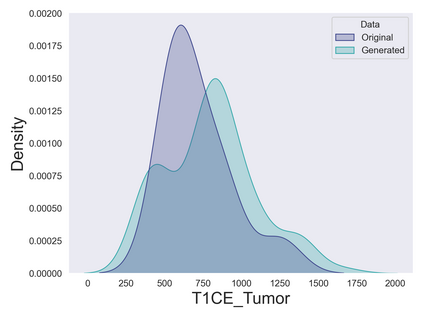

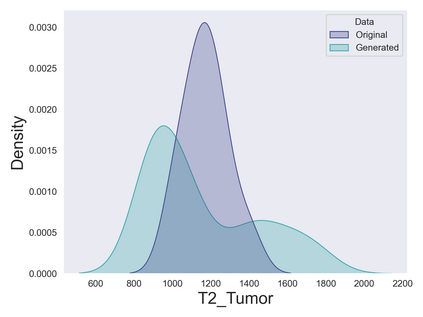

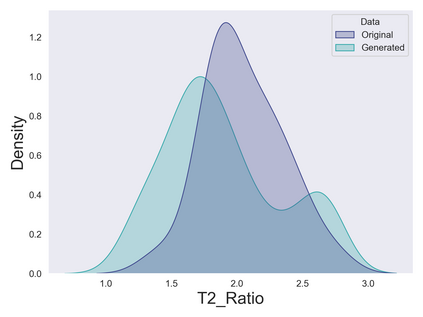

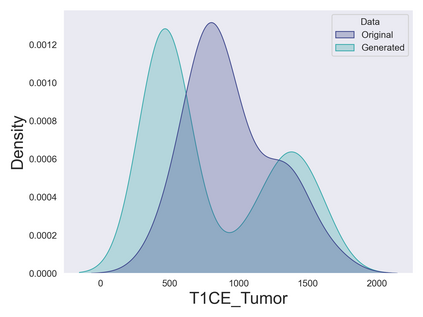

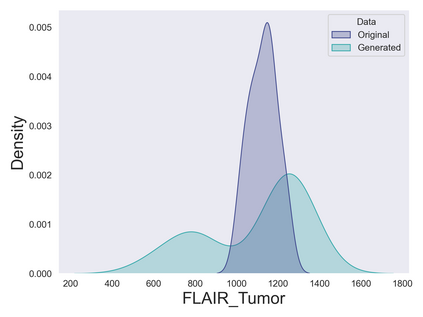

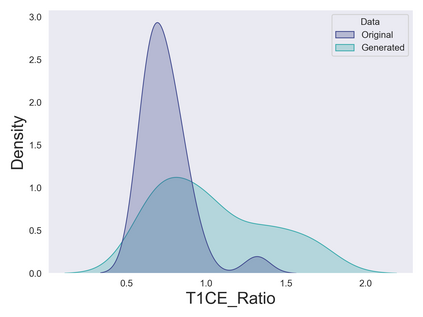

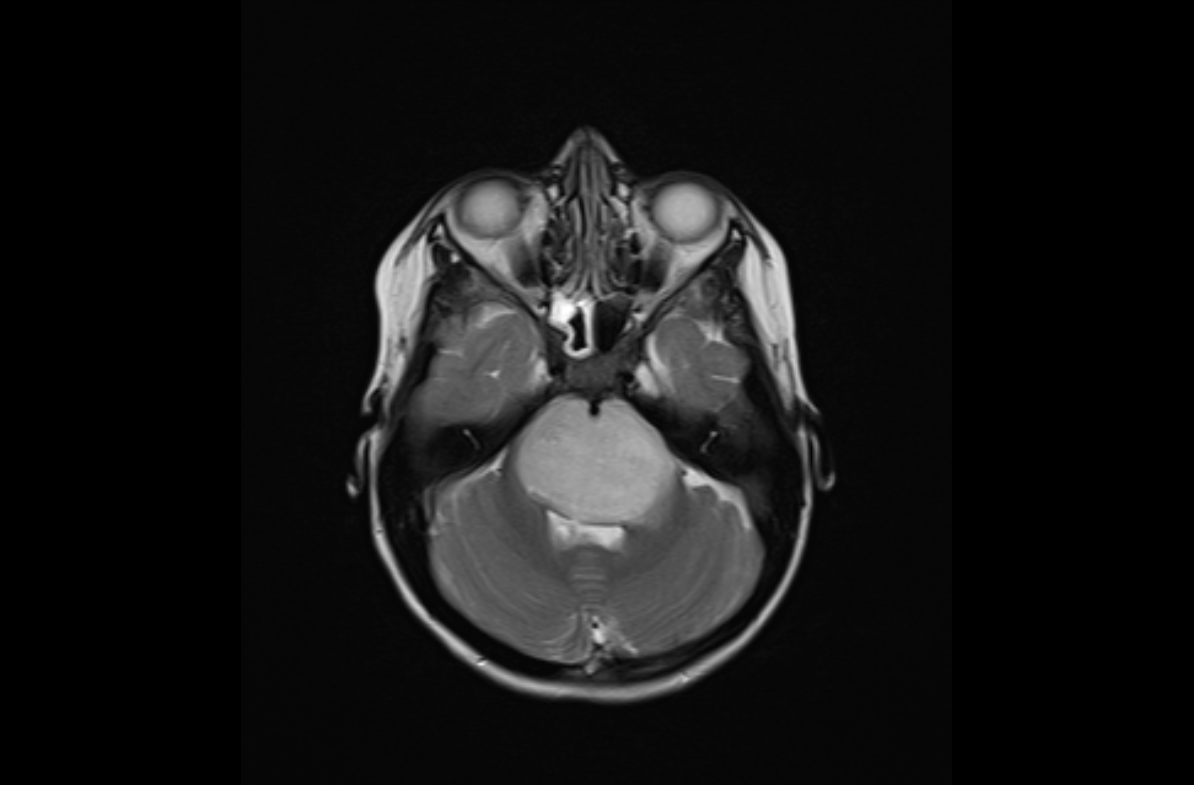

The field of explainable artificial intelligence has seen a growing number of studies and increasing scholarly interest. However, the lack of human-friendly, individualized interpretations of machine learning outcomes has significantly hindered clinicians' acceptance of these methods in research and clinical practice. To address this, our study employs counterfactual explanations to explore "what if?" scenarios in medical research, with the aim of extending our understanding of the magnetic resonance imaging (MRI) features used to diagnose pediatric posterior fossa brain tumors. In our case study, the proposed approach offers a novel way to examine alternative decision-making scenarios that provide personalized, context-specific insights, making it possible to validate predictions and to clarify how they vary under different circumstances. Additionally, we explore the use of counterfactuals for data augmentation and evaluate the feasibility of this alternative approach in our medical research case. The results demonstrate the promising potential of counterfactual explanations to enhance trust in, and acceptance of, AI-driven methods in clinical research.
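
The abstract does not state which counterfactual method or classifier the study uses. As a minimal sketch under simplifying assumptions, the code below illustrates the two ideas it mentions for a hypothetical logistic-regression classifier over standardized, continuous MRI-derived features. A counterfactual answers "what is the smallest change to this patient's features that would flip the prediction?"; for a linear score this has a closed form (the orthogonal projection onto the level set `w·x + b = ±margin`), which stands in here for general optimization-based counterfactual search. The weights `W`, bias `B`, feature vectors, `margin`, and the helper names `counterfactual` and `augment` are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical model parameters over three standardized, continuous features.
# These are illustrative values only, not weights from the study.
W = [-2.0, 1.5, 0.8]
B = 0.3


def logit(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear score w·x + b of the logistic-regression classifier."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of class 1 under the logistic model."""
    return 1.0 / (1.0 + math.exp(-logit(w, b, x)))


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Predicted class: 1 if P(class 1) >= 0.5, i.e. if the logit is >= 0."""
    return 1 if logit(w, b, x) >= 0 else 0


def counterfactual(w: Sequence[float], b: float, x: Sequence[float],
                   target: int, margin: float = 0.5) -> List[float]:
    """Closest point (in L2 distance) to x whose logit equals +margin
    (target=1) or -margin (target=0). Returns x unchanged if the model
    already predicts the target class.

    For a linear score, x' = x + (t - (w·x + b)) / ||w||^2 * w.
    """
    if predict(w, b, x) == target:
        return list(x)
    t = margin if target == 1 else -margin
    norm_sq = sum(wi * wi for wi in w)
    step = (t - logit(w, b, x)) / norm_sq
    return [xi + step * wi for xi, wi in zip(x, w)]


def augment(w: Sequence[float], b: float, X: Sequence[Sequence[float]],
            y: Sequence[int]) -> Tuple[List[List[float]], List[int]]:
    """Append one counterfactual per sample, labelled with the class the
    model assigns to it (the flipped prediction, not a verified ground truth)."""
    new_X = [list(xi) for xi in X]
    new_y = list(y)
    for xi in X:
        target = 1 - predict(w, b, xi)
        new_X.append(counterfactual(w, b, xi, target))
        new_y.append(target)
    return new_X, new_y


# Usage: a hypothetical sample that the model assigns to class 0.
x = [1.0, 0.2, 0.0]
x_cf = counterfactual(W, B, x, target=1)
print("original class:", predict(W, B, x), "counterfactual class:", predict(W, B, x_cf))
print("feature changes:", [round(c - o, 3) for o, c in zip(x, x_cf)])
```

The per-feature changes are the "what if?" explanation: they show which features would have to move, and in which direction, for the model to change its decision. Labelling augmented samples with the model's own flipped prediction is a design choice of this sketch; in a clinical setting such synthetic samples would need expert review before being treated as training data, and binary or bounded features would need additional constraints that this linear projection does not enforce.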

翻译:暂无翻译