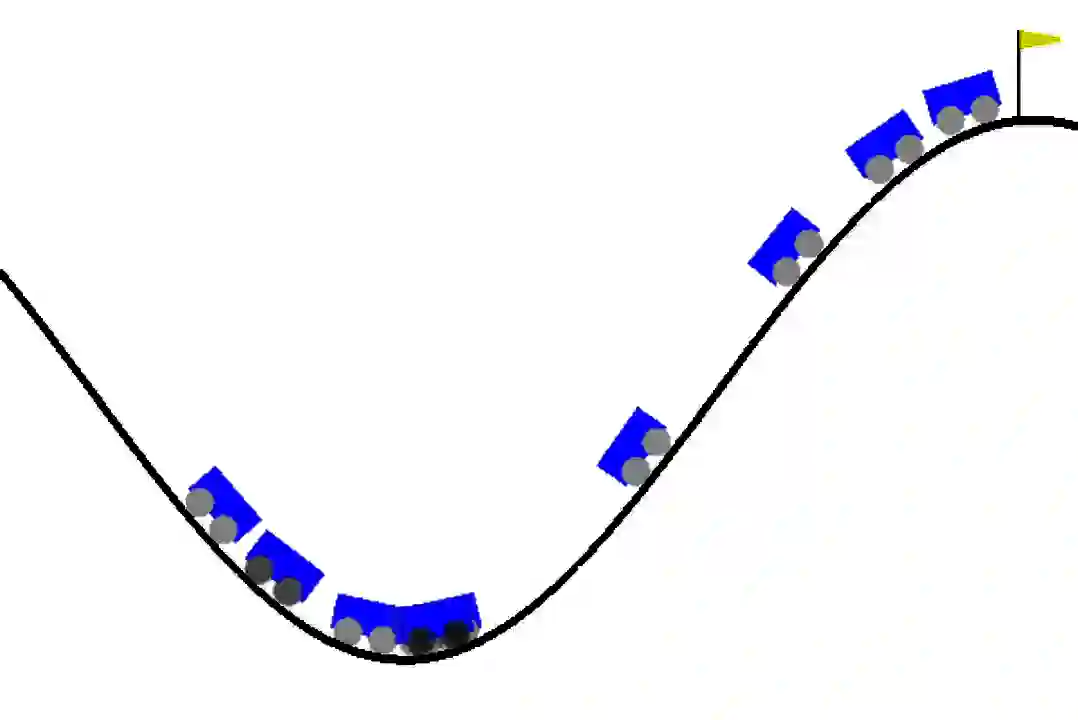

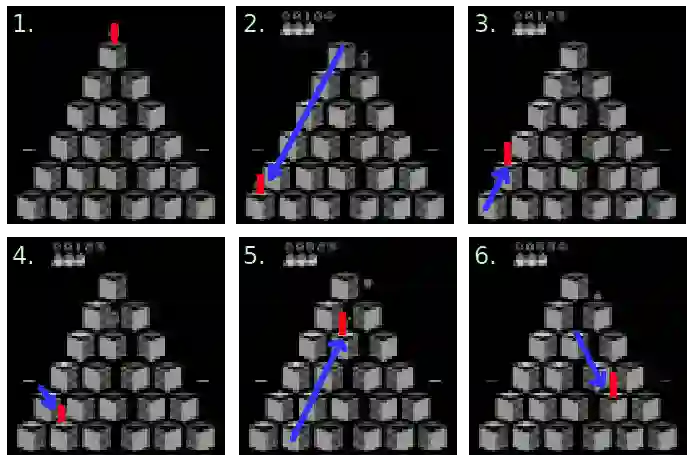

Reinforcement learning is a powerful approach for learning behaviour through interaction with an environment. However, behaviours are usually learned in a purely reactive fashion, where an appropriate action is selected based on the current observation. In this form, it is difficult for the agent to learn when a new decision is actually necessary. This makes learning inefficient, especially in environments that require varying degrees of fine and coarse control. To address this, we propose a proactive setting in which the agent not only selects an action in a state but also decides how long to commit to that action. Our TempoRL approach introduces skip connections between states and learns a skip-policy for repeating the same action along these skips. We demonstrate the effectiveness of TempoRL on a variety of traditional and deep RL environments, showing that our approach is capable of learning successful policies up to an order of magnitude faster than vanilla Q-learning.
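To make the idea of a skip-policy concrete, the following is a minimal tabular sketch and not the authors' implementation. It assumes a hypothetical 1-D corridor environment, an epsilon-greedy behaviour policy, and a discounted multi-step target for the skip values; the abstract specifies none of these, and all names (`step`, `train`, `q_act`, `q_skip`, `MAX_SKIP`) are illustrative. The agent first picks an action, then picks how many times to repeat it, and keeps one value table for each of the two decisions.

```python
import random

# Hypothetical toy environment (an assumption, not from the paper):
# a corridor of states 0..N_STATES-1 with the goal at the right end.
N_STATES = 12
ACTIONS = (-1, +1)        # move left, move right
MAX_SKIP = 4              # longest commitment, in steps
GAMMA, ALPHA, EPS = 0.95, 0.5, 0.1


def step(state, action):
    """Apply one move; return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else -0.01), done


def eps_greedy(values, rng):
    """Pick a random index with probability EPS, else a greedy one."""
    if rng.random() < EPS:
        return rng.randrange(len(values))
    best = max(values)
    return rng.choice([i for i, v in enumerate(values) if v == best])


def train(episodes=300, seed=0, max_steps=500):
    rng = random.Random(seed)
    # q_act[s][a]: value of taking action a in state s.
    q_act = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    # q_skip[s][a][j]: value of repeating action a for j + 1 steps from s.
    q_skip = [[[0.0] * MAX_SKIP for _ in ACTIONS] for _ in range(N_STATES)]

    for _episode in range(episodes):
        s, done, steps = 0, False, 0
        while not done and steps < max_steps:
            a = eps_greedy(q_act[s], rng)        # what to do
            j = eps_greedy(q_skip[s][a], rng)    # how long to commit
            start, ret, disc = s, 0.0, 1.0
            for _repeat in range(j + 1):
                s2, r, done = step(s, ACTIONS[a])
                # Ordinary one-step Q-learning update for the action values.
                target = r + (0.0 if done else GAMMA * max(q_act[s2]))
                q_act[s][a] += ALPHA * (target - q_act[s][a])
                ret += disc * r
                disc *= GAMMA
                s = s2
                steps += 1
                if done:
                    break
            # Assumed skip update: discounted return collected along the
            # skip, bootstrapped from the action values where it ended.
            target = ret + (0.0 if done else disc * max(q_act[s]))
            q_skip[start][a][j] += ALPHA * (target - q_skip[start][a][j])
    return q_act, q_skip


if __name__ == "__main__":
    q_act, q_skip = train()
    print("action values next to the goal:", q_act[N_STATES - 2])
    print("skip values at the start, moving right:", q_skip[0][1])
```

In this sketch every intermediate transition inside a skip still updates the action values, so committing to an action for several steps does not discard experience. Whether this matches the update scheme used in the paper is not something the abstract confirms.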
