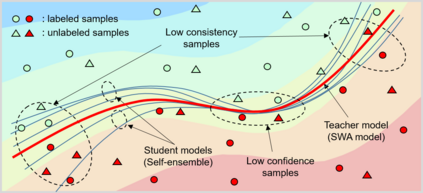

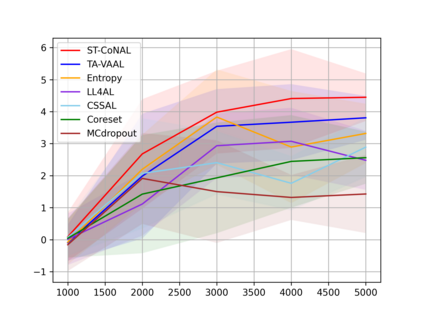

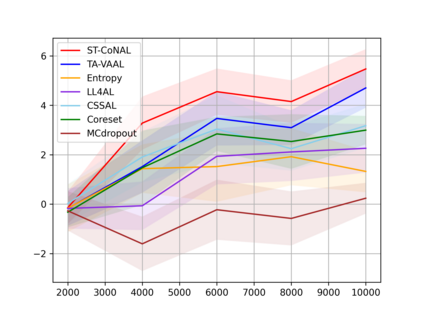

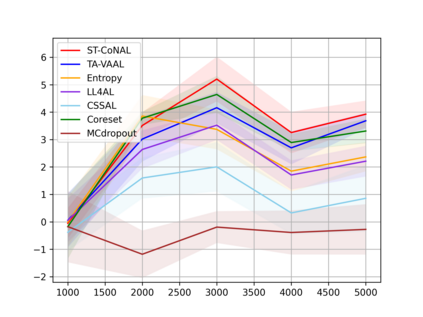

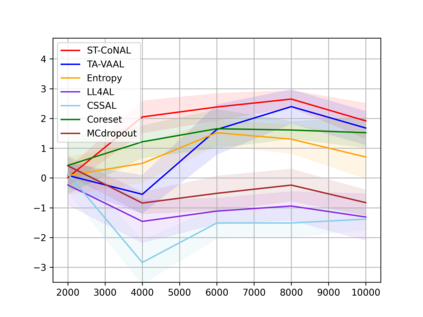

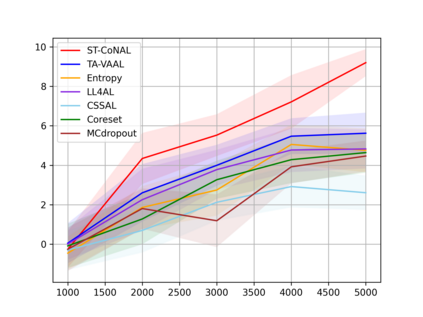

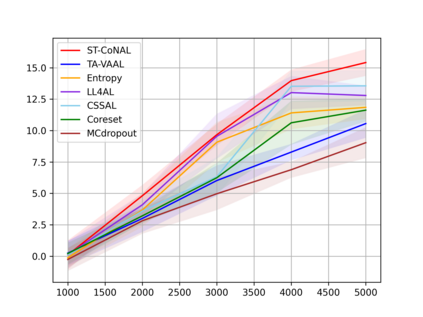

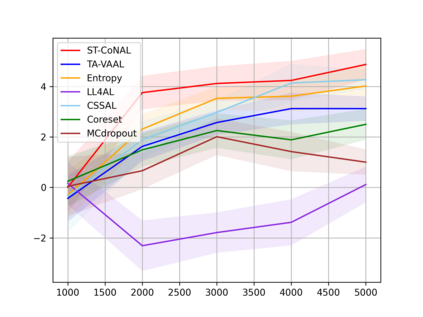

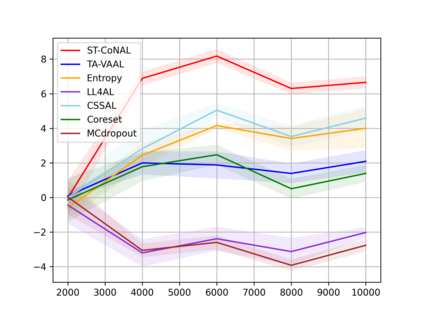

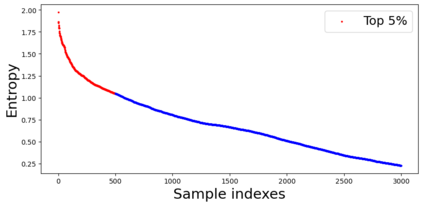

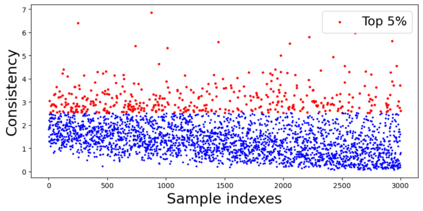

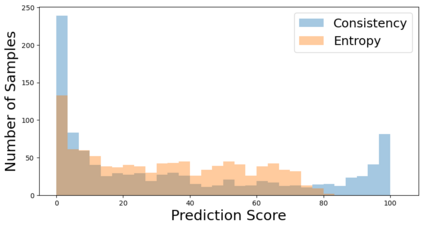

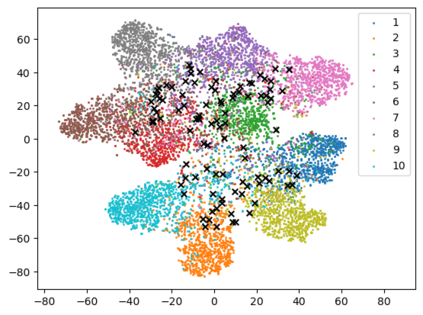

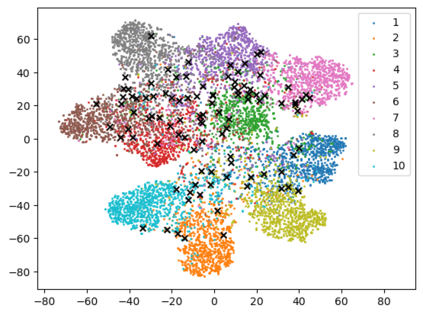

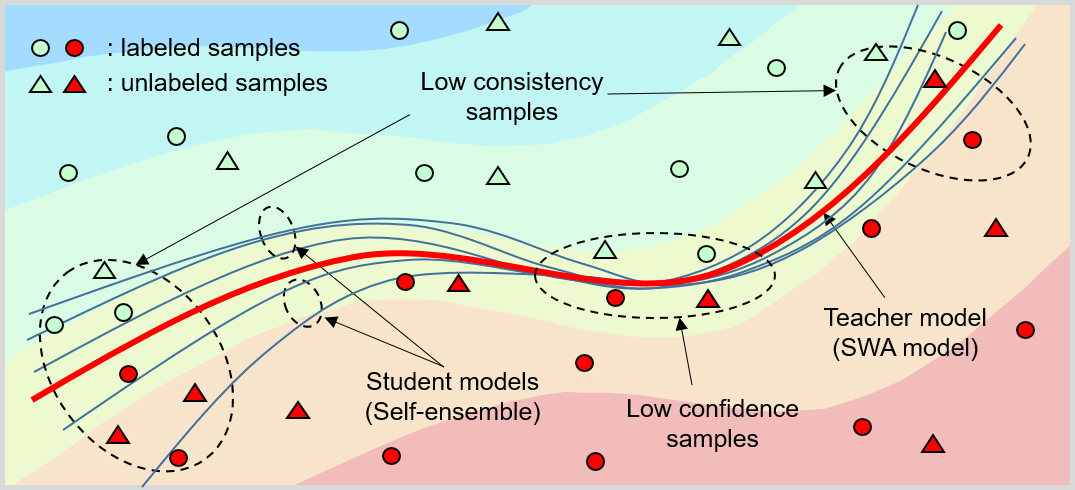

Modern deep learning has achieved great success in various fields. However, it requires labeling huge amounts of data, which is expensive and labor-intensive. Active learning (AL), which identifies the most informative samples to label, is becoming increasingly important for maximizing the efficiency of the training process. Most existing AL methods use only a single, final, fixed model to acquire the samples to be labeled. This strategy may be insufficient because it ignores the structural uncertainty of the model given the training data when acquiring samples. In this study, we propose a novel acquisition criterion based on a temporal self-ensemble generated by conventional stochastic gradient descent (SGD) optimization. The self-ensemble models are obtained by capturing the intermediate network weights produced over SGD iterations. Our acquisition function relies on a consistency measure between the student and teacher models: a fixed number of temporal self-ensemble models serve as the student models, and the teacher model is constructed by averaging the weights of the student models. Using the proposed acquisition criterion, we present an AL algorithm, namely student-teacher consistency-based AL (ST-CoNAL). Experiments on image classification tasks with the CIFAR-10, CIFAR-100, Caltech-256, and Tiny ImageNet datasets demonstrate that ST-CoNAL achieves significantly better performance than existing acquisition methods. Furthermore, extensive experiments show the robustness and effectiveness of our method.
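The student-teacher acquisition loop can be illustrated in a few dozen lines. This is a minimal, hypothetical sketch, not the authors' implementation. It assumes a toy linear softmax classifier in place of a deep network, treats weight copies taken every `snapshot_every` epochs of plain SGD as the students, builds the teacher by element-wise weight averaging, and scores each unlabeled sample by the mean KL divergence KL(teacher ‖ student) over the students. The helper names (`sgd_snapshots`, `average_weights`, `consistency_score`, `acquire`), the snapshot schedule, and the choice of KL divergence as the consistency measure are all assumptions. The exact measure is not specified here.

```python
import math
import random

# Minimal sketch (assumed setup): a linear softmax classifier stands in for the
# deep network. Parameters are (W, b) with W of shape C x D and b of length C.


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(params, x):
    """Class probabilities of the linear model for one input vector x."""
    W, b = params
    return softmax([sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)])


def sgd_snapshots(data, num_classes, dim, lr=0.1, epochs=20, snapshot_every=5, seed=0):
    """Train with plain SGD on cross-entropy and copy the weights every
    `snapshot_every` epochs; the copies act as the temporal self-ensemble."""
    rng = random.Random(seed)
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    data = list(data)  # local copy so shuffling does not mutate the caller's list
    snapshots = []
    for epoch in range(1, epochs + 1):
        rng.shuffle(data)
        for x, y in data:
            p = predict((W, b), x)
            for c in range(num_classes):
                g = p[c] - (1.0 if c == y else 0.0)  # dLoss/dlogit_c for cross-entropy
                for d in range(dim):
                    W[c][d] -= lr * g * x[d]
                b[c] -= lr * g
        if epoch % snapshot_every == 0:
            snapshots.append(([row[:] for row in W], b[:]))
    return snapshots


def average_weights(snapshots):
    """Teacher: element-wise mean of the student weights."""
    n = len(snapshots)
    num_classes, dim = len(snapshots[0][0]), len(snapshots[0][0][0])
    W = [[sum(s[0][c][d] for s in snapshots) / n for d in range(dim)]
         for c in range(num_classes)]
    b = [sum(s[1][c] for s in snapshots) / n for c in range(num_classes)]
    return W, b


def kl(p, q):
    """KL(p || q) for strictly positive distributions such as softmax outputs."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def consistency_score(students, teacher, x):
    """Assumed score: mean KL(teacher || student); higher means less consistent."""
    pt = predict(teacher, x)
    return sum(kl(pt, predict(s, x)) for s in students) / len(students)


def acquire(students, teacher, pool, k):
    """Indices of the k pool samples with the largest student-teacher inconsistency."""
    scores = [consistency_score(students, teacher, x) for x in pool]
    return sorted(range(len(pool)), key=lambda i: scores[i], reverse=True)[:k]


# Usage on hypothetical toy data: two labeled classes, four unlabeled points.
labeled = [([1.0, 0.0], 0), ([0.0, 1.0], 1), ([0.9, 0.1], 0), ([0.1, 0.9], 1)]
pool = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [0.6, 0.4]]
students = sgd_snapshots(labeled, num_classes=2, dim=2)
teacher = average_weights(students)
picked = acquire(students, teacher, pool, k=2)
print("indices to label:", picked)
```

Because this toy model is linear, averaging its weights is equivalent to averaging its logits. For a deep network, which is the setting described above, the weight-averaged teacher is a genuinely different model from a prediction-level ensemble of the students.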
