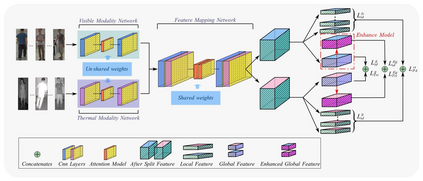

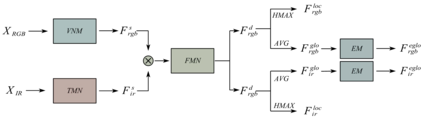

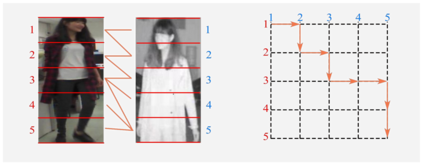

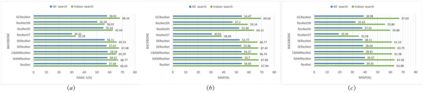

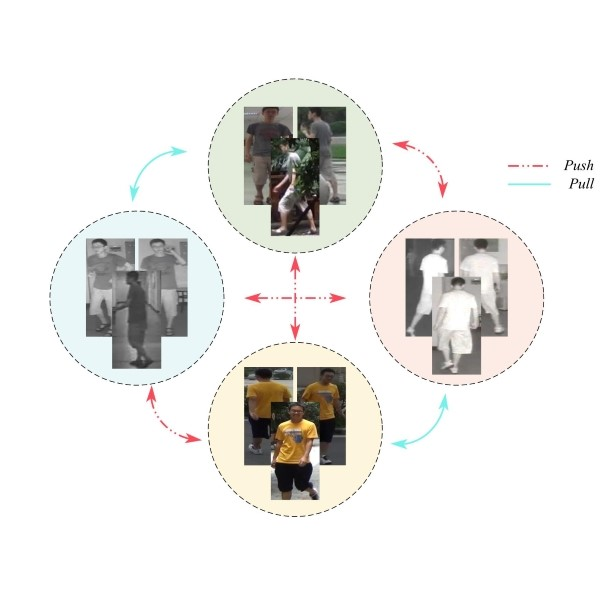

Beyond the recognition difficulty caused by human posture and occlusion, the Visible-Thermal cross-modal person re-identification (VT-ReID) task must also resolve the modal differences introduced by different imaging systems. In this paper, we propose the Cross-modal Local Shortest Path and Global Enhancement (CM-LSP-GE) modules, a two-stream network based on joint learning of local and global features. The core idea is to use local feature alignment to address the occlusion problem and to address the modal difference by strengthening global features. First, an attention-based two-stream ResNet network is designed to extract dual-modality features and map them into a unified feature space. Second, to handle cross-modal pose variation and occlusion, each image is cut horizontally into several equal parts to obtain local features, and the shortest path between the local features of two images is used to achieve fine-grained local feature alignment. Third, a batch normalization enhancement module applies an enhancement strategy to the global features, which enlarges the differences between classes. A multi-granularity loss fusion strategy further improves the performance of the algorithm. Finally, a joint learning mechanism of local and global features is used to improve cross-modal person re-identification accuracy. Experimental results on two typical datasets show that our model clearly outperforms most state-of-the-art methods. In particular, on the SYSU-MM01 dataset, our model achieves gains of 2.89% and 7.96% in Rank-1 and mAP, respectively, in the all-search mode. The source code will be released soon.
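
The local alignment step described in the abstract can be illustrated with a small sketch. This is not the authors' released code: the function names (`horizontal_stripes`, `local_distance_matrix`, `shortest_path_distance`), the average pooling within each stripe, the Euclidean metric, and the `tanh(d / 2)` normalization are all assumptions chosen for illustration. The sketch only shows the general idea of splitting a feature map into equal horizontal parts and scoring two images by the cheapest monotone path through their part-to-part distance matrix.

```python
import math


def horizontal_stripes(rows, num_parts):
    """Average-pool H row vectors into num_parts equal horizontal stripes.

    `rows` is a list of H feature vectors (one per image row, already pooled
    over width). Assumption: H is divisible by num_parts.
    """
    if len(rows) % num_parts != 0:
        raise ValueError("number of rows must be divisible by num_parts")
    size = len(rows) // num_parts
    dim = len(rows[0])
    parts = []
    for p in range(num_parts):
        stripe = rows[p * size:(p + 1) * size]
        parts.append([sum(r[c] for r in stripe) / size for c in range(dim)])
    return parts


def local_distance_matrix(parts_a, parts_b):
    """Pairwise Euclidean distances, squashed into [0, 1) with tanh(d / 2).

    The normalization is an illustrative assumption, not taken from the paper.
    """
    return [[math.tanh(math.dist(a, b) / 2.0) for b in parts_b] for a in parts_a]


def shortest_path_distance(dist):
    """Cost of the cheapest path from the top-left to the bottom-right cell,
    moving only right or down, computed by dynamic programming."""
    m, n = len(dist), len(dist[0])
    cost = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            if i == 0 and j == 0:
                best_prev = 0.0
            elif i == 0:
                best_prev = cost[i][j - 1]
            elif j == 0:
                best_prev = cost[i - 1][j]
            else:
                best_prev = min(cost[i - 1][j], cost[i][j - 1])
            cost[i][j] = best_prev + dist[i][j]
    return cost[m - 1][n - 1]


if __name__ == "__main__":
    # Toy "feature maps": 4 rows of 2-dimensional features per image.
    visible = [[0.0, 2.0], [2.0, 4.0], [4.0, 0.0], [6.0, 2.0]]
    thermal = [[1.0, 3.0], [1.0, 3.0], [5.0, 1.0], [5.0, 1.0]]
    parts_v = horizontal_stripes(visible, 2)
    parts_t = horizontal_stripes(thermal, 2)
    print(shortest_path_distance(local_distance_matrix(parts_v, parts_t)))
```

Because the path may move right or down, a body part in one image can be matched to a part at a different height in the other image, which is what makes this kind of alignment tolerant of pose shifts and partial occlusion. In a trained model the row vectors would come from the shared feature space of the two-stream backbone rather than from hand-written lists.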
