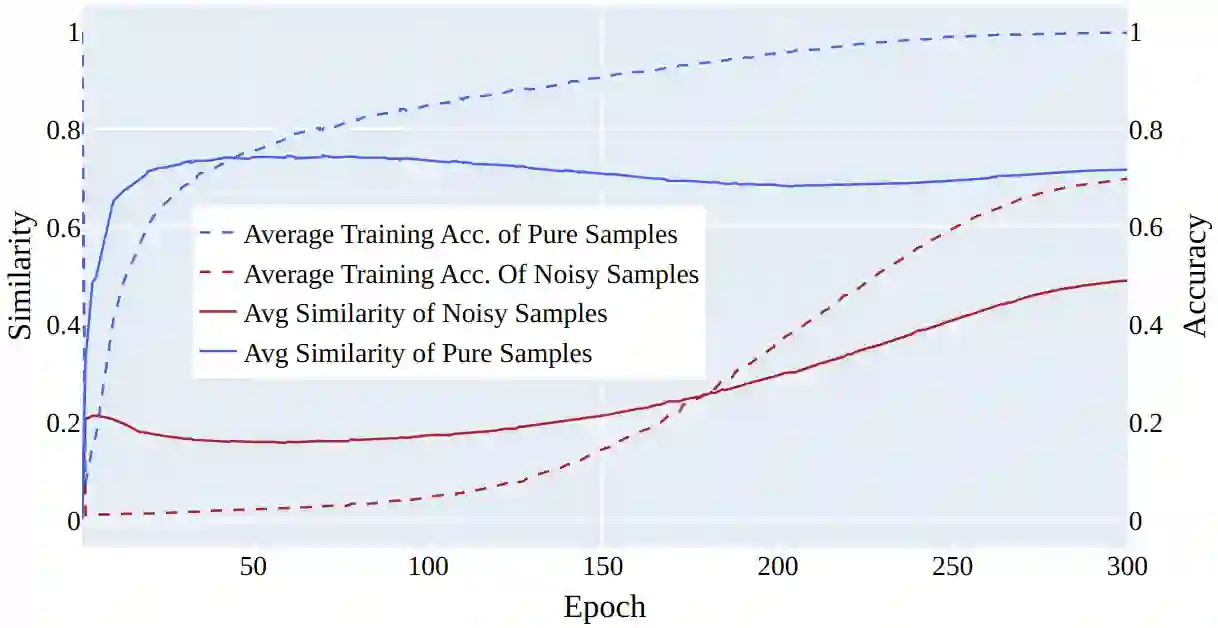

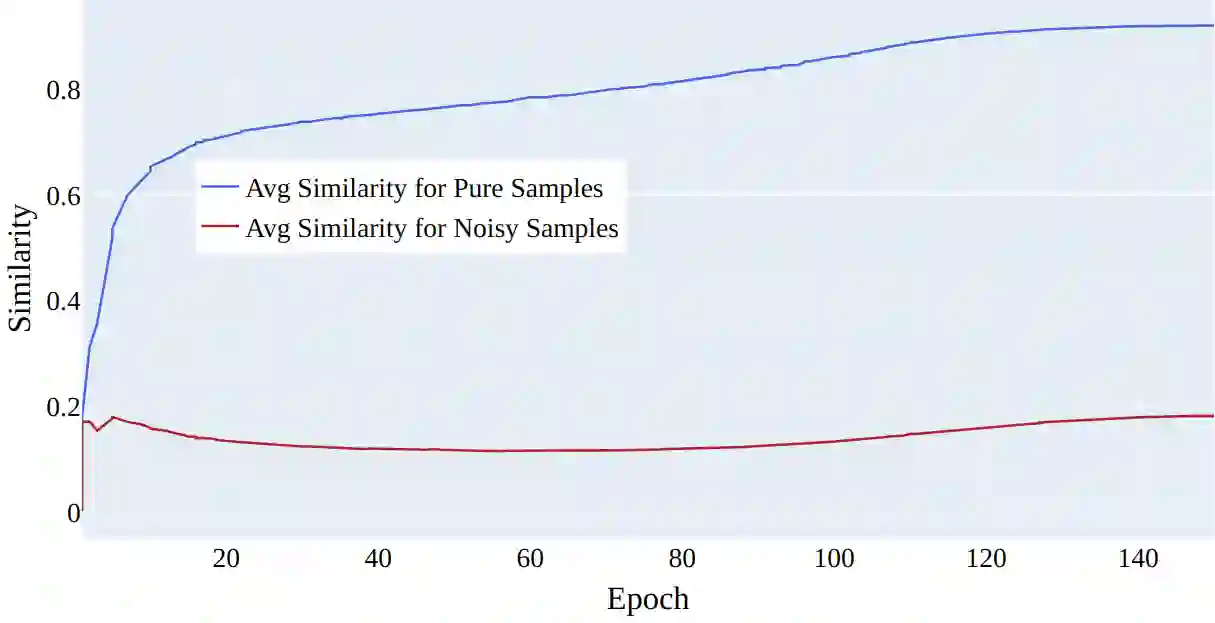

In this paper, we propose a new approach to training machine learning models in the presence of noisy labels. Our method combines two components: the distance of each sample to the class centroids in the latent space, and a discounting strategy that reduces the importance of samples far from all class centroids (i.e., outliers). The approach rests on the observation that samples lying farther from their own class centroid in the early stages of training are more likely to carry a noisy label. We evaluate the method through extensive experiments on several popular benchmark datasets. The results show that our approach outperforms the state of the art, with significant improvements in classification accuracy when the dataset contains noisy labels.
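To make the idea concrete, the following is a minimal NumPy sketch of centroid-based sample weighting. It is not the paper's implementation: the abstract does not give the weighting formula, so the exponential discount, the median-based distance scale, the `temperature` parameter, and the function names `class_centroids` and `sample_weights` are all assumptions chosen for illustration. The sketch also assumes every class has at least one labeled sample.

```python
import numpy as np


def class_centroids(z, y, num_classes):
    """Mean latent vector of the samples currently labeled with each class.

    Assumes every class in range(num_classes) has at least one sample.
    """
    return np.stack([z[y == c].mean(axis=0) for c in range(num_classes)])


def sample_weights(z, y, num_classes, temperature=1.0):
    """Per-sample weights in [0, 1] from distances to class centroids.

    z: (n_samples, dim) latent representations
    y: (n_samples,) integer labels, possibly noisy
    The exponential form below is an illustrative assumption, not the
    formula used in the paper.
    """
    centroids = class_centroids(z, y, num_classes)

    # Euclidean distance from every sample to every centroid:
    # shape (n_samples, num_classes).
    dists = np.linalg.norm(z[:, None, :] - centroids[None, :, :], axis=2)

    d_own = dists[np.arange(len(y)), y]  # distance to the labeled class
    d_min = dists.min(axis=1)            # distance to the nearest class

    # Assumed normalisation: the median own-class distance sets the scale,
    # so a typical sample has d_own / scale close to 1.
    scale = temperature * np.median(d_own)

    # Samples far from their own centroid are treated as likely mislabeled.
    label_weight = np.exp(-d_own / scale)
    # Samples far from all centroids are discounted as outliers.
    outlier_weight = np.exp(-d_min / scale)

    return label_weight * outlier_weight
```

In a training loop, weights like these would typically multiply the per-sample loss during the early epochs, so that likely-noisy samples and outliers contribute less to the gradient.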
