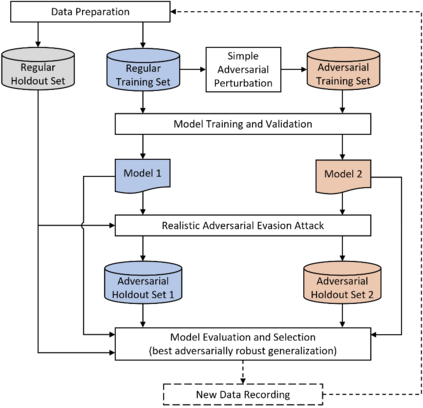

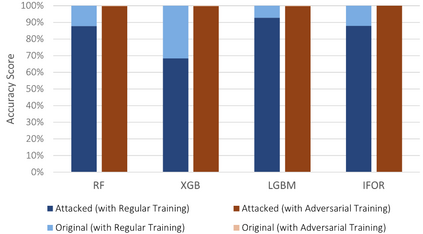

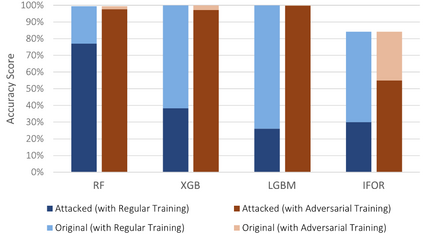

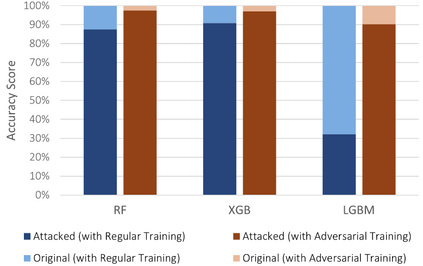

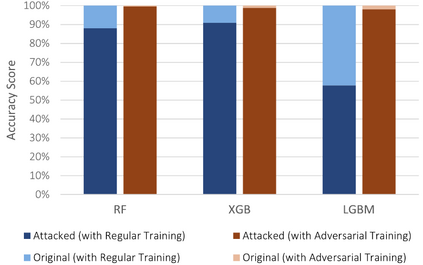

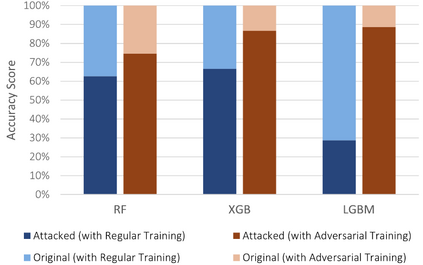

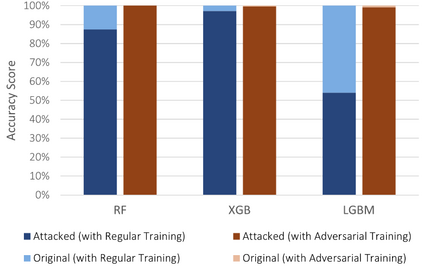

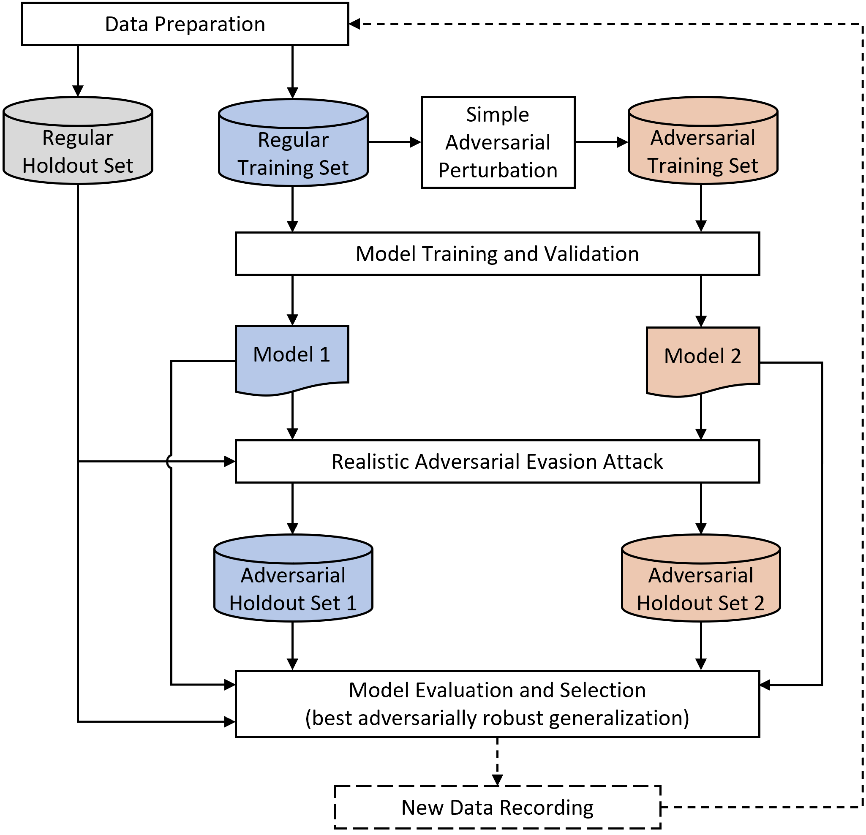

The Internet of Things (IoT) faces tremendous security challenges. Machine learning models can be used to tackle the growing number of cyber-attack variations targeting IoT systems, but the increasing threat posed by adversarial attacks reinforces the need for reliable defense strategies. This work describes the types of constraints required for an adversarial cyber-attack example to be realistic and proposes a methodology for a trustworthy adversarial robustness analysis with a realistic adversarial evasion attack vector. The proposed methodology was used to evaluate three supervised algorithms, Random Forest (RF), Extreme Gradient Boosting (XGB), and Light Gradient Boosting Machine (LGBM), and one unsupervised algorithm, Isolation Forest (IFOR). Constrained adversarial examples were generated with the Adaptative Perturbation Pattern Method (A2PM), and evasion attacks were performed against models created with regular and adversarial training. Even though RF was the least affected model in binary classification, XGB consistently achieved the highest accuracy in multi-class classification. The obtained results evidence the inherent susceptibility of tree-based algorithms and ensembles to adversarial evasion attacks and demonstrate the benefits of adversarial training and a security-by-design approach for a more robust IoT network intrusion detection.
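As a rough, hedged illustration of what "realistic" can mean for a tabular network-flow record, the Python sketch below assumes three hypothetical constraint types: a valid value range, integer-only features (such as packet counts), and immutable features an attacker cannot change (such as a protocol identifier). It applies a simple bounded random perturbation, which is a stand-in and not A2PM itself, then measures an evasion rate against an arbitrary detector and builds an adversarially augmented training set. All names (`FeatureConstraint`, `perturb`, `is_valid`, `evasion_rate`, `adversarial_augment`) are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class FeatureConstraint:
    """Hypothetical validity rule for one feature of a network-flow record."""
    low: float              # smallest valid value
    high: float             # largest valid value
    integer: bool = False   # e.g. packet counts must stay whole numbers
    mutable: bool = True    # False for features an attacker cannot alter


def perturb(sample: Sequence[float], constraints: Sequence[FeatureConstraint],
            max_ratio: float, rng: random.Random) -> List[float]:
    """Randomly perturb mutable features while keeping the record valid.

    Each mutable feature is shifted by up to +/- max_ratio of its own
    magnitude, rounded if integer-valued, then clipped to [low, high].
    Integer features are assumed to have integer bounds.
    """
    adv = []
    for value, c in zip(sample, constraints):
        if not c.mutable:
            adv.append(value)  # immutable: copied unchanged
            continue
        new = value + rng.uniform(-max_ratio, max_ratio) * abs(value)
        if c.integer:
            new = round(new)
        adv.append(min(max(new, c.low), c.high))
    return adv


def is_valid(candidate: Sequence[float], original: Sequence[float],
             constraints: Sequence[FeatureConstraint]) -> bool:
    """Check that a candidate record respects every domain constraint."""
    for v, o, c in zip(candidate, original, constraints):
        if not c.low <= v <= c.high:
            return False
        if c.integer and v != int(v):
            return False
        if not c.mutable and v != o:
            return False
    return True


def evasion_rate(detector: Callable[[Sequence[float]], int],
                 attacks: Sequence[Sequence[float]],
                 constraints: Sequence[FeatureConstraint],
                 max_ratio: float, seed: int = 0) -> float:
    """Fraction of attack records the detector labels benign (0)
    after one constrained perturbation each."""
    rng = random.Random(seed)
    evaded = sum(detector(perturb(x, constraints, max_ratio, rng)) == 0
                 for x in attacks)
    return evaded / len(attacks)


def adversarial_augment(X: Sequence[Sequence[float]], y: Sequence[int],
                        constraints: Sequence[FeatureConstraint],
                        max_ratio: float, seed: int = 0):
    """Adversarial-training data: the original records plus one perturbed
    copy of each, keeping the true label of the original."""
    rng = random.Random(seed)
    X_adv = [perturb(x, constraints, max_ratio, rng) for x in X]
    return list(X) + X_adv, list(y) + list(y)
```

For example, with a toy detector that flags any flow above a packet-count threshold, `evasion_rate` reports how many attack records slip under it after a constrained perturbation, and retraining a model on the output of `adversarial_augment` is the corresponding adversarial-training step. Because `is_valid` rejects out-of-range, non-integral, or tampered immutable values, every generated example stays a plausible record rather than an arbitrary point in feature space.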