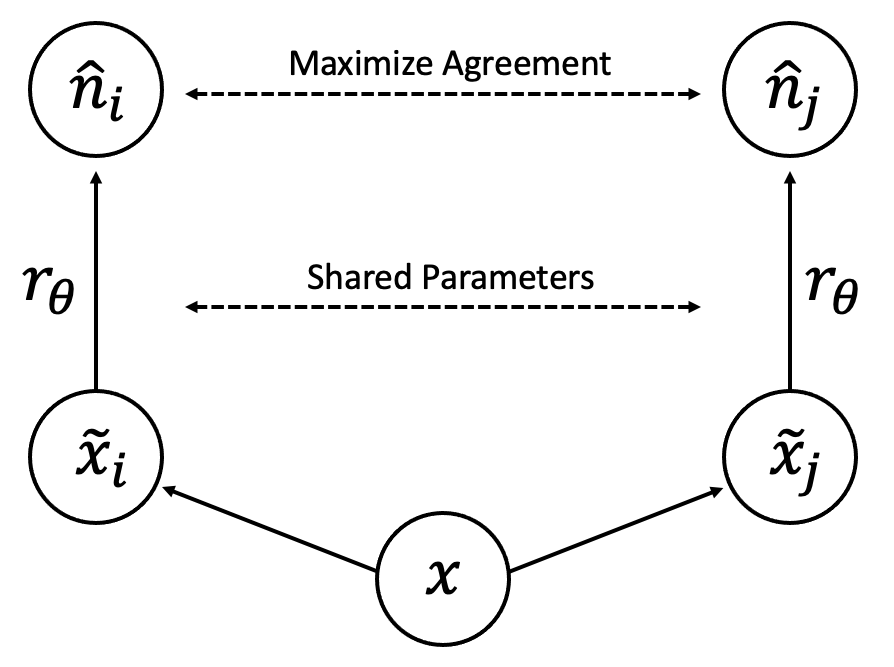

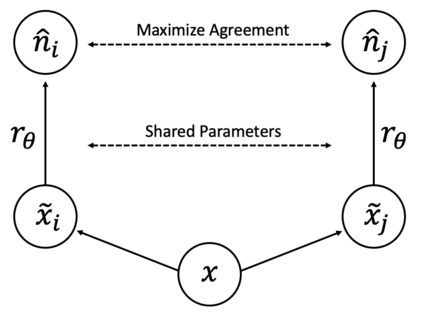

This paper is concerned with contrastive learning (CL) for low-level image restoration and enhancement tasks. We propose residual contrastive learning (RCL), a new label-efficient learning paradigm based on residuals, and derive from it an unsupervised visual representation learning framework suited to low-level vision tasks with noisy inputs. Whereas supervised image reconstruction minimizes residual terms directly, RCL instead connects residuals to CL by defining a novel instance discrimination pretext task that uses residuals as the discriminative feature. This formulation mitigates the severe task misalignment, present in existing CL frameworks, between instance discrimination pretext tasks and downstream image reconstruction tasks. Experimentally, we find that RCL learns robust and transferable representations that improve performance on various downstream tasks, such as denoising and super-resolution, compared with recent self-supervised methods designed specifically for noisy inputs. Additionally, our unsupervised pre-training can significantly reduce annotation costs whilst remaining competitive with fully supervised image reconstruction.
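As a rough illustration of what "using residuals as the discriminative feature" in an instance discrimination task could look like, the sketch below applies a standard InfoNCE loss to residual vectors. The abstract does not say how residuals are computed, how views are formed, or which contrastive loss RCL uses, so the `residual` definition (noisy input minus a reconstruction), the two-view setup, the temperature, and the function names are all hypothetical assumptions. This is a generic contrastive-learning sketch, not the authors' implementation.

```python
import numpy as np


def residual(noisy, reconstruction):
    # HYPOTHETICAL: residual = noisy input minus a network's reconstruction.
    # The abstract does not define the residual; this is illustrative only.
    return noisy - reconstruction


def info_nce(z_a, z_b, temperature=0.1):
    """Generic InfoNCE instance-discrimination loss (not necessarily RCL's).

    z_a, z_b: (N, D) arrays; row i of z_a and row i of z_b are two views of
    instance i (the positive pair); the other rows of z_b act as negatives.
    """
    # L2-normalise rows so the dot product is a cosine similarity.
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature            # (N, N) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    log_softmax = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with each instance's own view (the diagonal) as target.
    return -np.mean(np.diag(log_softmax))


# Toy usage: 4 flattened 8x8 "noisy patches" and two HYPOTHETICAL denoisers
# that shrink the input by different amounts, giving two residual views.
rng = np.random.default_rng(0)
noisy = rng.normal(size=(4, 64))
recon_a = 0.8 * noisy
recon_b = 0.7 * noisy
loss = info_nce(residual(noisy, recon_a), residual(noisy, recon_b))
print(f"InfoNCE loss on residual features: {loss:.4f}")
```

Treating the diagonal as the target is what makes this an instance discrimination task: each residual must pick out the other view of its own instance from among all residuals in the batch.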

翻译:本文涉及低层次图像恢复和提升任务的对比性学习(CL) 。 我们提出一个新的标签效率高的学习模式,其基础是残留物、残余对比性学习(RCL),并产生一个不受监督的视觉代表学习框架,适合于低层次的视觉任务,适合使用吵闹的投入。 监督的图像重建旨在直接将残余物最小化,而RCL则通过界定新颖的事例歧视借口任务,将残余物作为歧视特征,从而在残余物与CL之间建立联系。 我们的表述减轻了实例歧视借口任务与下游图像重建任务之间的严重任务不匹配。 我们实验地发现,RCL可以学习强健和可转移的表达方式,改善下游任务的业绩,例如脱色和超强分辨率,与最近专门为噪音投入设计的自我监督的方法相比。 此外,我们未经监督的预先培训可以大幅降低批注成本,同时保持完全受监督的图像重建的业绩竞争力。