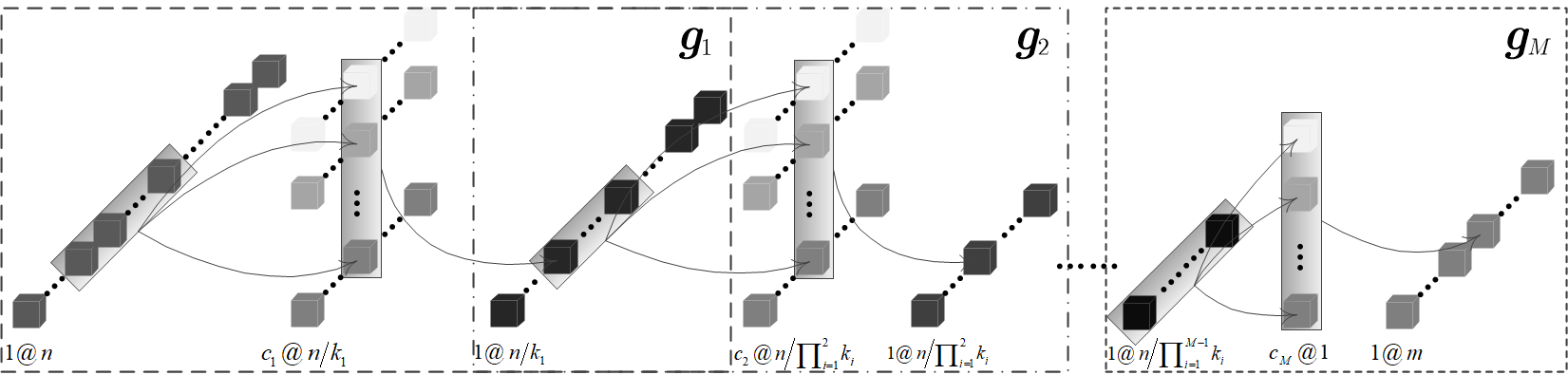

In this paper we present a class of convolutional neural networks (CNNs), called non-overlapping CNNs, to study the approximation capabilities of CNNs. We prove that such networks with a sigmoidal activation function can approximate any continuous function defined on a compact input set to any desired degree of accuracy. This result extends existing results, in which only multilayer feedforward networks were shown to be a class of universal approximators. Evaluations demonstrate the accuracy and efficiency of our result and indicate that the proposed non-overlapping CNNs are less sensitive to noise.
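To make the architecture concrete, here is a minimal sketch of one non-overlapping convolutional layer followed by a linear readout. It assumes that "non-overlapping" means the stride equals the kernel width, so each input element falls in exactly one receptive field. It also uses the logistic function as the sigmoidal activation. The function names (`non_overlapping_conv1d`, `readout`) are hypothetical illustrations, not the authors' implementation.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic sigmoid: one example of a sigmoidal activation."""
    return 1.0 / (1.0 + math.exp(-z))


def non_overlapping_conv1d(x: Sequence[float], kernel: Sequence[float], bias: float) -> List[float]:
    """Apply a single 1-D filter over disjoint windows (stride == kernel size).

    Assumption (hypothetical): "non-overlapping" is modelled as stride equal to
    the kernel width, so every input element feeds exactly one hidden unit.
    """
    k = len(kernel)
    if k == 0 or len(x) % k != 0:
        raise ValueError("input length must be a positive multiple of the kernel size")
    hidden = []
    for start in range(0, len(x), k):
        window = x[start:start + k]
        # Weighted sum of one disjoint patch, then the sigmoidal nonlinearity.
        z = sum(w * v for w, v in zip(kernel, window)) + bias
        hidden.append(sigmoid(z))
    return hidden


def readout(hidden: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear output layer that combines the sigmoidal features."""
    return sum(w * h for w, h in zip(weights, hidden)) + bias


if __name__ == "__main__":
    x = [1.0, -1.0, 0.5, 0.5]
    h = non_overlapping_conv1d(x, kernel=[1.0, 1.0], bias=0.0)
    print(h)                                   # two hidden units, one per disjoint window
    print(readout(h, weights=[2.0, 0.0], bias=0.0))
```

With a kernel of width 2 and an input of length 4, the layer produces two hidden units from the windows `[1.0, -1.0]` and `[0.5, 0.5]`. The windows share no inputs, which is what distinguishes this sketch from an ordinary stride-1 convolution.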


