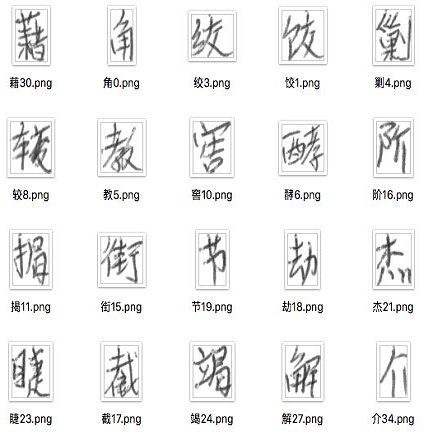

The recurrent neural network (RNN) is well suited to temporal sequences. In this paper, we present a deep RNN with new features and apply it to online handwritten Chinese character recognition. Compared with existing RNN models, the proposed system introduces three innovations. First, we propose a new hidden layer function for RNNs, which we call the Memory Pool Unit (MPU), to learn temporal information better. The MPU has a simple architecture. Second, we present a new RNN architecture with hybrid parameters to increase the expressive capacity of the RNN. In the hybrid-parameter RNN, the parameters change as the iteration proceeds along the temporal dimension. Third, we adapt the network so that the outputs of all layers are stacked to form the network output; stacking the hidden layer states of every layer further increases expressive capacity. Experiments are carried out on the IAHCC-UCAS2016 and CASIA-OLHWDB1.1 datasets. The results show that the hybrid-parameter RNN achieves better recognition performance with higher efficiency (fewer parameters and faster speed), and that the proposed Memory Pool Unit is a simple hidden layer function that obtains competitive recognition results.
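
The third idea, stacking the hidden states of every layer into the network output, can be illustrated with a minimal sketch. The abstract does not specify the MPU or how the states are combined, so this example makes two assumptions: a plain tanh recurrent layer stands in for the MPU, and "stacking" is read as concatenation. All function names, layer sizes, and the input features are hypothetical.

```python
import math
import random


def init_layer(in_dim, hid_dim, rng):
    """Random weights for one plain tanh recurrent layer (a stand-in, not the MPU)."""
    scale = 0.1
    w_in = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(hid_dim)]
    w_rec = [[rng.uniform(-scale, scale) for _ in range(hid_dim)] for _ in range(hid_dim)]
    bias = [0.0] * hid_dim
    return w_in, w_rec, bias


def step(layer, x, h_prev):
    """One recurrent update: h = tanh(W_in x + W_rec h_prev + b)."""
    w_in, w_rec, bias = layer
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_in[j], x))
            + sum(w * hi for w, hi in zip(w_rec[j], h_prev))
            + bias[j]
        )
        for j in range(len(bias))
    ]


def stacked_forward(layers, sequence):
    """Run a deep RNN; per time step, return the concatenated hidden states of all layers."""
    states = [[0.0] * len(layer[2]) for layer in layers]
    outputs = []
    for x in sequence:
        layer_input = x
        for i, layer in enumerate(layers):
            states[i] = step(layer, layer_input, states[i])
            layer_input = states[i]  # each layer feeds the next one
        # Assumed reading of "stacked": concatenate every layer's state,
        # instead of exposing only the top layer's state.
        outputs.append([v for h in states for v in h])
    return outputs


rng = random.Random(0)
layers = [init_layer(2, 4, rng), init_layer(4, 3, rng)]  # hypothetical sizes
sequence = [[0.1, -0.2], [0.3, 0.0], [-0.1, 0.4]]  # hypothetical pen-offset features
stacked = stacked_forward(layers, sequence)
print(len(stacked), len(stacked[0]))  # 3 time steps, 4 + 3 = 7 stacked features
```

Under this reading, a classifier placed on top sees 7 features per time step rather than only the 3 from the last layer, which is one plausible way the stacked states could increase expressive capacity.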
