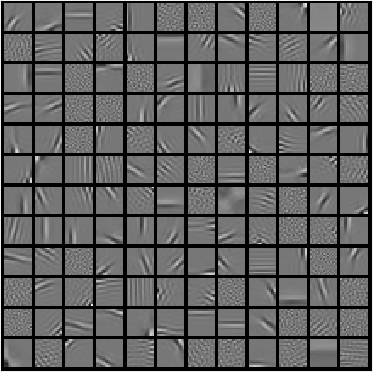

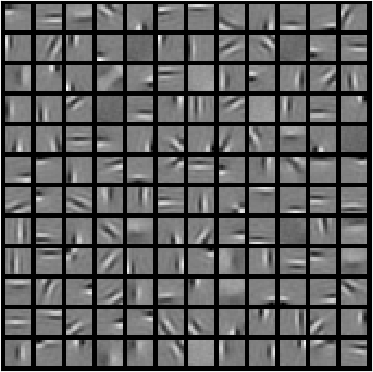

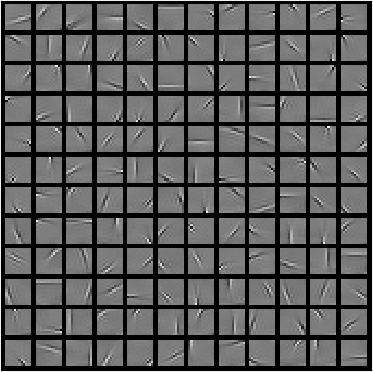

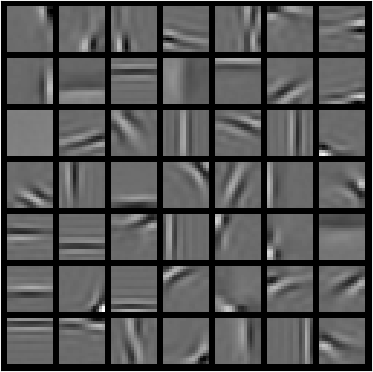

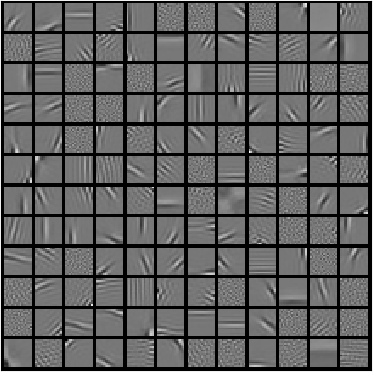

Neural networks, specifically deep convolutional neural networks, have achieved unprecedented performance in various computer vision tasks, but the rationale for the computations and structures of successful neural networks is not fully understood. Theories abound for the aptitude of convolutional neural networks for image classification, but less is understood about why such models would be capable of complex visual tasks such as inference and anomaly identification. Here, we propose a sparse coding interpretation of neural networks that have ReLU activation, and of convolutional neural networks in particular. In sparse coding, when the model's basis functions are assumed to be orthogonal, the optimal coefficients are given by the soft-threshold function applied to the projection of the input image onto the basis functions. In a non-negative variant of sparse coding, the soft-threshold function becomes a ReLU. We first derive these solutions via sparse coding with basis functions assumed to be orthogonal; we then derive the convolutional neural network forward transformation from a modified non-negative orthogonal sparse coding model with an exponential prior parameter for each sparse coding coefficient. Next, we derive a complete convolutional neural network without normalization and pooling by adding logistic regression to a hierarchical sparse coding model. Finally, we motivate potentially more robust forward transformations by maintaining sparse priors in convolutional neural networks as well as performing a stronger nonlinear transformation.
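The link between orthogonal sparse coding and the ReLU can be checked numerically. The sketch below is illustrative only and is not taken from the paper: it assumes the standard L1-penalized objective 0.5 * ||x - Phi a||^2 + sum_i lam_i * |a_i|, a square orthonormal basis `Phi` built by QR decomposition, and an arbitrary penalty value of 0.3. The names `Phi`, `lam`, `soft_threshold`, `relu`, and `objective` are hypothetical. Under these assumptions the minimizer is the soft-threshold of the projection `Phi.T @ x`, and restricting the coefficients to be non-negative turns it into a ReLU with bias `-lam`.

```python
import numpy as np

def soft_threshold(u, lam):
    # Shrink each entry toward zero by lam; entries with |u| <= lam become 0.
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

def relu(u):
    return np.maximum(u, 0.0)

def objective(x, Phi, a, lam):
    # Assumed sparse coding cost: squared reconstruction error plus L1 penalty.
    return 0.5 * np.sum((x - Phi @ a) ** 2) + np.sum(lam * np.abs(a))

rng = np.random.default_rng(0)
n = 8

# Hypothetical orthonormal basis: the Q factor of a random square matrix.
Phi, _ = np.linalg.qr(rng.standard_normal((n, n)))
x = rng.standard_normal(n)   # stand-in for a flattened input image
lam = np.full(n, 0.3)        # one penalty per coefficient (arbitrary value)

u = Phi.T @ x                    # projection of the input onto the basis
a_l1 = soft_threshold(u, lam)    # closed-form solution with signed coefficients
a_nonneg = relu(u - lam)         # closed-form solution with a >= 0

print("soft-threshold coefficients:", a_l1)
print("non-negative (ReLU) coefficients:", a_nonneg)
```

Because each coefficient has its own entry in `lam`, the term `-lam` plays the role of a per-unit bias, which is the sense in which the non-negative solution resembles a linear layer followed by a ReLU.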
