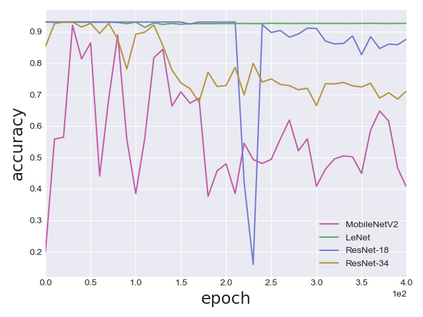

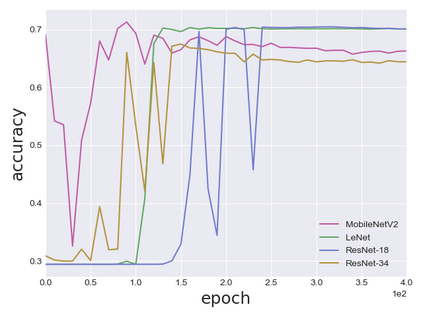

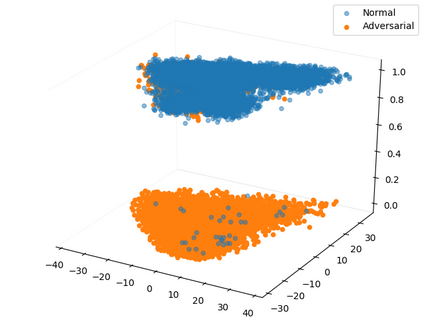

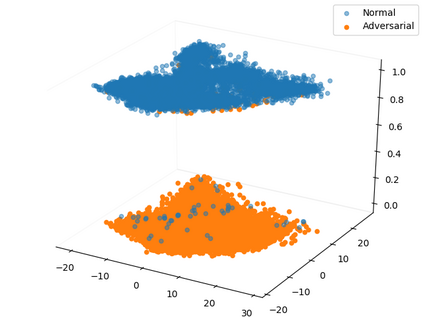

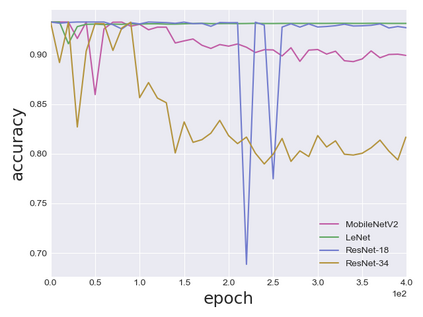

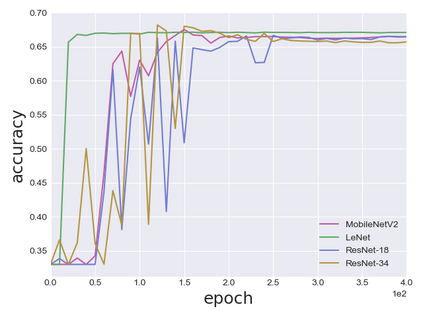

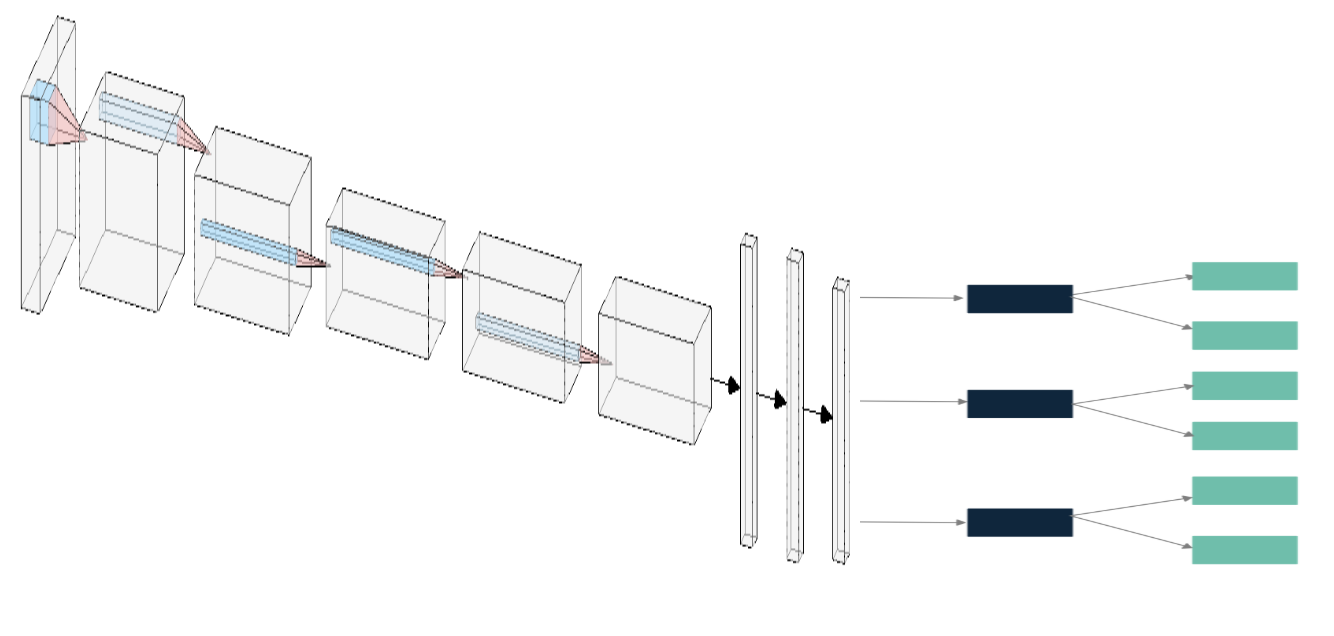

Despite the significant advances in deep learning over the past decade, a major challenge that limits its widespread adoption has been the fragility of deep neural networks to adversarial attacks. This tendency to make erroneous predictions on adversarially perturbed data makes deep neural networks difficult to adopt for certain real-world, mission-critical applications. While much of the research focus has revolved around adversarial example creation and adversarial hardening, performance measures for assessing adversarial robustness have not been well explored. Motivated by this gap, this study presents the concept of residual error, a new performance measure that can not only assess the adversarial robustness of a deep neural network at the individual sample level, but also differentiate between adversarial and non-adversarial examples, thereby facilitating adversarial example detection. Furthermore, we introduce a hybrid model for approximating the residual error in a tractable manner. Experimental results on image classification demonstrate the effectiveness of the proposed residual error metric for assessing several well-known deep neural network architectures. These results illustrate that the proposed measure could be a useful tool not only for assessing the robustness of deep neural networks used in mission-critical scenarios, but also for designing adversarially robust models.

翻译:尽管过去十年来在深层次的深层次学习方面取得了显著进展,但限制广泛采用深层次学习的一大挑战是其易受对抗性攻击的影响。这种对在有对抗性扰动数据的情况下作出错误预测的敏感性,使得某些现实世界、任务关键应用难以采用深神经网络。虽然许多研究重点都围绕对抗性实例的创建和对抗性硬化,但是没有很好地探索评估对抗性强力的业绩计量领域。本研究报告为此提出了残余错误的概念,这是一项新的绩效衡量标准,不仅用于评估单个样本一级深层神经网络的对抗性强力,而且还可用于区分对抗性和非对抗性实例,以便利对敌对性实例的检测。此外,我们采用了混合模型,以易于移动的方式弥补残余错误。使用图像分类的实验结果表明,拟议的残余错误衡量标准对于评估几个众所周知的深层神经网络结构的有效性和有效性。这些结果表明,拟议的措施不仅可以作为评估强健的对立型飞行任务设计模型的有用工具,而且还可以用来评估在所使用的深层神经网络中评估稳健的防御性模型。