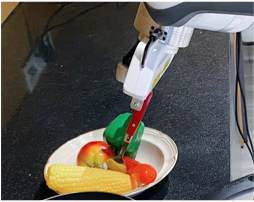

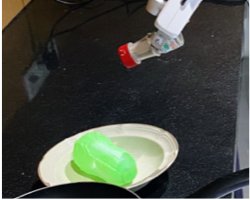

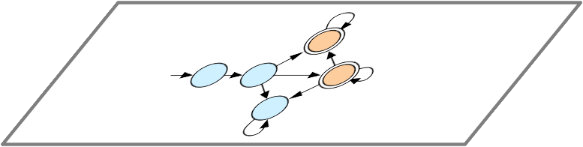

Sequences and time series often arise in robot tasks, e.g., in activity recognition and imitation learning. In recent years, deep neural networks (DNNs) have emerged as an effective data-driven methodology for processing sequences, given sufficient training data and compute resources. When data is limited, however, simpler models such as logic- or rule-based methods work surprisingly well, especially when relevant prior knowledge is applied in their construction. Unlike DNNs, though, these "structured" models can be difficult to extend and do not work well with raw, unstructured data. In this work, we seek to learn flexible DNNs while leveraging prior temporal knowledge when it is available. Our approach is to embed symbolic knowledge expressed in linear temporal logic (LTL) and to use these embeddings to guide the training of deep models. Specifically, we use a graph neural network to construct semantics-based embeddings of automata generated from LTL formulas. Experiments show that these learned embeddings can lead to improvements in downstream robot tasks such as sequential action recognition and imitation learning.
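To make the pipeline described above more concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes a hand-written three-state automaton for the constraint "(not b) U a" over finite traces of single actions (informally, "a must occur before any b"), and it substitutes a single untrained mean-aggregation step for a real graph neural network. All names (`step`, `accepts`, `message_pass`, `embed_automaton`), the alphabet, and the two-dimensional state features are hypothetical choices made for illustration.

```python
# Hypothetical automaton for "(not b) U a" over finite traces.
# q0: waiting for a, q1: a was seen (accepting), q2: b came first (rejecting sink).
STATES = ["q0", "q1", "q2"]
INITIAL, ACCEPTING = "q0", {"q1"}
ALPHABET = ["a", "b", "c"]  # assumed action vocabulary


def step(state, symbol):
    """Return the successor state after reading one symbol."""
    if state == "q0":
        return {"a": "q1", "b": "q2"}.get(symbol, "q0")
    return state  # q1 and q2 are absorbing


def accepts(trace):
    """Check whether a finite action sequence satisfies the constraint."""
    state = INITIAL
    for symbol in trace:
        state = step(state, symbol)
    return state in ACCEPTING


def edges():
    """Directed edges (source, target) of the automaton graph."""
    return {(s, step(s, x)) for s in STATES for x in ALPHABET}


def features(state):
    """Assumed initial node features: [is_initial, is_accepting]."""
    return [float(state == INITIAL), float(state in ACCEPTING)]


def message_pass(h):
    """One round: each state averages its own vector with its predecessors'."""
    new_h = {}
    for s in STATES:
        senders = {src for (src, dst) in edges() if dst == s} | {s}
        dim = len(h[s])
        new_h[s] = [sum(h[n][i] for n in senders) / len(senders)
                    for i in range(dim)]
    return new_h


def embed_automaton(rounds=2):
    """Graph-level embedding: mean of node vectors after message passing."""
    h = {s: features(s) for s in STATES}
    for _ in range(rounds):
        h = message_pass(h)
    dim = len(h[INITIAL])
    return [sum(h[s][i] for s in STATES) / len(STATES) for i in range(dim)]
```

For example, `accepts(["c", "a", "b"])` holds while `accepts(["b", "a"])` does not, and `embed_automaton()` returns a fixed-length vector summarizing the automaton's structure. In a trained model the averaging step would be replaced by learned, parameterized updates, and the resulting embedding would be used as an additional signal when training the sequence model.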
